What Is an Artificial Neural Network?

An intuitive introduction to artificial neural networks.

Neural networks have received a lot of hype in recent years, and for good reason. With a basic understanding of this deep learning theory, we can create technology that solves complex problems with human, and sometimes superhuman, capabilities. Whether it be advanced signal processing, object detection, intelligent decision making, or time series analysis, neural networks are a great way to add intelligence to your projects. Before I explain the details of a neural network, let me tell a short story that should give some inspiration as to why artificial neural networks were created in the first place. If you just want to jump straight into the details of neural networks, skip to the “Modeling the biological neuron” section.

The tale of the investor, the mathematician, and the neuroscientist

Say there is a real estate investor who has data on thousands of properties around the world. This data includes each property's location, the crime rate at that location, the property's square footage, and the population. He also has the price that each property sold for. He wants to find the relationship between the data for each property and the price it sold for. If he finds this relationship, he can apply it to a new house that isn’t in his data and find out the price it will sell at. Then he can easily find houses that are going for less than what he can resell them for.

Okay, so those are the aspirations of a real estate investor. Unfortunately for him, he was never any good at math. So, with riches on his mind, he finds a mathematician to solve his problem. Let’s look at this problem from the mathematician’s perspective.

To the mathematician, we have a series of inputs and outputs of some function. The inputs are the property's location, the crime rate at that location, the property's square footage, and the population. The output is the price the property sold for. This function is the “relationship” that the investor was referring to, but the mathematician sees it as an unknown function that maps the four inputs to the price.
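
In symbols, the mathematician's view of the problem can be written roughly as below. The notation is an assumption made here for illustration: f stands for the unknown function he is trying to find.

```latex
\text{price} = f(\text{location},\ \text{crime rate},\ \text{square footage},\ \text{population})
```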

As the mathematician works tirelessly to find this function, the investor grows impatient. After a couple of days, he loses his patience and becomes business partners with a more experienced investor instead of waiting on the mathematician. This more experienced investor is so good at what he does that he can show up to the property and give a great estimate of what the property can sell for without even using grade school math.

The mathematician, embarrassed, gives up on manually finding this function. Defeated, he asks himself, “How is this problem so difficult to solve using mathematics, but so intuitive to the experienced investor?” Unable to answer this question, he finds a neuroscientist in hopes of understanding how the investor's brain solves the problem.

The neuroscientist, unable to answer exactly how the investor is estimating prices so well, teaches the mathematician how the neurons in the brain work. The mathematician then models these neurons mathematically. After years and years of hard work, this mathematician finally finds out how he can find the relationship between any two related things, including the details of a property and the price it will sell at. He then uses this information to make a hundred million dollars and lives happily ever after.

Modeling the biological neuron

As we have seen from the story above, if we want to model things that are intuitive to humans but incredibly complicated to model mathematically, we must look at how the human brain does it.

The human brain is made of billions of neurons. A simplified picture of a neuron is this: many signals of varying magnitude arrive at the cell body through the dendrites, and if the total incoming signal is strong enough (greater than some threshold that is specific to this neuron), the neuron fires and outputs a signal. This output signal is one of the inputs to another neuron, and the process continues. See the figure below to visualize this:

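So how can we model this mathematically? The neuron receives many inputs of varying magnitude, so the total signal received can be modeled as a weighted sum: each input is multiplied by a weight that represents the strength of its connection, and the results are added together.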
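
As a rough sketch of that total signal, the snippet below adds up each input scaled by a weight. The function name `total_signal` and the example numbers are made up for illustration, and treating the "varying magnitude" of each signal as a multiplicative weight is an assumption based on the weights discussed later in this article.

```python
def total_signal(inputs, weights):
    """Sum of each input scaled by the weight of its connection."""
    if len(inputs) != len(weights):
        raise ValueError("inputs and weights must have the same length")
    return sum(x * w for x, w in zip(inputs, weights))


# Three incoming signals and the (made-up) strength of each connection.
inputs = [0.5, 1.0, 2.0]
weights = [0.5, -1.0, 0.25]
signal = total_signal(inputs, weights)
print(signal)
```

A negative weight lets an input push the total down instead of up, which is one way to picture a signal that discourages the neuron from firing.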

Now, if the total incoming signal is greater than this neuron's threshold, the neuron fires. Mathematically, the neuron outputs 1 if the total signal minus the threshold is greater than zero, and 0 otherwise.
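
Written out in symbols, the firing rule could look like the following. The notation is assumed here for illustration: x_i is the i-th incoming signal, w_i is the weight on that signal, n is the number of inputs, and θ is the neuron's threshold.

```latex
\text{output} =
\begin{cases}
1 & \text{if } \sum_{i=1}^{n} w_i x_i - \theta > 0 \\
0 & \text{otherwise}
\end{cases}
```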

Before we call this a complete model of the neuron, we must address one thing. It is beneficial to get rid of the “if” statement in the above equation. We do this by passing the left side of this equation into what is called the sigmoid function. Let’s look at a graph of what we currently have versus the graph of a sigmoid function.

As you can see, the sigmoid function behaves almost exactly like the step function we had before, except that it rises smoothly from 0 to 1 instead of jumping. The only practical difference is that we don’t have to deal with that ugly if statement. The function is also differentiable now, which is useful for reasons I will explain shortly. Note that other differentiable, nonlinear functions can be used here; some just work better than others.
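
To make the comparison concrete, here is a small sketch that evaluates both functions at a few points. The names `step` and `sigmoid` and the sample values are chosen for illustration; `z` stands for the total incoming signal minus the threshold.

```python
import math


def step(z):
    """Hard threshold: fire (1) only when z is greater than zero."""
    return 1.0 if z > 0 else 0.0


def sigmoid(z):
    """Smooth, differentiable squashing of z into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


# z stands for the total incoming signal minus the threshold.
for z in [-6, -2, 0, 2, 6]:
    print(f"z={z:+d}  step={step(z):.0f}  sigmoid={sigmoid(z):.3f}")
```

Far from zero the two functions nearly agree, and near zero the sigmoid passes gradually through 0.5 rather than jumping. This plain version is only meant for modest values of `z`; a very large negative `z` would overflow `math.exp`.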

Below is our final mathematical representation of a neuron.
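
One way to express this model in code is sketched below: the output is the sigmoid of the weighted sum of the inputs plus a bias. The `bias` term is an assumption made here; it plays the role of the negative threshold, so "sum minus threshold" becomes "sum plus bias". The function and parameter names are illustrative.

```python
import math


def neuron(inputs, weights, bias):
    """One artificial neuron: weighted sum plus bias, passed through a sigmoid."""
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Two inputs whose weighted contributions cancel out, with no bias.
activation = neuron([1.0, 2.0], [0.5, -0.25], 0.0)
print(activation)
```

The output is always strictly between 0 and 1, which can be read as how strongly the neuron is firing.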

We can now update our picture of the neuron to fit our mathematical model:

Combining multiple neurons

Now we understand the workings of one neuron, and we have modeled it mathematically. As I said before, the human brain consists of billions of neurons. These billions of neurons all send and receive signals to and from other neurons, forming a neural network.

Let’s zoom back in on one neuron. This neuron receives a bunch of inputs, then sends a signal to one or more other neurons. These neurons all do the same: they receive a bunch of inputs from other neurons, then send a signal on to more neurons. A brain can be thought of as a big, complicated, tangled-up (gross) web of connected neurons.

When we model a neural network mathematically, we bring some order to this web. Most often, we organize the neurons in layers. Each layer receives input from the previous layer and sends output to the next layer. We give the last layer (the output layer) as many neurons as the number of outputs we want. Below is an example of an artificial neural network (a mathematical model of a neural network), with 2 outputs and 3 inputs:
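
A layered network like this can be sketched in a few lines of code. The version below assumes every neuron is connected to every output of the previous layer and uses the sigmoid neuron described earlier. The hidden layer size of 4 and all of the weight values are arbitrary choices for illustration, not values taken from the example network.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def layer_forward(inputs, weights, biases):
    """Compute one layer: each row of `weights` belongs to one neuron."""
    return [
        sigmoid(sum(x * w for x, w in zip(inputs, neuron_weights)) + b)
        for neuron_weights, b in zip(weights, biases)
    ]


def network_forward(inputs, layers):
    """Feed the inputs through every layer in order."""
    activations = inputs
    for weights, biases in layers:
        activations = layer_forward(activations, weights, biases)
    return activations


# 3 inputs -> 4 hidden neurons -> 2 outputs, with arbitrary example weights.
hidden = ([[0.1, -0.2, 0.3] for _ in range(4)], [0.0] * 4)
output = ([[0.5, -0.5, 0.25, 0.1] for _ in range(2)], [0.1, -0.1])
result = network_forward([1.0, 0.5, -1.0], [hidden, output])
print(result)
```

Each layer's output list becomes the next layer's input list, so the length of the final list matches the number of neurons in the output layer.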

Going back to our real estate investor's problem, the data he has on the property would be the input, and the price of the property would be the output. Recall that he had 4 total inputs and wants those inputs to tell him the price. Now, ask yourself what the number of neurons should be in the first and last layer, with 4 inputs and 1 output.

Your answer should be 4 in the first layer and 1 in the last layer. But how many neurons should we put in between? And how many layers should we have? This is where the exact mathematics stops and trial and error comes in. We call these values hyperparameters. The optimal number of layers and the number of neurons in each layer is different for every problem; it is just something you have to test. Generally, increasing the number of layers and the number of neurons in each layer lets the neural network model more complicated relationships. However, if you have too many layers and neurons, the network will overfit to your data and simply memorize the outputs.
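
To get a feel for how these choices change the size of a network, the sketch below counts the adjustable numbers in a few candidate architectures. It assumes a fully connected network in which every neuron after the input layer has one weight per incoming connection plus one bias; the helper name `count_parameters` and the candidate layer sizes are made up for illustration.

```python
def count_parameters(layer_sizes):
    """Count the weights and biases in a fully connected layered network."""
    weights = sum(n_in * n_out for n_in, n_out in zip(layer_sizes, layer_sizes[1:]))
    biases = sum(layer_sizes[1:])
    return weights + biases


# 4 inputs and 1 output are fixed by the problem; the middle sizes are guesses.
candidates = [[4, 1], [4, 8, 1], [4, 16, 16, 1]]
for sizes in candidates:
    print(sizes, count_parameters(sizes))
```

The count grows quickly as layers are added, which is one way to see why a very large network has enough freedom to memorize a small data set.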

The fundamental idea behind artificial neural networks

So, we have a mathematical model of a neural network. How do we actually make this thing work? How do we make this network actually give us the output we are looking for, given the inputs? Do me a favor and put your brain in sponge mode for just a second and think about the very bold statement below.

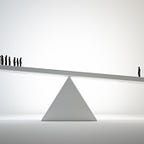

If given enough information in the inputs and given a robust enough architecture, there exists some set of weights that will make the neural network give us the output we want. Our goal is to find these weights.

We can say that for any two related things, there is some complicated function that models their relationship. This is the function the mathematician was trying to find by hand. In his case, the two related things are all the data on the property and the price that the property sold at. It turns out that if we have the correct set of weights in our neural network, we can replicate this relationship.
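
As a toy illustration of the idea that the right weights reproduce a relationship, the sketch below uses a single sigmoid neuron to replicate a very simple one: output 1 only when both inputs are 1. The weights and bias are hand-picked for this example rather than learned, and the relationship itself is an assumption chosen for its simplicity, not part of the real estate problem.

```python
import math


def neuron(inputs, weights, bias):
    z = sum(x * w for x, w in zip(inputs, weights)) + bias
    return 1.0 / (1.0 + math.exp(-z))


# Hand-picked values (an assumption for this toy example, not learned).
AND_WEIGHTS = [10.0, 10.0]
AND_BIAS = -15.0

for a in (0, 1):
    for b in (0, 1):
        out = neuron([a, b], AND_WEIGHTS, AND_BIAS)
        print(a, b, round(out))
```

With different weights the same neuron would compute a different relationship, which is why finding the weights is the whole game.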

With some calculus and code, we can find these weights in the blink of an eye. The process of finding these weights is where biological neural networks and artificial neural networks take their separate paths, however. How real neural networks learn is not fully understood, but using calculus and data we can make our artificial neural networks find the weights that map an input to its output, if such weights exist.

The article on finding these weights, or training our neural network, is posted here.

Thank you for reading! If this post helped you in some way or you have a comment or question then please leave a response below and let me know! Also, if you noticed I made a mistake somewhere, or I could’ve explained something more clearly then I would appreciate it if you’d let me know through a response.

This is a continuation of a series of articles that give an intuitive explanation of neural networks from the ground up. For the other articles see the links below:

Part 1: What is an artificial neural network

Part 2: How to train a neural network from scratch

Part 3: Full implementation of gradient descent