How to train a neural network from scratch

The intuition behind finding the weights of a neural network, with an example.

In this article, I will continue our discussion on artificial neural networks and give an example of a very simple neural network written in Python. The purpose of this series of articles is to give a full explanation of ANNs from the ground up, with no hiding behind special libraries. TensorFlow is great for prototyping and production, but when it comes to education, the only way to learn is to get a pencil and paper and get dirty in math.

In the previous article, I went over the inspiration behind an ANN and how to model the human brain mathematically (you can find this article here). Our next step is to find the correct weights for our model.

Mapping inputs to outputs

Say we have a list of inputs and their outputs. These could be anything: stock features and price, property features and sale price, student behaviors and average test scores. But let’s keep things general and say we have some inputs and outputs.

Our goal is to predict the output for that last input. To do this, we must find the relationship (function) that maps these inputs to their outputs. Now, for the sake of learning, I made this relationship linear. This tells us that we can use the identity activation function instead of the sigmoid, and that there is only one layer (the output layer).

If you don’t see why a linear relationship only gives us one layer with the identity activation function, just bear with me. Let me give you a visual of our neural network and show you the function it represents before I explain.

If we had more layers that used the identity function then we could always simplify it down to the above equation. Try it yourself by adding a middle layer with 2 neurons each with the identity activation function, then write the equation that corresponds to the network and simplify it.
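If you would rather check this with code than on paper, here is a minimal sketch of that exercise. It assumes 3 inputs, a middle layer of 2 neurons, and 1 output; the weight values are hypothetical and chosen only to make the arithmetic easy to follow.

```python
def identity(z):
    # Identity activation: the neuron passes its weighted sum through unchanged.
    return z

# Hypothetical weights, picked only for illustration.
HIDDEN_WEIGHTS = [[0.5, -1.0, 2.0],    # middle neuron 1: one weight per input
                  [1.5, 0.25, -0.5]]   # middle neuron 2: one weight per input
OUTPUT_WEIGHTS = [2.0, -4.0]           # output neuron: one weight per middle neuron

def two_layer(x):
    # Forward pass through the middle layer, then the output layer.
    hidden = [identity(sum(w * xi for w, xi in zip(row, x))) for row in HIDDEN_WEIGHTS]
    return identity(sum(v * h for v, h in zip(OUTPUT_WEIGHTS, hidden)))

# Multiply the two layers out: one effective weight per input.
COLLAPSED_WEIGHTS = [
    sum(OUTPUT_WEIGHTS[j] * HIDDEN_WEIGHTS[j][i] for j in range(len(OUTPUT_WEIGHTS)))
    for i in range(3)
]

def one_layer(x):
    # A single output neuron using the collapsed weights.
    return sum(w * xi for w, xi in zip(COLLAPSED_WEIGHTS, x))

x = [1.0, 2.0, 3.0]
print(two_layer(x), one_layer(x))  # both networks give the same output
```

Whatever input you try, the two networks agree, because the middle layer only regroups the same weighted sum.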

You could easily solve for the weights here using many different methods. After all, it is just a system of linear equations. But let’s try to solve this as if we were in the general case, where we do NOT have a linear relationship.

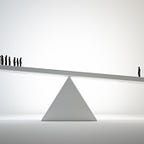

Measuring how wrong we are, using an error function

So, let’s have some fun. How could we solve for the weights without treating this as a system of linear equations? Well, you have to start somewhere, so let’s first just throw a blind guess at what they could be. I’m going to guess that W1 = .2, W2 = 1.4, and W3 = 30.

Now let’s see how good our guess was and test it on one of the inputs (using the same data in the table above).

Recall, the correct output (or expected output) should be 13.5 according to our data; we are way off! Let’s actually quantify how wrong we are by using an error function. When the NN outputs the correct value, we want the error to be 0. Let’s start with: Error = correct output - output of our ANN (e.g., 0 = 13.5 - 13.5 if our weights were correct).
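To make this concrete, here is a small sketch of testing the guess. The weights are the blind guess from above and 13.5 is the expected output from the text, but the input values are hypothetical stand-ins that I picked for illustration, so the exact numbers it prints are only an example.

```python
GUESSED_WEIGHTS = [0.2, 1.4, 30.0]   # W1, W2, W3 from the blind guess
example_input = [9.0, 5.0, 3.75]     # hypothetical input values (an assumption)
correct_output = 13.5                # expected output quoted in the text

def network_output(weights, inputs):
    # One output neuron with the identity activation: just a weighted sum.
    return sum(w * x for w, x in zip(weights, inputs))

prediction = network_output(GUESSED_WEIGHTS, example_input)
error = correct_output - prediction  # first attempt at an error function

print(prediction, error)
```

With these stand-in inputs the guess lands above 120 while the target is 13.5, so the error is a large negative number.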

We are almost there. Think practically: our error is something we want to minimize. Well, what’s the minimum of our current error function? There is no minimum! As it is, the error can go all the way down to -∞. We fix this by squaring the function so it cannot be negative: Error = (correct output - output of our ANN)². Notice that the shape of this error function is just a parabola centered at 0. Now, this is the error in a single output neuron. If we have multiple outputs, then we just take the average error over all of them. So, we have:
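Written out, the averaged squared error looks like the following. The symbols are my own notation for this sketch: n is the number of output neurons, y_k is the correct value for output k, and ŷ_k is what the ANN produced for output k.

$$
E = \frac{1}{n} \sum_{k=1}^{n} \left( y_k - \hat{y}_k \right)^2
$$

With a single output neuron (n = 1), this reduces to the squared error above.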

Minimizing how wrong we are (minimizing our error)

When you change a weight by a very small amount (∂W), you get a change in error (∂E). So, if we want to find how much a change in this weight affects the error, we can take the ratio of the two, ∂E/∂W. This can be recognized as the partial derivative of the error function with respect to this weight, or how a small change in this weight changes the error.
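You can see this ratio in action by literally nudging a weight. The sketch below assumes the squared error of a single output neuron and the guessed weights from earlier; the input values and the size of the nudge are hypothetical choices for illustration.

```python
def squared_error(weights, inputs, correct_output):
    # Squared error of one identity-activation output neuron.
    output = sum(w * x for w, x in zip(weights, inputs))
    return (correct_output - output) ** 2

weights = [0.2, 1.4, 30.0]     # the blind guess for W1, W2, W3
inputs = [9.0, 5.0, 3.75]      # hypothetical input values (an assumption)
correct_output = 13.5

delta_w = 1e-6                 # a very small change in W3
nudged = list(weights)
nudged[2] += delta_w

# Ratio of the change in error to the change in the weight.
delta_e = (squared_error(nudged, inputs, correct_output)
           - squared_error(weights, inputs, correct_output))
numerical_slope = delta_e / delta_w

# What calculus gives for this error function: -2 * (correct - output) * input.
output = sum(w * x for w, x in zip(weights, inputs))
analytic_slope = -2.0 * (correct_output - output) * inputs[2]

print(numerical_slope, analytic_slope)
```

The two numbers agree to several digits, which is all the partial derivative is: the limit of that ratio as the nudge shrinks.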

How does this help us? Well, if you are good at calculus you may know where this is headed. But, if you are not, take a look at the graph below.

This is just an arbitrary function. Take any point on this line, and put your finger on it. Now, say out loud whether the slope at this point is positive or negative. If the slope is positive, move your finger along the line a little bit in the +X direction. If it is negative, move it in the -X direction. If the slope is 0…pick another point. Do this for a couple of points. Notice anything?

No matter what, you will always be moving your finger towards a higher value on the line.

The direction of the derivative is always the direction of ascent.

If the derivative is negative, a decrease in X will increase Y. If the derivative is positive, an increase in X will increase Y.
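Here is the same finger exercise as a small sketch. The function f(x) = x³ - 3x, the starting points, and the step size are all hypothetical choices; the claim only holds for a small enough step.

```python
def f(x):
    # An arbitrary example curve.
    return x ** 3 - 3 * x

def slope(x):
    # Derivative of f.
    return 3 * x ** 2 - 3

step = 0.01      # "a little bit"
went_up = []

for x in [-2.0, 0.0, 2.0]:
    # Move in +X if the slope is positive, in -X if it is negative.
    direction = 1 if slope(x) > 0 else -1
    moved = x + direction * step
    went_up.append(f(moved) > f(x))
    print(x, slope(x), f(moved) > f(x))
```

Each starting point ends up at a higher value of f, whether the slope there was positive or negative.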

Back to our NN with ∂E/∂W. We want to decrease our error, so we want to move our weight in the direction of descent. We can do this by computing this derivative, then negating it. Now, we move our weight a tiny step in the direction of this negated derivative. Or:
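In symbols, the update for a single weight can be sketched as follows. Here η is my own name for the size of the tiny step (often called the learning rate), and the arrow means "replace the old value with the new one".

$$
W_i \leftarrow W_i - \eta \, \frac{\partial E}{\partial W_i}
$$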

This is just for one weight in our network. We can write this expression in matrix form, remembering that the gradient is just a vector containing all the derivatives ∂E/∂Wi. The matrix form is shown below:
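Stacking every weight into one vector W, the same step can be sketched like this. As before, η is an assumed symbol for the step size, and m is the number of weights.

$$
\mathbf{W} \leftarrow \mathbf{W} - \eta \, \nabla E,
\qquad
\nabla E =
\begin{bmatrix}
\dfrac{\partial E}{\partial W_1} \\
\vdots \\
\dfrac{\partial E}{\partial W_m}
\end{bmatrix}
$$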

Now the above equation can be repeatedly applied to the weights until they converge to the correct values. Finding this gradient is a bit of a pain if our network is larger, but it is always possible as long as we use differentiable activation functions. The repeated application of this equation is called the gradient descent algorithm.

Quick Summary

Let me summarize the past 2 pages. We have data and some outputs. We know there is some relationship between this data and the outputs. Inspired by the human brain, we can construct an artificial neural network. We initialize this neural network with random weights, then start testing it on the data and outputs we have. As we are testing, we quantify how wrong our network is by using the error function, then minimize this error function using gradient descent.

Example

Now that we have an idea about the theory behind training a neural network, we need to focus on how to implement this theory. Earlier, I gave an example of some inputs and outputs and laid out the game plan for finding their relationship.

It’s tough, almost impossible, to find the correct weights manually (without treating this as a system of linear equations). But by using the gradient descent algorithm and the error function that we derived earlier, we will do this the right way and find these weights with code.

Finding the gradient of our function

Right now, this is our neural network from input to error. Remember, for learning’s sake we are ignoring the bias. We will add it in our next project.

We want to find the derivative of the error with respect to each weight (how much a small change in each weight changes the error), then move that weight a tiny amount in the direction of the negated derivative (or the direction of steepest descent). We can do this using the chain rule as shown below:
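For our one-layer network, the chain rule works out as sketched here. The notation is my own: y is the correct output, ŷ is the output of the ANN, and x_i is the input attached to weight W_i.

$$
E = \left( y - \hat{y} \right)^2,
\qquad
\hat{y} = \sum_{j} W_j x_j
$$

$$
\frac{\partial E}{\partial W_i}
= \frac{\partial E}{\partial \hat{y}} \cdot \frac{\partial \hat{y}}{\partial W_i}
= -2 \left( y - \hat{y} \right) x_i
$$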

That’s it, we found our gradient! Now we just need to iteratively apply the gradient descent update to make our weights converge. Here is the code to do this:
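What follows is a minimal sketch of such a training loop in plain Python, not a definitive implementation. The training inputs are hypothetical stand-ins, the targets are generated from the weights [.5, .9, 1.2], and the learning rate of 0.01 and the averaging of the gradient over all training examples are my own assumptions.

```python
# Hypothetical training inputs; the targets are generated from the true weights.
TRUE_WEIGHTS = [0.5, 0.9, 1.2]
INPUTS = [[1.0, 2.0, 3.0],
          [2.0, 1.0, 0.0],
          [0.0, 3.0, 1.0],
          [3.0, 0.0, 2.0]]

def predict(weights, x):
    # One output neuron, identity activation, no bias.
    return sum(w * xi for w, xi in zip(weights, x))

TARGETS = [predict(TRUE_WEIGHTS, x) for x in INPUTS]

def gradient(weights, inputs, targets):
    # dE/dW_i = -2 * (correct - output) * x_i, averaged over the examples.
    n = len(inputs)
    grad = [0.0] * len(weights)
    for x, y in zip(inputs, targets):
        residual = y - predict(weights, x)
        for i, xi in enumerate(x):
            grad[i] += -2.0 * residual * xi / n
    return grad

def train(weights, inputs, targets, learning_rate=0.01, steps=2000):
    # Repeatedly move each weight a small step against its derivative.
    weights = list(weights)
    for _ in range(steps):
        grad = gradient(weights, inputs, targets)
        weights = [w - learning_rate * g for w, g in zip(weights, grad)]
    return weights

learned = train([0.2, 1.4, 30.0], INPUTS, TARGETS)  # start from the blind guess
print([round(w, 4) for w in learned])
```

If the learning rate is set too high for your data, the weights blow up instead of converging, so shrink it if you see the numbers growing.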

Updating the weights 2000 times is usually enough. If you guessed ridiculous weights that were in the tens of thousands or higher, it may take more. Take a look at the weights you ended up with after running this code. Did you get [.5, .9, 1.2]? Well, those are the exact weights I used to generate this data.

Isn’t this incredible?! By using calculus and data we can approximate the relationship between any 2 related things! You might say, “well this is an easy example, I could have solved this in 2 minutes treating it as a system of linear equations”. Well, you’re exactly right. But what if there were 5 or 6 layers, each with 1000+ neurons that each used the sigmoid activation function? Solving by hand in any way is out the window. What we need is a systematic algorithm that can find the gradient for the weights in our network, which I go over here.

Thank you for reading! If this post helped you in some way, or you have a comment or question, then please leave a response below and let me know! Also, if you noticed I made a mistake somewhere, or I could’ve explained something more clearly, then I would appreciate it if you’d let me know through a response.

This is a continuation of a series of articles that give an intuitive explanation of neural networks from the ground up. For the other articles see the links below:

Part 1: What is an artificial neural network

Part 2: How to train a neural network from scratch

Part 3: Full implementation of gradient descent