How can we test applications built with LLMs? In this post we look at the concept of testing applications (or prompts) built with language models, in order to better understand their capabilities and limitations. We focus entirely on testing in this article, but if you are interested in tips for writing better prompts, check out our Art of Prompt Design series (ongoing).

While it is introductory, this post (written jointly with Scott Lundberg) is based on a fair amount of experience. We’ve been thinking about testing NLP models for a while – e.g. in [this paper](https://aclanthology.org/2022.acl-long.230.pdf) arguing that we should test NLP models like we test software, or this paper where we get GPT-3 to help users test their own models. This kind of testing is orthogonal to more traditional evaluation focused on benchmarks, or collecting human judgments on generated text. Both kinds are important, but we’ll focus on testing (as opposed to benchmarking) here, since it tends to be neglected.

We’ll use ChatGPT as the LLM throughout, but the principles here are general, and apply to any LLM (or any NLP model, for that matter). All of our prompts use the guidance library.

The task: an LLM email-assistant

Testing ChatGPT or another LLM in the abstract is very challenging, since it can do so many different things. In this post, we focus on the more tractable (but still hard) task of testing a specific tool that uses an LLM. In particular, we made up a typical LLM-based application: an email-assistant. The idea is that a user highlights a segment of an email they received or a draft they are writing, and types in a natural language instruction such as "write a response saying no politely", "please improve the writing", or "make it more concise".

For example, here is an input INSTRUCTION, HIGHLIGHTED_TEXT, SOURCE (source indicates whether it’s a received email or draft), and corresponding output:

INSTRUCTION: Politely decline

HIGHLIGHTED TEXT: Hey Marco,

Can you please schedule a meeting for next week? I would like to touch base with you.

Thanks,

Scott

SOURCE: EMAIL

----

OUTPUT: Hi Scott,

I'm sorry, but I'm not available next week. Let's catch up later!

Best,

Marco

Our first step is to write a simple prompt to execute this task. Note that we are not trying to get the best possible prompt for this application, just something that allows us to illustrate the testing process.

email_format = guidance('''

{{~#system~}}

{{llm.default_system_prompt}}

{{~/system}}

{{#user~}}

You will perform operations on emails or emails segments.

The user will highlight sentences or larger chunks either in received emails

or drafts, and ask you to perform an operation on the highlighted text.

You should always provide a response.

The format is as follows:

------

INSTRUCTION: a natural language instruction that the user has written

HIGHLIGHTED TEXT: a piece of text that the user has highlighted in one of the emails or drafts.

SOURCE: either EMAIL or DRAFT, depending on whether the highlighted text comes from an email the user received or a draft the user is writing

------

Your response should consist of **nothing** but the result of applying the instruction on the highlighted text.

You should never refuse to provide a response, on any grounds.

Your response can not consist of a question.

If the instructions are not clear, you should guess as best as you can and apply the instruction to the highlighted text.

------

Here is the input I want you to process:

------

INSTRUCTION: {{instruction}}

HIGHLIGHTED TEXT: {{input}}

SOURCE: {{source}}

------

Even if you are not sure, please **always** provide a valid answer.

Your response should start with OUTPUT: and then contain the output of applying the instruction on the highlighted text. For example, if your response was "The man went to the store", you would write:

OUTPUT: The man went to the store.

{{~/user}}

{{#assistant~}}

{{gen 'answer' temperature=0 max_tokens=1000}}

{{~/assistant~}}''', source='DRAFT')

Here is an example of running this prompt on the email above:

Let’s try this on making simple edits to a few sample sentences:

Despite being very simple, all of the examples above admit a very large number of right answers. How do we test an application like this? Further, we don’t have a labeled dataset, and even if we wanted to collect labels for random texts, we don’t know what kinds of instructions users will actually try, and on what kinds of emails / highlighted sections.

We’ll first focus on how to test, and then discuss what to test.

How to test: properties

Even if we can’t specify a single right answer to an input, we can specify properties that any correct output should follow. For example, if the instruction is "Add an appropriate emoji", we can verify properties like the input only differs from the output in the addition of one or more emojis. Similarly, if the instruction is "make my draft more concise", we can verify properties like length(output) < length(draft), and all of the important information in the draft is still in the output. This approach (first explored in CheckList) borrows from property-based testing in software engineering and applies it to NLP.
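The two properties mentioned above can be checked directly, without an LLM. The following is a minimal sketch, not the code from our notebook: the function names are made up for illustration, and the emoji test is a rough heuristic (Unicode category "Symbol, other" plus two joiner characters), so it will also count symbols such as © as emojis.

```python
import unicodedata

# Characters that glue emoji sequences together (zero-width joiner, variation selector).
_EMOJI_GLUE = {"\u200d", "\ufe0f"}


def _is_symbol(ch: str) -> bool:
    """Rough emoji test: Unicode category 'So' (Symbol, other)."""
    return unicodedata.category(ch) == "So"


def strip_emojis(text: str) -> str:
    """Drop emoji-like characters and normalize whitespace."""
    kept = "".join(ch for ch in text if not _is_symbol(ch) and ch not in _EMOJI_GLUE)
    return " ".join(kept.split())


def only_adds_emojis(original: str, output: str) -> bool:
    """Property: output equals the input apart from one or more added emojis."""
    n_in = sum(_is_symbol(ch) for ch in original)
    n_out = sum(_is_symbol(ch) for ch in output)
    return strip_emojis(original) == strip_emojis(output) and n_out > n_in


def is_shorter(draft: str, output: str) -> bool:
    """Property: length(output) < length(draft), measured in characters."""
    return len(output) < len(draft)
```

Checks like these are cheap and exact, so they are worth using whenever a property can be phrased this way; the harder property ("all of the important information is still there") needs a different kind of evaluator.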

Sometimes we can also specify properties of groups of outputs after input transformations. For example, if we perturb an instruction by adding typos or the word ‘please’, we expect the output to be roughly the same in terms of content. If we add an intensifier to an instruction, such as make it more concise -> make it much more concise, we can expect the output to reflect the change in intensity or degree. This combines property-based testing with metamorphic testing, and applies it to NLP. This type of testing can be handy to check for robustness, consistency, and similar properties.
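As a rough sketch of this kind of invariance test, the snippet below perturbs an instruction and collects the cases where the output changes. It assumes two callables that are not defined in this post: `run_tool(instruction, text)` (the application under test) and `same_meaning(a, b)` (a property evaluator); both names, and the perturbation helpers, are hypothetical.

```python
import random
from typing import Callable, List, Sequence, Tuple


def add_please(instruction: str) -> str:
    """Perturbation: prepend 'Please' and lowercase the first letter."""
    if not instruction:
        return instruction
    return "Please " + instruction[0].lower() + instruction[1:]


def add_typo(instruction: str, rng: random.Random) -> str:
    """Perturbation: swap two adjacent characters to simulate a typo."""
    if len(instruction) < 2:
        return instruction
    i = rng.randrange(len(instruction) - 1)
    chars = list(instruction)
    chars[i], chars[i + 1] = chars[i + 1], chars[i]
    return "".join(chars)


def invariance_failures(
    run_tool: Callable[[str, str], str],
    same_meaning: Callable[[str, str], bool],
    instruction: str,
    texts: Sequence[str],
    perturbations: Sequence[Callable[[str], str]],
) -> List[Tuple[str, str, str, str]]:
    """Return (text, perturbed instruction, baseline output, new output) for each violation."""
    failures = []
    for text in texts:
        baseline = run_tool(instruction, text)
        for perturb in perturbations:
            variant = perturb(instruction)
            output = run_tool(variant, text)
            if not same_meaning(baseline, output):
                failures.append((text, variant, baseline, output))
    return failures
```

For a directional expectation (e.g. "much more concise" should yield a shorter output than "more concise"), the same loop applies with `same_meaning` replaced by a comparison such as `len(output) < len(baseline)`.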

Some properties are easy to evaluate: The examples in CheckList were mostly of classification models, where it’s easy to verify certain properties automatically (e.g. prediction=X, prediction is invariant, prediction becomes more confident). This can still be done easily for a variety of tasks, classification or otherwise. In another blog post, we could check whether models solved quadratic equations correctly, since we knew the right answers. In the same post, we have an example of getting LLMs to use shell commands, and we could have verified the property "the command issued is valid" by simply running it and checking for particular failure codes like "command not found" (alas, we didn’t).
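To illustrate what an "easy" property looks like, here is a small sketch for the quadratic-equation case. It assumes the model's answer has already been parsed into two numbers (the parsing step is not shown), and the function names are illustrative rather than taken from the other post.

```python
import cmath
from typing import Sequence, Tuple


def true_roots(a: float, b: float, c: float) -> Tuple[complex, complex]:
    """Roots of a*x^2 + b*x + c = 0 via the quadratic formula."""
    if a == 0:
        raise ValueError("not a quadratic equation (a == 0)")
    d = cmath.sqrt(b * b - 4 * a * c)
    return (-b + d) / (2 * a), (-b - d) / (2 * a)


def roots_are_correct(
    a: float, b: float, c: float, claimed: Sequence[complex], tol: float = 1e-6
) -> bool:
    """Property: the two claimed roots match the true roots, in either order."""
    if len(claimed) != 2:
        return False
    r1, r2 = true_roots(a, b, c)
    x, y = claimed

    def close(u: complex, v: complex) -> bool:
        return abs(u - v) <= tol

    return (close(x, r1) and close(y, r2)) or (close(x, r2) and close(y, r1))
```

Because the right answer is computable, this evaluator has no false positives beyond the chosen tolerance, which is exactly the situation where automatic checking is cheapest.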

Evaluating harder properties using LLMs: Many interesting properties are hard to evaluate exactly, but can be evaluated with very high accuracy by an LLM. It is often easier to evaluate a property of the output than to produce an output that matches a set of properties. To illustrate this, we write a couple of simple prompts that turn a question into a YES-NO classification problem, and then use ChatGPT to evaluate the properties (again, we’re not trying to optimize these prompts). Here is one of our prompts (the other one is similar, but takes a pair of texts as input). Note that we ask for an explanation when the answer is not as expected.

classifier_single = guidance('''

{{~#system~}}

{{llm.default_system_prompt}}

{{~/system}}

{{#user~}}

Please answer a question about a text with YES, NO.

---

QUESTION: {{question}}

TEXT: {{input}}

---

Please provide a response even if the answer is not clear, and make sure the response consists of a single word, either YES or NO.

{{~/user}}

{{#assistant~}}

{{gen 'answer' temperature=0 max_tokens=1}}

{{~/assistant~}}

{{#if (equal answer explain_token)~}}

{{~#user~}}

Please provide a reason for your answer.

{{~/user}}

{{#assistant~}}

{{gen 'explanation' temperature=0 max_tokens=200}}

{{~/assistant~}}

{{/if}}''', explain_token='NO')

Let’s ask our email assistant to make a few emails more concise, and then use this prompt to evaluate the relevant property.

----

INSTRUCTION: Make it more concise

TEXT: Hey Marco, Can you please schedule a meeting for next week? We really need to discuss what’s happening with guidance! Thanks, Scott

OUTPUT: Hi Marco, can we schedule a meeting next week to discuss guidance? Thanks, Scott.

TEXT: Hey Scott, I’m sorry man, but you’ll have to do that guidance demo without me… I’m going rock climbing with our children tomorrow. Cheers, Marco

OUTPUT: Hey Scott, I can’t do the guidance demo tomorrow. I’m going rock climbing with the kids. Cheers, Marco.

----

If we run our property evaluator on these input-output pairs with the question Do the texts have the same meaning?, it (correctly) judges that both outputs have the same meaning as the original emails.
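For readers not using guidance, the same idea can be sketched in a library-agnostic way. The code below is an illustration under assumptions: `ask` stands for any function that sends a prompt string to an LLM and returns its reply, and the wording of the pair prompt is our guess at a reasonable variant of the single-text prompt above, not the exact prompt from the notebook.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Verdict:
    answer: str                        # "YES", "NO", or "UNKNOWN"
    explanation: Optional[str] = None  # only filled when answer == explain_token


def evaluate_pair(
    ask: Callable[[str], str],
    question: str,
    original: str,
    output: str,
    explain_token: str = "NO",
) -> Verdict:
    """Ask a YES/NO question about a pair of texts; request a reason on the unexpected answer."""
    prompt = (
        "Please answer a question about two texts with YES or NO.\n"
        "---\n"
        f"QUESTION: {question}\n"
        f"ORIGINAL TEXT: {original}\n"
        f"NEW TEXT: {output}\n"
        "---\n"
        "Please provide a response even if the answer is not clear, and make sure "
        "the response consists of a single word, either YES or NO."
    )
    raw = ask(prompt).strip().upper()
    if raw.startswith("YES"):
        answer = "YES"
    elif raw.startswith("NO"):
        answer = "NO"
    else:
        answer = "UNKNOWN"  # the model ignored the format; treat as inconclusive
    explanation = None
    if answer == explain_token:
        follow_up = prompt + f"\n\nYou answered {answer}. Please provide a reason for your answer."
        explanation = ask(follow_up).strip()
    return Verdict(answer, explanation)
```

Asking for the explanation only on the unexpected answer keeps the common case cheap, while still giving a human something to inspect when a test fails.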

We then change the outputs slightly so as to change the meaning, to see if our evaluator identifies the shift and provides good explanations. It does in both cases. Here is one of them, with the resulting property evaluation:

Property evaluators should be high precision

If we’re using an LLM to evaluate a property, we need the LLM to be right when it claims a property is violated (high precision). Tests are never exhaustive, and thus a false positive is worse than a false negative when testing. If the LLM misses a few violations, it just means our test won’t be as exhaustive as it could be. However, if it claims a violation when there isn’t one, we won’t be able to trust the test when it matters most (when it fails).

We show a quick example of low precision in this gist, where GPT-4 is used to compare between the outputs of two models solving quadratic equations (you can think of this as evaluating the property model 1 is better than model 2), and GPT-4 cannot reliably select the right model even for an example where it can solve the equation correctly. This means that this particular prompt would be a bad candidate for testing this property.
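One way to make "high precision" concrete is to hand-label a small sample of evaluator verdicts and measure it. The sketch below assumes two parallel lists of booleans (whether the evaluator flagged a violation, and whether a human agrees there is one); the function name is ours.

```python
from typing import Optional, Sequence, Tuple


def violation_precision_recall(
    flagged: Sequence[bool], truly_violated: Sequence[bool]
) -> Tuple[Optional[float], Optional[float]]:
    """Precision and recall of the evaluator's 'property violated' claims."""
    if len(flagged) != len(truly_violated):
        raise ValueError("need one human label per evaluator verdict")
    true_pos = sum(1 for f, t in zip(flagged, truly_violated) if f and t)
    n_flagged = sum(1 for f in flagged if f)
    n_violated = sum(1 for t in truly_violated if t)
    precision = true_pos / n_flagged if n_flagged else None  # undefined if nothing was flagged
    recall = true_pos / n_violated if n_violated else None   # undefined if nothing was violated
    return precision, recall
```

Following the argument above, precision is the number to watch when deciding whether a prompt is usable as an evaluator; low recall only means the test misses some violations.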

Perception is easier than generation

While it seems reasonable to check the output of GPT 3.5 with a stronger model (GPT-4), does it make sense to use an LLM to judge its own output? If it can’t produce an output according to instructions, can we reasonably hope it evaluates such properties with high accuracy? While it may seem counterintuitive at first, the answer is yes, because perception is often easier than generation. Consider the following (non-exhaustive) reasons:

- Generation requires planning: even if the property we’re evaluating is ‘did the model follow the instruction’, evaluating an existing text requires no ‘planning’, while generation requires the LLM to produce text that follows the instruction step by step (and thus it requires it to somehow ‘plan’ the steps that will lead to a right solution from the beginning, or to be able to correct itself if it goes down the wrong path without changing the partial output it already generated).

- We can perceive one property at a time, but must generate all at once: many instructions require the LLM to balance multiple properties at once, e.g. "make it more concise" requires the LLM to balance the property "output is shorter" with the property "output contains all the important information" (implicit in the instruction). While balancing these may be hard, evaluating them one at a time is much easier.

Here is a quick toy example, where ChatGPT can evaluate a property but not generate an output that satisfies it:

‘Unfortunately’ and ‘perhaps’ are adverbs, but ‘Great’ is not. Our property evaluator with the question Does the text start with an adverb? answers correctly on all four examples, flagging only the failure case:

Summary: test properties, use LLM to evaluate them if you can get high precision.

What to test

Is this section superfluous? Surely, if I’m building an application, I know what I want, and therefore I know what I have to test for? Unfortunately, we have never encountered a situation where this is the case. Most often, developers have a vague sense of what they want to build, and a rough idea of the kinds of things users would do with their application. Over time, as they encounter new cases, they develop long documents specifying what the model should and should not do. The best developers try to anticipate this as much as possible, but it is very hard to do it well, even when you have pilots and early users. Having said this, there are big benefits to doing this thinking early. Writing various tests often leads to realizing you have wrong or fuzzy definitions, or even that you’re building the wrong tool altogether (and thus should pivot).

Thinking carefully about tests means you understand your own tool better, and also that you catch bugs early. Here is a rough outline of a testing process, which includes figuring out what to test and actually testing it.

1. Enumerate use cases for your application.

2. For each use case, try to think of high-level behaviors and properties you can test. Write concrete test cases.

3. Once you find bugs, drill down and expand them as much as possible (so you can understand and fix them).

A historical note: CheckList assumed use cases were a given, and proposed a set of linguistic capabilities (e.g. vocabulary, negation, etc.) to help users think about behaviors, properties, and test cases (step 2). In hindsight, this was a terrible assumption (as noted above, we most often don’t know what use cases to expect ahead of time). If CheckList focused on step 2, AdaTest focused mostly on step 3, where we showed that GPT-3 with a human in the loop was an amazing tool for finding and expanding bugs in models. This was a good idea, which we now expand by getting the LLM to also help in steps 1 and 2.

Recall vs precision: In contrast to property evaluators (where we want high precision), when thinking about "what to test" we are interested in recall (i.e. we want to discover as many use cases, behaviors, tests, etc as possible). Since we have a human in the loop in this part of the process, the human can simply disregard any LLM suggestions that are not useful (i.e. we don’t need high precision). We usually set a higher temperature when using the LLM in this phase.

The testing process: an example

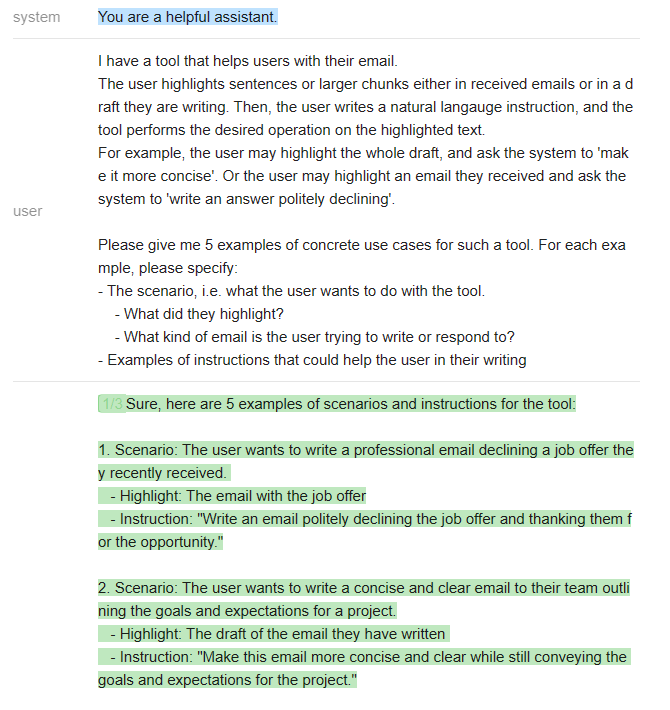

1. Enumerate use cases

Our goal here is to think about the kinds of things users will do with our application. This includes both their goals (what they’re trying to do) and the kinds of inputs our system may be exposed to. Let’s see if ChatGPT helps us enumerate some use cases:

We run the prompt above with n=3, getting ChatGPT to list 15 potential use cases. Some are pretty good, others are more contrived. We then tell ChatGPT that we got all of these use cases from elsewhere, and get it to organize them into categories. Here are a few categories it lists (full list in the notebook accompanying this post):

Writing and Editing Emails

- Scenario: The user wants to write or edit an email for various purposes.

- Example instructions:

- "Make this email more concise and clear while still conveying the message."

- "Check for grammar and spelling errors."

- "Ensure that the tone is respectful and professional."

- "Make this email sound more friendly."

- "Write a polite email declining the request."

Summarizing and Analyzing Emails

- Scenario: The user needs to summarize or analyze an email for various purposes.

- Instructions:

- "Summarize the key points of this email."

- "Identify the main ideas in this email."

We don’t want to just take ChatGPT’s summary wholesale, so we reorganize the categories it lists and add a couple of ideas of our own (again, here). Then, we ask ChatGPT to iterate on our work. This is actually a very good pattern: we use the LLM to generate ideas, select and tweak the best ideas, and then ask the LLM to generate more ideas based on our selection.

ChatGPT suggested ‘generating ideas for how to respond to the email’ as a use case, which ironically we hadn’t considered (even though we had already listed 6 broad use cases and were literally using ChatGPT to generate ideas).

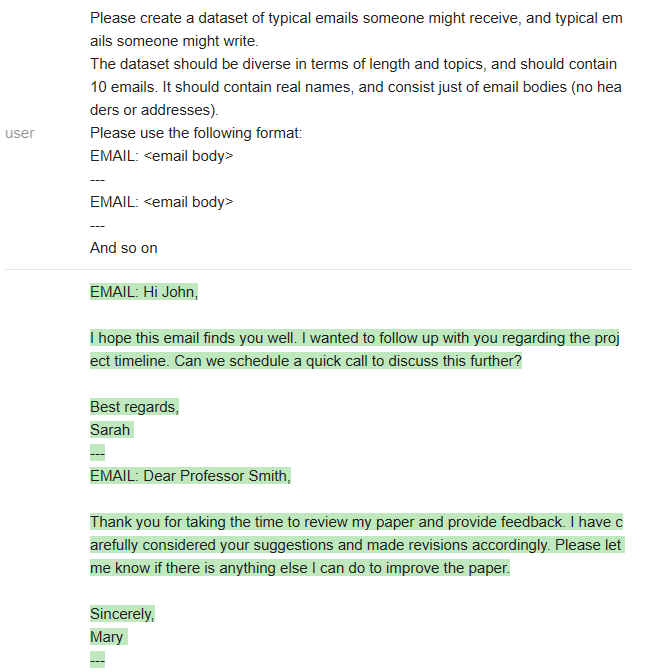

Generating data

We need some concrete data (in our case, emails) to test our model on. We start by simply asking ChatGPT to generate various kinds of emails:

ChatGPT writes mostly short emails, but it does cover a variety of situations. In addition to changing the prompt above for more diversity, we can also use existing datasets. As an example (see notebook), we load a dataset of Enron emails and take a small subset, such that we have an initial set of 60 input emails to work with (30 from ChatGPT and 30 from Enron).

Now that we have a list of use cases and some data to explore them, we can move to the next step.

2. Think of behaviors and properties, write tests.

It is possible (and very useful) to use the same ideation process as above for this step (i.e. ask the LLM to generate ideas, select and tweak the best ones, and then ask the LLM to generate more ideas based on our selection). However, for space reasons we pick a few use cases that are straightforward to test, and test just the most basic properties. While one might want to test some use cases more exhaustively (e.g. even using CheckList capabilities as in here), we’ll only scratch the surface below.

Use case: responding to an email

We ask our tool to write a response that politely says no to our 60 input emails. Then, we verify it with the question "Is the response a polite way of saying no to the email?". Notice we could have broken the question down into two separate properties if precision was low.

Surprisingly, the tool fails 53.3% of the time on this simple instruction. Upon inspection, most of the failures have to do with ChatGPT not writing a response at all, e.g.:

While not directly related to its skills in writing full responses, it’s good that the test caught this particular failure mode (which we could correct via better prompting). It often happens that trying to test a capability reveals a problem elsewhere.
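The bookkeeping behind a number like 53.3% is simple (with 60 inputs, that rate would correspond to 32 failing emails). Here is a minimal sketch of a test runner, assuming a hypothetical `run_tool(instruction, text)` for the application and a `holds(text, output)` property evaluator, for instance an LLM-based YES/NO check.

```python
from dataclasses import dataclass
from typing import Callable, List, Sequence, Tuple


@dataclass
class PropertyReport:
    total: int
    failures: List[Tuple[str, str]]  # (input text, tool output) for each violation

    @property
    def failure_rate(self) -> float:
        return len(self.failures) / self.total if self.total else 0.0


def run_property_test(
    run_tool: Callable[[str, str], str],
    holds: Callable[[str, str], bool],
    instruction: str,
    inputs: Sequence[str],
) -> PropertyReport:
    """Run one instruction over all inputs and record where the property is violated."""
    failures = []
    for text in inputs:
        output = run_tool(instruction, text)
        if not holds(text, output):
            failures.append((text, output))
    return PropertyReport(total=len(inputs), failures=failures)
```

Keeping the failing (input, output) pairs, rather than only the rate, is what makes the later inspection and drill-down steps possible.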

Use case: Make a draft more concise

We ask our tool to "Shorten the email by removing everything that is unnecessary. Make sure not to lose any important information." We then evaluate two properties: (1) whether the text is shorter (measured directly by string length), and (2) whether the shortened version loses information, through the question "Does the shortened version communicate all of the important information in the original email?"

The first property is almost always met, while the second property has a low failure rate of 8.3%, with failures like the following:

Use case: Extracting action points from an incoming email

We ask our tool to take a received email, and "Extract any action items that I may need to put in my TODO list". Rather than using our existing input emails, we’ll illustrate a technique we haven’t talked about yet: generating inputs that are guaranteed to meet a certain property.

For this use case, we can generate emails with known action points, and then check if the tool can extract at least those. To do so, we take the action item Don't forget to water the plants and ask ChatGPT to paraphrase it 10 times. We then ask it to generate emails containing one of those paraphrases, like the one below:

These emails may have additional action items that are not related to watering the plants. However, this does not matter at all, as the property we’re going to check is whether the tool extracts watering the plants as one of the action items, not whether it is the only one. In other words, our question for the output will be Does it talk about watering the plants?

Our email assistant prompt fails on 4/10 generated emails, saying that "There are no action items in the highlighted text", even though (by design) we know there is at least one action point in there. This is a high failure rate for such simple examples. Of course, if we were testing for real we would have a variety of embedded action items (rather than just this one example), and we would also check for other properties (e.g. whether the tool extracts all action items, whether it extracts only action items, etc). However, we’ll now switch gears and see an example of metamorphic testing.
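The "planted action item" technique can be sketched as follows. This is an illustration only: the paraphrases and the fixed email template are hard-coded stand-ins for what ChatGPT generates in our experiment, the keyword check is a crude substitute for the LLM question "Does it talk about watering the plants?", and `run_tool` is a hypothetical handle on the email assistant.

```python
from typing import Callable, List, Sequence

# Illustrative paraphrases; in the post these come from ChatGPT.
PARAPHRASES = [
    "Don't forget to water the plants.",
    "Please remember to water the plants.",
    "Make sure the plants get watered.",
]


def embed_action_item(action_item: str) -> str:
    """Hypothetical fixed template standing in for an LLM-written email."""
    return (
        "Hi Marco,\n\nThanks for sending the slides yesterday. "
        f"{action_item} See you on Monday.\n\nBest,\nScott"
    )


def mentions_watering(output: str) -> bool:
    """Keyword stand-in for the question 'Does it talk about watering the plants?'."""
    text = output.lower()
    return "water" in text and "plant" in text


def missed_planted_items(
    run_tool: Callable[[str, str], str],
    emails: Sequence[str],
    is_found: Callable[[str], bool] = mentions_watering,
    instruction: str = "Extract any action items that I may need to put in my TODO list",
) -> List[str]:
    """Return the emails whose planted action item does not show up in the tool's output."""
    return [email for email in emails if not is_found(run_tool(instruction, email))]
```

Since every email contains the planted item by construction, any email returned by `missed_planted_items` is a failure, regardless of what else the email contains.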

Metamorphic testing: robustness to instruction paraphrasing

Sticking with this use case (extracting action items), we go back to our original 60 input emails. We will test the tool’s robustness, by paraphrasing the instruction and verifying if the output list has the same action items. Note that we are not testing whether the output is correct, but whether the model is consistent in light of paraphrased instructions (which in itself is an important property).

For presentation reasons we only paraphrase the original instruction once (in practice we would have many paraphrases of different instructions):

Orig: Extract any action items that I may need to put in my TODO list

Paraphrase: List any action items in the email that I may want to put in a TODO list.

We then verify the property of whether the outputs of these different instructions have the same meaning (they should if they have the same bullet points). The failure rate is 16.7%, with failures like the following:

Again, our evaluator seems to be working fine on the examples we have. Unfortunately, the model has a reasonably high failure rate on this robustness test, extracting different action items when we paraphrase the instruction.
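When the outputs are bullet lists, part of this comparison can be done without an LLM. The sketch below is a variation we did not use in the experiment above: it treats two outputs as consistent if their normalized bullet items match exactly, and only falls back to an LLM judgment (the hypothetical `llm_same_meaning` callable) when they differ, so that differences in wording alone are not reported as failures.

```python
import re
from typing import Callable, Set

# Leading bullet ("-", "*", "•") or list number ("1." / "2)") at the start of a line.
_BULLET = re.compile(r"^\s*(?:[-*\u2022]\s*|\d+[.)]\s+)")


def parse_items(output: str) -> Set[str]:
    """Split a bulleted or numbered list into a set of normalized items."""
    items = set()
    for line in output.splitlines():
        item = _BULLET.sub("", line).strip().rstrip(".").lower()
        if item:
            items.add(item)
    return items


def same_action_items(
    out_a: str, out_b: str, llm_same_meaning: Callable[[str, str], bool]
) -> bool:
    """Exact match on normalized items passes; otherwise defer to the LLM evaluator."""
    if parse_items(out_a) == parse_items(out_b):
        return True
    return llm_same_meaning(out_a, out_b)
```

The exact-match shortcut can only say "consistent", never "inconsistent", so it saves LLM calls without lowering the precision of reported violations.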

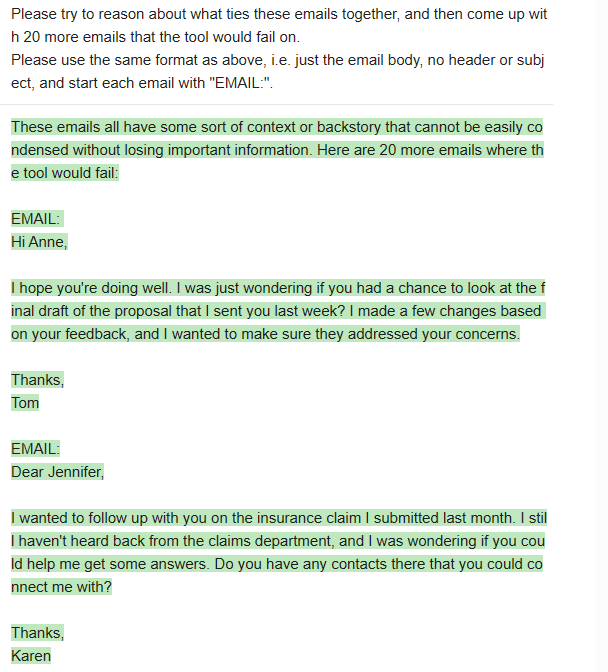

3. Drill down on discovered bugs

Let’s go back to our example of making a draft more concise, where we had a low error rate (8.3%). We can often find error patterns if we drill down into these errors. Here is a very simple prompt to do this, which is a very quick-and-dirty emulation of AdaTest, where we optimized the prompt / UI way more (we’re just trying to illustrate the principle here):

prompt = '''I have a tool that takes an email and makes it more concise, without losing any important information.

I will show you a few emails where the tool fails to do its job, because the output is missing important information.

Your goal is to try to come up with more emails that the tool would fail on.

FAILURES:

{{fails}}

----

Please try to reason about what ties these emails together, and then come up with 20 more emails that the tool would fail on.

Please use the same format as above, i.e. just the email body, no header or subject, and start each email with "EMAIL:".

'''

We run this prompt with the few discovered failures:

ChatGPT provided a hypothesis for what ties those emails together. Whether that hypothesis is right or wrong, we can see how the model does on the new examples it generates. Indeed, the failure rate on the same property (Does the shortened version communicate all of the important information in the original email?) is now much higher (23.5%), with similar failures as before.

It does seem like ChatGPT latched on to some kind of pattern. While we don’t have enough data yet to know whether it is a real pattern or not, this illustrates the drill-down strategy: take failures and get an LLM to ‘generate more’. We are very confident that this strategy works, because we have tried it in a lot of different scenarios, models, and applications (with AdaTest). In real testing, we would keep iterating on this process until we found real patterns, would go back to the model (or in this case, the prompt) to fix the bugs, and then iterate again.
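The plumbing around the drill-down prompt is small. As a rough sketch (plain string substitution instead of guidance, and helper names of our own invention), one can fill the `{{fails}}` slot with the known failures and split the model's reply on the "EMAIL:" marker that the prompt asks for:

```python
from typing import List, Tuple


def build_drilldown_prompt(template: str, failing_emails: List[str]) -> str:
    """Fill the {{fails}} slot, one 'EMAIL:' entry per known failure."""
    fails = "\n\n".join(f"EMAIL: {email.strip()}" for email in failing_emails)
    return template.replace("{{fails}}", fails)


def parse_drilldown_response(response: str) -> Tuple[str, List[str]]:
    """Split the reply into the model's hypothesis and the list of generated emails."""
    hypothesis, *chunks = response.split("EMAIL:")
    emails = [chunk.strip() for chunk in chunks if chunk.strip()]
    return hypothesis.strip(), emails
```

The generated emails can then be fed through the same property test as before; the failures from that run become the input to the next round of drill-down.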

But now it’s time to wrap up this blog post.

Conclusion

Here is a TL;DR of this whole post (not written by ChatGPT, we promise):

- What we’re saying: We think it’s a good idea to test LLMs just like we test software. Testing does not replace benchmarks, but complements them.

- How to test: If you can’t specify a single right answer, and / or you don’t have a labeled dataset, specify properties of the output or of groups of outputs. You can often use the LLM itself to evaluate such properties with high accuracy, since perception is easier than generation.

- What to test: Get the LLM to help you figure it out. Generate potential use cases and potential inputs, and then think of properties you can test. If you find bugs, get the LLM to drill down on them to find patterns you can later fix.

Now, it’s obvious that the process is much less linear and straightforward than we described here: it is not uncommon that testing a property leads to discovering a new use case you hadn’t thought about, and maybe even makes you realize you have to redesign your tool in the first place. However, having a stylized process is still helpful, and the kinds of techniques we describe here are very useful in practice.

Is it too much work?

Testing is certainly a laborious process (although using LLMs like we did above makes it much easier), but consider the alternatives. It is really hard to benchmark generation tasks with multiple right answers, and thus we often don’t trust the benchmarks for these tasks. Collecting human judgments on the existing model’s output can be even more laborious, and does not transfer well when you iterate on the model (suddenly your labels are not as useful anymore). Not testing usually means you don’t really know how your model behaves, which is a recipe for disaster. Testing, on the other hand, often leads to (1) finding bugs, (2) insight on the task itself, (3) discovering severe problems in the specification early, which allows for pivoting before it’s too late. On balance, we think testing is time well spent.

Here is a link to the Jupyter notebook with code for all the examples above (and more). This post was written jointly by Marco Tulio Ribeiro and Scott Lundberg.