MLOps Specialization Series

For all the hype around machine learning models, they are not useful unless deployed into production to deliver business value.

Andrew Ng and DeepLearning.AI deeply understood this, and created the MLOps specialization to share their practical experiences on productionized ML systems.

In this article, I summarize the lessons so that you can skip the hours of online videos while still being able to glean the key insights.

Contents

(1) Overview of Course 1 (2) Key Lessons (3) PDF Lecture Notes

This summary article covers Course 1 of the 4-course MLOps specialization. Follow this page to stay updated with content from subsequent courses.

Overview of Course 1

The first course of the MLOps Specialization is titled Introduction to Machine Learning in Production, and gives an introduction to the development, deployment, and continuous improvement of an end-to-end productionized ML system.

The course is organized around the components of an ML project lifecycle, which I found to be a useful framework for structuring ML initiatives.

Key Lessons

In the spirit of the course’s emphasis on practical application, I will be sharing key takeaways focused on pragmatic advice.

Part 1 – Scoping

Start with the right questions and focus

- Begin by identifying the most valuable business problems (not AI problems or solutions) together with the business owners.

- A good question to initiate discussions is "What are the top 3 things you wish were working better?".

- Evaluate a project’s technical feasibility by referring to external benchmarks (e.g. literature, competitors), assessing human level performance (for unstructured data), or seeing if there are appropriate features which are predictive of the outcome (for structured data).

- Technical and business teams should agree on a set of common metrics, as ML teams tend to overly focus on technical metrics (e.g. F1 score) while stakeholders focus on business metrics (e.g. revenue).

Part 2 – Data

Different practices for different data problems

- Data problems are categorized based on the size and type of data:

  - Unstructured data: Use data augmentation along with human labelling to get more training data, as it is easy to generate data like audio or images.

  - Structured data: It is difficult to create more tabular data with data augmentation, so focus should be on the quality of data and labels.

  - Small datasets: Ensure examples are labelled in a clean and consistent manner by manually reviewing them, because clean labels have a huge impact on model performance for small data.

  - Big datasets: It is impractical to review every label manually, so emphasis should be on setting up well-defined, scalable data processes.

- Seek advice from people who have worked in the same data quadrant as your ML problem, because different scenarios have different practices.

Improving labelling consistency

- Labelling consistency is also important for big datasets as they may comprise rare events that must be accurately labelled for good model performance e.g. rare diagnoses amidst a large dataset of X-ray images.

- To establish labelling standards, get multiple labelers to label the same examples. Where there are disagreements, gather the team (along with a subject matter expert) to iron out these discrepancies.

- Remember to write down and document these agreed upon definitions.

- The goal is to have standardized definitions and practices such that subsequent labelling tasks are done in a clean and consistent manner.

- Improving labelling consistency is an iterative process, so consider repeating the process until disagreements are resolved as far as possible.
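
To make the process above concrete, here is a minimal sketch of how one might surface the examples that labelers disagree on, so the team knows which ones to discuss. This is my own illustration and not code from the course: the function names, the image IDs, and the labels are all hypothetical.

```python
from collections import Counter


def find_disagreements(labels_by_example):
    """Return the examples whose labelers did not all agree, with vote counts."""
    disagreements = {}
    for example_id, labels in labels_by_example.items():
        counts = Counter(labels)
        if len(counts) > 1:  # more than one distinct label was given
            disagreements[example_id] = dict(counts)
    return disagreements


def agreement_rate(labels_by_example):
    """Fraction of examples on which every labeler gave the same label."""
    if not labels_by_example:
        return 1.0
    unanimous = sum(
        1 for labels in labels_by_example.values() if len(set(labels)) == 1
    )
    return unanimous / len(labels_by_example)


# Hypothetical labels from three labelers on four X-ray images.
labels = {
    "img_001": ["normal", "normal", "normal"],
    "img_002": ["pneumonia", "normal", "pneumonia"],
    "img_003": ["normal", "normal", "normal"],
    "img_004": ["fracture", "fracture", "normal"],
}

to_review = find_disagreements(labels)  # examples to bring to the team
rate = agreement_rate(labels)           # track this across iterations
print(to_review)
print(f"Agreement rate: {rate:.0%}")
```

Re-running a check like this after each round of updated labelling guidelines gives a simple way to see whether consistency is actually improving.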

Part 3 – Modelling

Data-centric instead of model-centric

- Traditionally, the focus has been on tweaking and improving the model code while keeping the dataset fixed.

- For practical projects, it is actually more useful to adopt a data-centric approach, where you focus on feeding the model with high-quality data while holding the model fixed.

- Often a reasonable algorithm with good data will do just fine, and may even outperform a great algorithm fed with not so good data.

- Aim to work towards a practical system that works, so start off with basic established models (e.g. from existing open-source projects) instead of obsessing over the latest state-of-the-art algorithms.

Good average performance is not good enough

- An ML system will not be acceptable for deployment if its performance on a set of disproportionately important examples is poor, even if it has good test set performance on average.

- Assess model performance separately on key slices of data to ensure there is no discrimination based on particular features (e.g. ethnicity, gender, location), and that the model also performs well on rare cases.
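
As a rough illustration of slice-based evaluation, the sketch below computes accuracy per slice alongside the overall figure. It is my own example rather than anything from the course: the `location` feature, the record layout, and the numbers are made up for demonstration.

```python
from collections import defaultdict


def accuracy_by_slice(records, slice_key):
    """Compute accuracy separately for each value of `slice_key`."""
    correct = defaultdict(int)
    total = defaultdict(int)
    for record in records:
        group = record[slice_key]
        total[group] += 1
        correct[group] += int(record["label"] == record["prediction"])
    return {group: correct[group] / total[group] for group in total}


# Hypothetical evaluation records: true label, model prediction, and a slice feature.
records = [
    {"location": "urban", "label": 1, "prediction": 1},
    {"location": "urban", "label": 0, "prediction": 0},
    {"location": "urban", "label": 1, "prediction": 1},
    {"location": "urban", "label": 0, "prediction": 0},
    {"location": "rural", "label": 1, "prediction": 0},
    {"location": "rural", "label": 0, "prediction": 0},
]

overall = sum(r["label"] == r["prediction"] for r in records) / len(records)
per_slice = accuracy_by_slice(records, "location")

print(f"Overall accuracy: {overall:.2f}")
for group, acc in per_slice.items():
    print(f"  {group}: {acc:.2f}")
```

In this toy data the overall accuracy looks reasonable, while the smaller rural slice performs much worse, which is exactly the kind of gap an average hides.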

Part 4 – Deployment

Deployment patterns

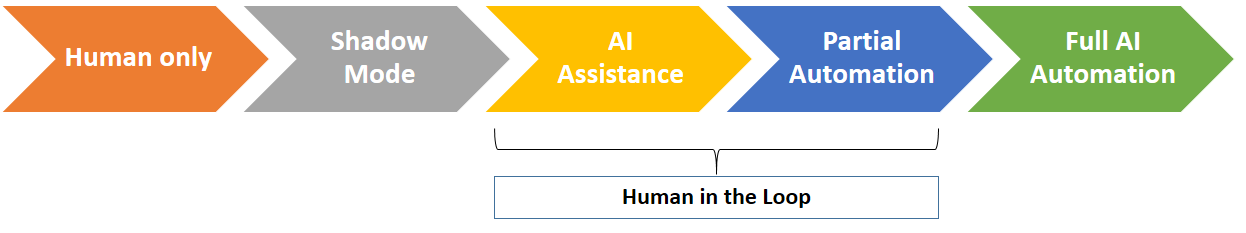

- Deployment should not be framed as a binary outcome (i.e. deploy or not deploy), but rather as a spectrum of varying degrees of automation.

- The degree of automation to use is highly dependent on the use case, model performance and comfort level of the business.

- The end goal need not be full automation. For instance, partial automation with a human in the loop can be an ideal design for AI-based interpretation of medical scans, with human judgement coming in for cases where prediction confidence is low.

- Common ways to deploy ML systems include:

  - Shadow Mode Deployment: The ML model runs in parallel with the existing human workflow, as a means of verifying its performance before letting it replace humans in making real decisions on the ground.

  - Canary Deployment: Roll out the ML model on a small fraction (e.g. 5%) of the traffic/data initially, and then ramp it up gradually with close performance monitoring.

  - Blue-Green Deployment: Replace (gradually or immediately) an existing old (blue) ML model by rerouting traffic/data to the new (green) model.
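
To give a feel for how a canary rollout might split traffic, here is a minimal sketch that routes a fixed fraction of requests to the new model. This is only one possible approach and is not taken from the course: the `route_request` function, the request ID format, and the hash-based bucketing are my own assumptions.

```python
import hashlib


def route_request(request_id, canary_fraction):
    """Deterministically send a fixed share of requests to the new model.

    Hashing the request ID (rather than drawing a random number) means the
    same ID is always routed to the same model for a given fraction.
    """
    digest = hashlib.sha256(request_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 0x100000000  # value in [0, 1)
    return "new_model" if bucket < canary_fraction else "old_model"


# Start with roughly 5% of traffic on the new model (hypothetical request IDs).
routes = [route_request(f"req-{i}", 0.05) for i in range(10_000)]
share = routes.count("new_model") / len(routes)
print(f"Share routed to new model: {share:.1%}")
```

Ramping up is then a matter of raising `canary_fraction` step by step while watching the monitoring metrics, and setting it to 1.0 completes the switch to the new model.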

System Monitoring

- After deploying an ML system for the first time, you are actually only halfway to the finish line as **there is still plenty of work to do. This includes system monitoring, model updating and retraining, and handling data changes such as concept drift and data drift**.

- For monitoring, brainstorm the things that could possibly go wrong, and come up with several metrics to monitor (ideally in a dashboard).

- These can be organized into 3 broad categories: input metrics (e.g. no. of missing values), output metrics (e.g. no. of null output responses), and software metrics (e.g. server load, latency).

- After selecting a set of metrics to monitor, a common practice would be to set thresholds for alarms.
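
A threshold-based alarm can be as simple as the sketch below, which compares the latest metric values against upper limits. The metric names and threshold values are hypothetical examples that I picked to cover the three categories; real values would depend on your system.

```python
def check_thresholds(metrics, thresholds):
    """Return an alert message for every metric that exceeds its threshold."""
    alerts = []
    for name, limit in thresholds.items():
        value = metrics.get(name)
        if value is not None and value > limit:
            alerts.append(f"{name}={value} exceeds threshold {limit}")
    return alerts


# Hypothetical upper limits: one input, one output, and one software metric.
thresholds = {
    "missing_value_rate": 0.05,  # input metric
    "null_output_rate": 0.01,    # output metric
    "p95_latency_ms": 500,       # software metric
}

# Hypothetical values from the latest monitoring window.
metrics = {
    "missing_value_rate": 0.12,
    "null_output_rate": 0.0,
    "p95_latency_ms": 320,
}

alerts = check_thresholds(metrics, thresholds)
for alert in alerts:
    print("ALERT:", alert)
```

In practice, the thresholds themselves tend to need adjusting over time as you learn which alarms are useful and which are noise.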

Lecture Notes

As a token of appreciation, here’s the link to the GitHub repo with the PDF lecture notes I compiled based on the slides and transcripts. To stay updated with new releases of compilation notes from subsequent courses, please feel free to star the repo as well.

Conclusion

At the end of the day, it is important to remember that ML model code is only a small part (~5–10%) of a successful ML system, and the objective should be to create value by placing ML models into production.

I hope these takeaways have given you some fresh perspectives and valuable insights, regardless of whether you have had experience with ML production or not.

These learning points are just a subset of the many nuggets of practical advice, so do check out the course notes and videos.

I welcome you to join me on a Data Science learning journey! Give this Medium page a follow to stay in the loop of more data science content (and subsequent MLOps notes released), or reach out to me on LinkedIn. Have fun learning about ML production systems!

Ready for more?

Continue to Part 2 right here!