Introduction

Time magazine named Greta Thunberg, 16 years old and laser-focused on her mission to save our planet, its ‘Person of the Year’ for 2019. Greta has been speaking courageously around the world about the impending danger (some even call it an existential crisis) that we humans collectively face as a species: climate change and environmental damage, accelerated primarily by human activities.

To understand the impact of such an epoch-changing global event, we need tons of scientific data, high-fidelity visualization capability, and robust predictive models.

Weather prediction and climate modeling are therefore at the forefront of humanity’s fight against climate change. But they are not easy enterprises. For all the advances in Big Data analytics and scientific simulation capabilities, Earth-scale problems of this kind remain intractable for present-day hardware and software stacks.

Can we call on the power of Artificial Intelligence, and in particular, that of deep neural networks, to aid us in this enterprise?

Deep learning speeds up this complex task

The WRF model

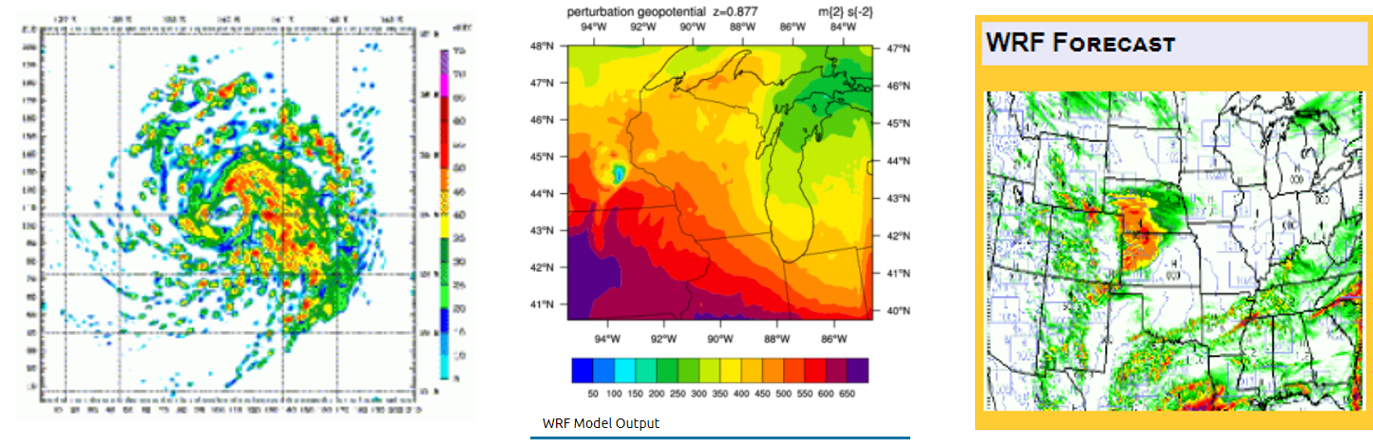

In the United States, the majority of weather prediction services are based on a comprehensive mesoscale model called Weather Research and Forecasting (WRF).

The model serves a wide range of meteorological applications across scales from tens of meters to thousands of kilometers. As Jiali Wang, an environmental scientist at the U.S. Department of Energy’s (DOE) Argonne National Laboratory, puts it succinctly – "It describes everything you see outside of your window, from the clouds to the sun’s radiation, to snow to vegetation – even the way skyscrapers disrupt the wind."

The effort to develop WRF began in the latter part of the 1990s as a collaborative partnership, principally among government agencies and universities: the National Center for Atmospheric Research (NCAR), the National Oceanic and Atmospheric Administration (NOAA), the Air Force Weather Agency (AFWA), the Naval Research Laboratory (NRL), the University of Oklahoma (OU), and the Federal Aviation Administration (FAA). The bulk of the work on the model has been performed or supported by NCAR, NOAA, and AFWA.

It has grown to have a large worldwide community of users (over 30,000 registered users in over 150 countries), supported by workshops and tutorials, which are held year-round. In fact, WRF is used extensively for research and real-time forecasting throughout the world. It features two dynamical cores, a data assimilation system, and a software architecture supporting parallel computation and system extensibility.

Marriage of myriad variables

Perhaps it is not surprising that such a comprehensive model has to deal with a myriad of weather-related variables and their highly complicated inter-relationships. It also turns out to be impossible to describe these complex relationships with a set of unified, analytic equations. Instead, scientists try to approximate the equations using a method called parameterization in which they model the relationships at a scale greater than that of the actual phenomena.

Although this method yields sufficient predictive accuracy in many situations, it comes at a significant computational cost. Scaling, therefore, becomes a liability.

Can the magical power of deep learning tackle this problem? Environmental and computational scientists from Argonne National Lab are certainly hopeful. They are collaborating to use deep neural networks (DNN) to replace the parameterizations of certain physical schemes in the WRF model, hoping to significantly reduce simulation time without compromising the fidelity and predictive power.

Deep learning replaces complex physics-based models

The team at Argonne used 20 years of physics-based, model-generated data from the WRF to train the neural networks and two years of data to evaluate whether the trained deep-learning models could provide a sufficiently accurate alternative to the physics-based parameterizations.
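To make the train/evaluate setup concrete, here is a minimal sketch of a chronological split: 20 years for training, two years held out for evaluation. The year range, the record layout, and the choice of holding out the *last* two years are assumptions made for illustration; the article does not say which years the team held out.

```python
def split_by_year(records, n_eval_years=2):
    """Split (year, sample) records so the most recent years are held out.

    `records` is a hypothetical list of (year, sample) tuples.
    """
    years = sorted({year for year, _ in records})
    if n_eval_years <= 0 or n_eval_years >= len(years):
        raise ValueError("n_eval_years must leave at least one training year")
    eval_years = set(years[-n_eval_years:])
    train = [r for r in records if r[0] not in eval_years]
    evaluation = [r for r in records if r[0] in eval_years]
    return train, evaluation


# 22 hypothetical years of model-generated samples (one placeholder per year).
records = [(year, f"sample-{year}") for year in range(2000, 2022)]
train, evaluation = split_by_year(records)
print(len(train), "training years,", len(evaluation), "evaluation years")
```

Splitting by time rather than at random keeps the evaluation years entirely unseen during training, which is the usual way to check whether a model generalizes to new weather rather than memorizing old weather.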

If successful, this will enable them to create a dense grid (imagine it as a three-dimensional map of the Earth and the atmosphere) over which quantities of interest, such as wind speed or humidity, can be computed. The denser the grid, the higher the quality of visualization and the better the chance of accurate, locality-specific prediction. Only fast computation over such a dense grid, that is, results from a mathematical DNN model rather than from a detailed simulation model, can make this dream a reality.

In particular, the scientists focused their study on the so-called planetary boundary layer (PBL), the lowest part of the atmosphere and the one that human activity affects the most. It extends only a few hundred meters above the Earth’s surface, and the meteorological dynamics in this layer, such as wind velocity, temperature, and humidity profiles, are critical in determining many of the physical processes in the rest of the atmosphere and on Earth.

Two streams of data, one from Kansas and another from Alaska, totaling 10,000 data points and spanning 8 days, were fed into the DNNs. The study showed that DNNs trained on the relationships between the input and output variables can successfully simulate wind velocities, temperature, and water vapor over time.
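The core idea, a network that learns the input-to-output mapping of a physics scheme, can be sketched in a few lines of NumPy. Everything below is an illustrative assumption, not the Argonne team's architecture or data: `toy_physics` is a made-up stand-in for an expensive parameterization, the network is a single hidden layer trained by plain gradient descent, and the sizes and learning rate are arbitrary.

```python
import numpy as np


def toy_physics(X):
    """Hypothetical stand-in for a costly physics-based parameterization."""
    return np.sin(X[:, :1]) + 0.5 * X[:, 1:]


def train_surrogate(X, y, hidden=16, lr=0.1, epochs=2000, seed=0):
    """Fit a one-hidden-layer tanh network with full-batch gradient descent."""
    rng = np.random.default_rng(seed)
    n, n_in = X.shape
    n_out = y.shape[1]
    W1 = rng.normal(0.0, 0.5, (n_in, hidden))
    b1 = np.zeros(hidden)
    W2 = rng.normal(0.0, 0.5, (hidden, n_out))
    b2 = np.zeros(n_out)
    for _ in range(epochs):
        H = np.tanh(X @ W1 + b1)            # hidden activations
        pred = H @ W2 + b2                  # linear output layer
        err = pred - y                      # prediction error per sample
        gW2 = H.T @ err / n                 # gradients of 0.5 * mean squared error
        gb2 = err.mean(axis=0)
        dH = (err @ W2.T) * (1.0 - H ** 2)  # backpropagate through tanh
        gW1 = X.T @ dH / n
        gb1 = dH.mean(axis=0)
        W1 -= lr * gW1
        b1 -= lr * gb1
        W2 -= lr * gW2
        b2 -= lr * gb2

    def predict(X_new):
        return np.tanh(X_new @ W1 + b1) @ W2 + b2

    return predict


# Synthetic inputs in [-1, 1]; the last 100 samples are held out for testing.
rng = np.random.default_rng(0)
X = rng.uniform(-1.0, 1.0, (500, 2))
y = toy_physics(X)
predict = train_surrogate(X[:400], y[:400])
test_mse = float(np.mean((predict(X[400:]) - y[400:]) ** 2))
print("held-out mean squared error:", test_mse)
```

Once trained, calling `predict` is just two small matrix multiplications, which is where the hoped-for speedup over running the full physics scheme would come from.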

The results also show that a trained DNN from one location can predict behavior across nearby locations with correlations higher than 90%. That means that if we have large quantities of high-quality data available for Kansas, we can hope to speed up the weather prediction activity for Missouri too, even if not much data is collected in that state.
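A correlation figure like this one is typically computed by comparing the network's predictions at the nearby site with reference values for that site. The sketch below assumes the Pearson correlation coefficient (the article does not name the measure), and the wind-speed numbers are invented purely for illustration.

```python
import math


def pearson_r(predicted, reference):
    """Pearson correlation between two equal-length sequences of numbers."""
    if len(predicted) != len(reference) or len(predicted) < 2:
        raise ValueError("need two sequences of equal length >= 2")
    n = len(predicted)
    mean_p = sum(predicted) / n
    mean_r = sum(reference) / n
    cov = sum((p - mean_p) * (r - mean_r) for p, r in zip(predicted, reference))
    var_p = sum((p - mean_p) ** 2 for p in predicted)
    var_r = sum((r - mean_r) ** 2 for r in reference)
    if var_p == 0 or var_r == 0:
        raise ValueError("correlation is undefined for a constant sequence")
    return cov / math.sqrt(var_p * var_r)


# Made-up wind speeds (m/s) at a nearby site: reference values vs. predictions
# from a network trained elsewhere.
reference = [3.0, 4.5, 6.0, 5.5, 4.0]
predicted = [3.2, 4.4, 5.7, 5.8, 4.1]
r = pearson_r(predicted, reference)
print("correlation:", round(r, 3))
```

Note that a high correlation means the predictions rise and fall with the reference values; it does not by itself rule out a constant offset, so an error measure is usually reported alongside it.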

A petabyte-sized playground for deep learning

The appetite of DNNs for data is never satiated, and they have plenty to munch on in this kind of weather modeling study. The Argonne Leadership Computing Facility (ALCF) is a Department of Energy (DOE) Office of Science user facility, where massive supercomputers are always humming to produce meteorological data from complex simulations. Add the high-performance computing (HPC) resources of the National Energy Research Scientific Computing Center at Lawrence Berkeley National Laboratory, and you get close to a petabyte of data describing 300 years of North American weather to feed to the DNNs.

Summary

Climate change is upon us, perhaps manifesting itself as the biggest existential crisis that humankind has ever faced. On the other hand, transformative technologies like artificial intelligence, coupled with Cloud and Big Data analytics, are promising unimaginable wealth and prosperity for the human race.

Can we use the latter to solve the former? This article describes a thoughtful scientific effort towards that mission. There are many such efforts, captured as a summary in this article.

If you have any questions or ideas to share, please contact the author at tirthajyoti[AT]gmail.com. Also, you can check the author’s GitHub repositories for code, ideas, and resources in Machine Learning and data science. If you are, like me, passionate about AI/machine learning/data science, please feel free to add me on LinkedIn or follow me on Twitter.

Tirthajyoti Sarkar – Sr. Principal Engineer – Semiconductor, AI, Machine Learning – ON…