…in under 5 Minutes

If you’re reading this, then you probably know what you’re looking for.

Most Image Segmentation tutorials online use pre-processed, labeled datasets that already include both ground truth images and masks. This is hardly ever the case when you work on a similar task in a real project. I faced this exact issue and spent COUNTLESS hours looking for a simple yet COMPLETE example while working on an Instance Segmentation project. I couldn’t find one, so I decided to write my own.

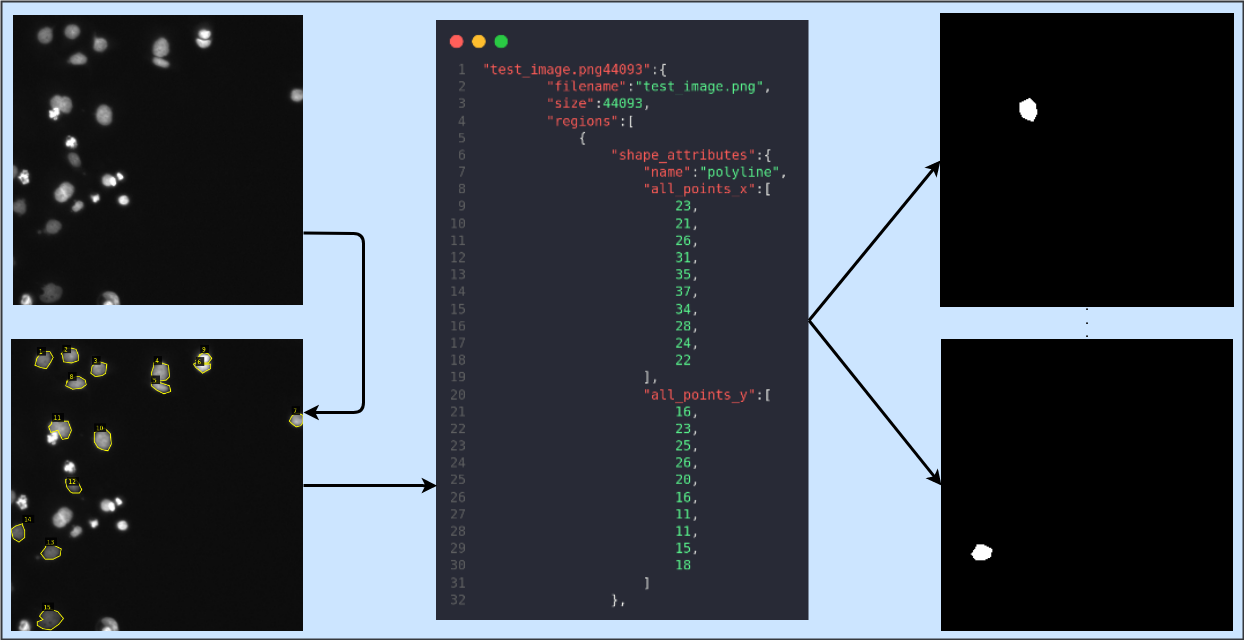

Here’s a simple visualization of what we’ll be doing in this article.

VGG Image Annotator (VIA)

VIA is an extremely lightweight annotator with support for both images and videos. You can visit the project’s home page to learn more. VIA comes in two versions, V2 and V3. The main differences are:

- V2 is much older but adequate for basic tasks and has a simple interface

- Unlike V2, V3 supports video and audio annotation

- V2 is preferable if your goal is image segmentation, and it offers multiple export options such as JSON and CSV

- V2 projects are not compatible with V3 projects

I’ll be using V2 for this article. You can download the required files [here](https://www.robots.ox.ac.uk/~vgg/software/via/via_demo.html). Or, if you want to try out VIA online, you can do so here.

How-To

I’ll be using the Kaggle Nuclei Dataset and annotating one of its test images to generate segmentation masks. Full disclosure: I am NOT a certified medical professional, and the annotations I’m making are just for this article’s sake. You can easily adapt the process to other types of objects as well.

The root folder tree is given below. via.html is the file we will use to annotate our images; it’s included in the VIA V2 ZIP archive linked above. Place all the images you want to annotate in the images folder. maskGen.py is a script that converts the annotations into masks.

```
├── images
│   └── test_image.png
├── maskGen.py
└── via.html
```

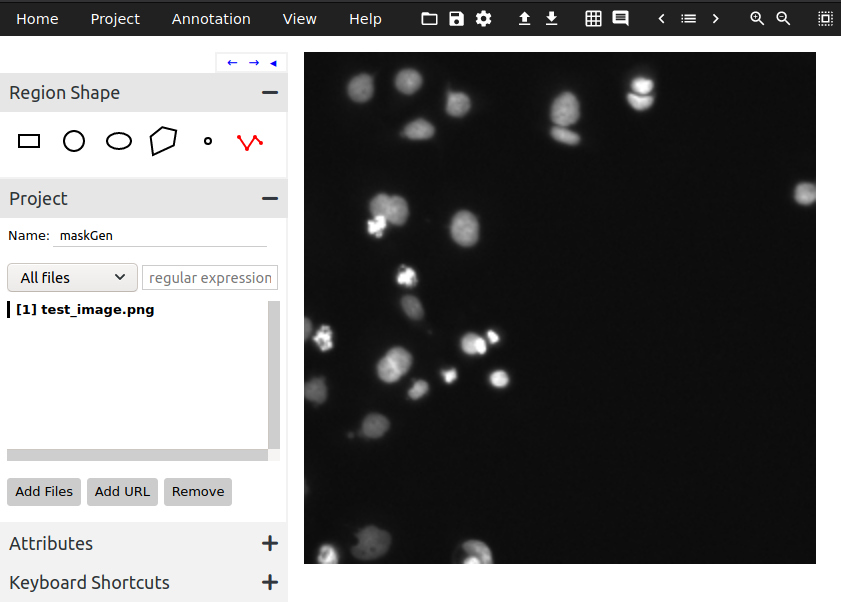

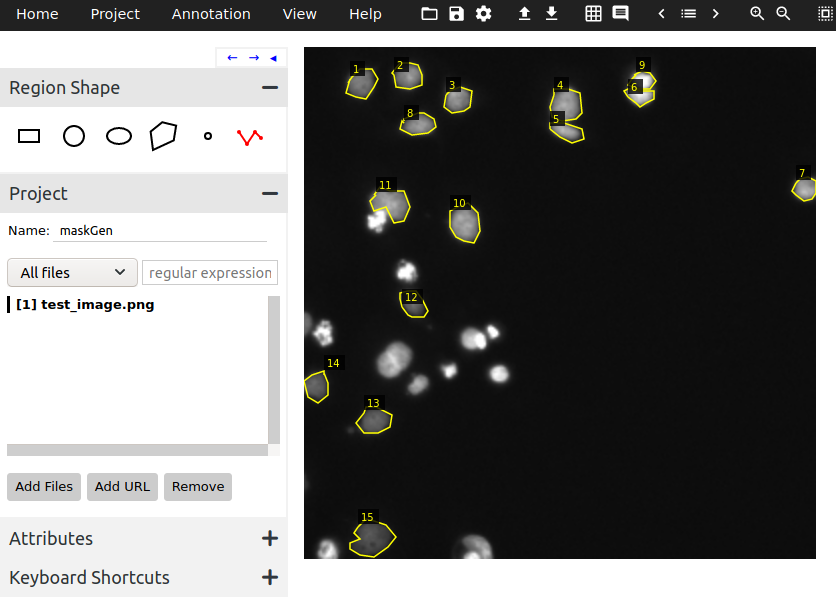

- Open via.html: It will open in your default browser. Under Region Shape, select the Polyline tool (the last option) and give your project a name. Then click Add Files and select all the images you want to annotate. At this point, your screen should look like Figure 1.

- Start Annotating: Click points along the border of an object to draw a polygon around it. Press Enter to finish the polygon, or press Backspace if you’ve made an error. Repeat this for all objects. When you’re done, your screen should look like Figure 2.

- Export Annotations: When you’re done, click the Annotation menu at the top and select Export Annotations (as JSON). A JSON file will be saved to your device. Locate this file and move it into the root folder, next to via.html and maskGen.py.

- Generating Masks: Your root folder should now look something like this:

├── images

│ └── test_image.png

├── maskGen_json.json

├── maskGen.py

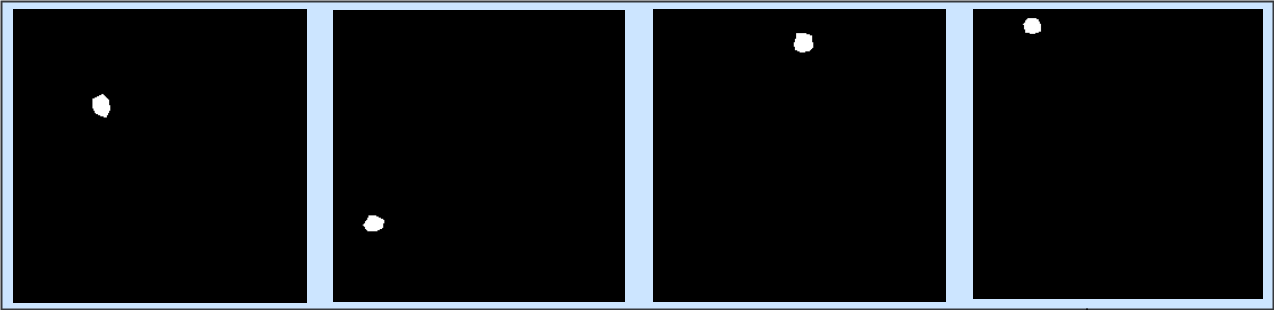

└── via.htmlmaskGen.pyis given in the gist below. It reads the JSON file, remembers the polygon coordinates for each mask object, generates masks, and saves them in .png format. For each image in the images folder, the script creates a new folder with the name of the image, and this folder contains sub-folders of both the original image and generated mask files. Make sure to update the _jsonpath variable to your JSON file’s name and set the mask height and width. A few of the generated masks are shown after the gist.
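The gist itself isn't reproduced in this text. As a rough illustration of its two core steps, here is a minimal, dependency-free sketch, assuming the VIA 2 JSON export layout: a dict keyed by filename plus file size, where each entry has a `filename` and a `regions` list whose `shape_attributes` hold `all_points_x` and `all_points_y`. `load_via_polygons` and `polygon_to_mask` are hypothetical names, not the ones used in maskGen.py, and the even-odd scanline fill is a stand-in for a library call such as Pillow's `ImageDraw.polygon` or OpenCV's `cv2.fillPoly`.

```python
import json
from typing import Dict, List, Tuple

Polygon = List[Tuple[float, float]]


def load_via_polygons(json_text: str) -> Dict[str, List[Polygon]]:
    """Map each image filename to the polygons annotated on it.

    Assumes the VIA 2 export format: {key: {"filename": ..., "regions": [...]}}.
    """
    data = json.loads(json_text)
    polygons: Dict[str, List[Polygon]] = {}
    for entry in data.values():
        regions = entry.get("regions", [])
        # Older VIA 1.x exports stored regions as a dict keyed by index; accept both.
        if isinstance(regions, dict):
            regions = list(regions.values())
        shapes = []
        for region in regions:
            attrs = region["shape_attributes"]
            if attrs.get("name") not in ("polygon", "polyline"):
                continue  # skip rectangles, circles, points, etc.
            shapes.append(list(zip(attrs["all_points_x"], attrs["all_points_y"])))
        polygons[entry["filename"]] = shapes
    return polygons


def polygon_to_mask(polygon: Polygon, height: int, width: int) -> List[List[int]]:
    """Rasterize one polygon into a height x width mask (255 inside, 0 outside).

    Uses the even-odd rule, testing each pixel centre (x + 0.5, y + 0.5).
    The last vertex is joined back to the first, so polylines are closed.
    """
    mask = [[0] * width for _ in range(height)]
    n = len(polygon)
    for y in range(height):
        cy = y + 0.5
        # x-coordinates where the horizontal line at cy crosses a polygon edge
        crossings = []
        for i in range(n):
            x1, y1 = polygon[i]
            x2, y2 = polygon[(i + 1) % n]
            if (y1 <= cy) != (y2 <= cy):  # edge straddles the scanline
                crossings.append(x1 + (cy - y1) * (x2 - x1) / (y2 - y1))
        crossings.sort()
        # Fill pixels whose centre lies between each pair of crossings.
        for left, right in zip(crossings[0::2], crossings[1::2]):
            for x in range(width):
                if left <= x + 0.5 < right:
                    mask[y][x] = 255
    return mask
```

Calling `polygon_to_mask` once per polygon, with the height and width of the source image, yields one mask per annotated object, which is what the one-file-per-object masks folder reflects.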

If you’ve done everything right, your final root folder tree should look something like this. The number of files in each masks folder equals the number of objects you annotated in the ground truth image.

```
├── images
│   └── test_image
│       ├── images
│       │   └── test_image.png
│       └── masks
│           ├── test_image_10.png
│           ├── test_image_11.png
│           ├── test_image_12.png
│           ├── test_image_13.png
│           ├── test_image_14.png
│           ├── test_image_15.png
│           ├── test_image_1.png
│           ├── test_image_2.png
│           ├── test_image_3.png
│           ├── test_image_4.png
│           ├── test_image_5.png
│           ├── test_image_6.png
│           ├── test_image_7.png
│           ├── test_image_8.png
│           └── test_image_9.png
├── maskGen_json.json
├── maskGen.py
└── via.html
```
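To reproduce a layout like the one above without extra dependencies, masks can be written as 8-bit grayscale PNGs using only the standard library. This is a hedged sketch: `write_gray_png` and `save_image_and_masks` are hypothetical helpers, the `<image>_<n>.png` naming simply mirrors the tree above, and in practice Pillow's `Image.save` or OpenCV's `cv2.imwrite` would handle the PNG encoding for you.

```python
import shutil
import struct
import zlib
from pathlib import Path
from typing import List


def write_gray_png(path: Path, mask: List[List[int]]) -> None:
    """Write a 2-D list of 0-255 values as an 8-bit grayscale PNG."""
    height, width = len(mask), len(mask[0])

    def chunk(tag: bytes, data: bytes) -> bytes:
        # Each PNG chunk is: length, type, data, CRC over type + data.
        return (struct.pack(">I", len(data)) + tag + data
                + struct.pack(">I", zlib.crc32(tag + data) & 0xFFFFFFFF))

    # IHDR: width, height, bit depth 8, colour type 0 (grayscale),
    # compression 0, filter method 0, no interlacing.
    ihdr = struct.pack(">IIBBBBB", width, height, 8, 0, 0, 0, 0)
    # Every scanline is prefixed with filter type 0 (None).
    raw = b"".join(b"\x00" + bytes(row) for row in mask)
    png = (b"\x89PNG\r\n\x1a\n" + chunk(b"IHDR", ihdr)
           + chunk(b"IDAT", zlib.compress(raw)) + chunk(b"IEND", b""))
    path.write_bytes(png)


def save_image_and_masks(image_path: Path, masks: List[List[List[int]]],
                         out_root: Path) -> Path:
    """Create out_root/<stem>/images and out_root/<stem>/masks, as in the tree above."""
    stem = image_path.stem
    image_dir = out_root / stem / "images"
    mask_dir = out_root / stem / "masks"
    image_dir.mkdir(parents=True, exist_ok=True)
    mask_dir.mkdir(parents=True, exist_ok=True)
    # Copy (not move) the source image into its new images sub-folder.
    shutil.copy2(image_path, image_dir / image_path.name)
    # One PNG per annotated object, numbered from 1.
    for i, mask in enumerate(masks, start=1):
        write_gray_png(mask_dir / f"{stem}_{i}.png", mask)
    return mask_dir
```

This sketch leaves the original image in place, whereas the tree above suggests the original script moves it; swap `shutil.copy2` for `shutil.move` if you want that behavior. Numbering from 1 matches the test_image_1.png to test_image_15.png names shown.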

Conclusion

I hope this article helps you in your projects. Please reach out with any suggestions or questions.

You can reach me on: Email, LinkedIn, GitHub
