Demo

Here is a demo of the system.

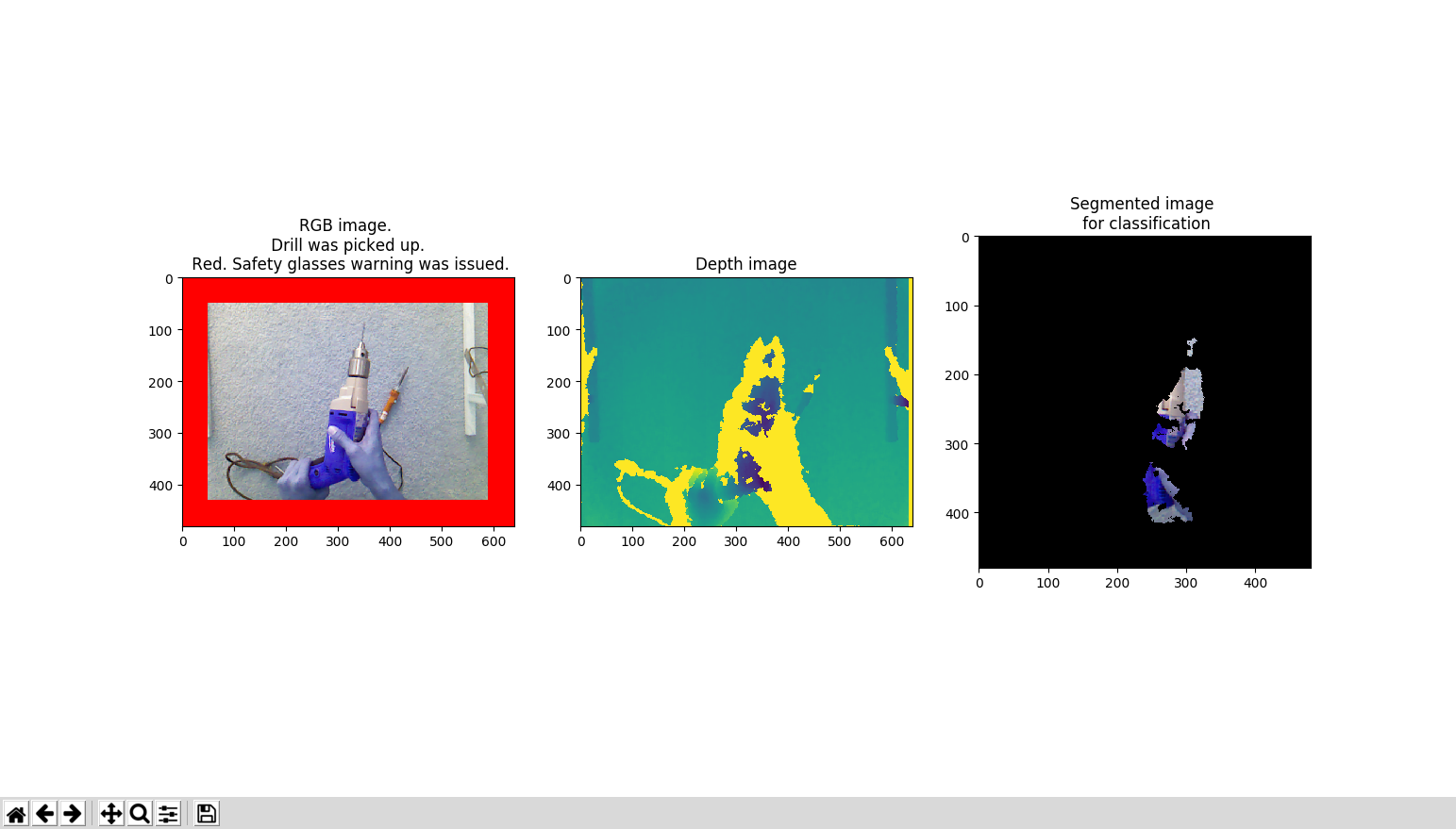

When the system detects that a drill has been picked up, it automatically issues a safety glasses warning. In the demo video, a red border around the RGB image indicates that the warning is active.

When the system detects that no drill has been picked up, it issues no warning. In the demo video, a green border around the RGB image indicates that no warning is active.

As shown in the demo video, the computer vision system successfully detects whether the operator picks up a drill.
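
The red/green indicator described above can be sketched in a few lines of NumPy. This is only an illustration, not the code behind the demo video: the function name `draw_status_border`, the border thickness, and the exact RGB values are assumptions made for the example.

```python
import numpy as np

# Assumed RGB values for the two states (hypothetical, chosen for illustration).
RED = (255, 0, 0)
GREEN = (0, 255, 0)


def draw_status_border(rgb_image, warning_active, thickness=10):
    """Return a copy of an H x W x 3 RGB frame with a colored border.

    Red means the safety glasses warning is active, green means it is not.
    """
    if thickness < 1:
        raise ValueError("thickness must be at least 1 pixel")
    color = RED if warning_active else GREEN
    framed = rgb_image.copy()
    framed[:thickness, :] = color    # top edge
    framed[-thickness:, :] = color   # bottom edge
    framed[:, :thickness] = color    # left edge
    framed[:, -thickness:] = color   # right edge
    return framed


# Example: a black 640x480 frame in both states.
frame = np.zeros((480, 640, 3), dtype=np.uint8)
warned = draw_status_border(frame, warning_active=True)
clear = draw_status_border(frame, warning_active=False)
```

Working on a copy leaves the original frame untouched, so the same image can still be passed on to later processing steps.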

Hardware

I use wood (from Home Depot) to form a support structure. I then mount a Microsoft XBOX 360 Kinect Sensor (from Amazon) on the support structure to monitor the activity on the ground.

Segmentation

An example consisting of an RGB image, a depth image and an image of the extracted object is shown below.

It is challenging for a computer vision algorithm to determine whether the hand of the operator is holding a drill from the RGB image alone. However, with the depth information, the problem is easier.

My segmentation algorithm sets the color of a pixel on the RGB image to black if its corresponding depth is outside a predefined range. This enables me to segment the object that is picked up.
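
The depth-based masking described above can be sketched as follows. This is a minimal version that assumes the depth image is already aligned pixel-for-pixel with the RGB image; the function name `segment_by_depth`, the millimeter units, and the `near`/`far` values are hypothetical and would need to be tuned to the actual Kinect mounting height.

```python
import numpy as np


def segment_by_depth(rgb_image, depth_image, near, far):
    """Black out every RGB pixel whose depth lies outside [near, far].

    rgb_image:   H x W x 3 array
    depth_image: H x W array, assumed aligned with rgb_image
    """
    in_range = (depth_image >= near) & (depth_image <= far)
    segmented = rgb_image.copy()
    segmented[~in_range] = 0  # pixels outside the range become black
    return segmented


# Tiny 2x3 example with made-up depth readings (assumed to be millimeters).
rgb = np.full((2, 3, 3), 200, dtype=np.uint8)
depth_mm = np.array([[500, 900, 1500],
                     [0, 1000, 2500]])
result = segment_by_depth(rgb, depth_mm, near=800, far=1200)
```

Only the two pixels whose depth falls between 800 and 1200 keep their color; everything nearer or farther is set to black, which is what isolates the object being picked up from the floor and the background.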

Classification

I collect data by recording separate videos of myself holding a drill and waving my hands.

I first use transfer learning to fine-tune a VGG neural network that is pre-trained on ImageNet, but the results are poor. Perhaps the extracted images are too different from the natural images in ImageNet.

Therefore, I train a convolutional neural network from scratch on the extracted images. This works much better: the classifier reaches ~95% accuracy on the validation set.
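
To give a sense of how small this network is, the sketch below works through the feature-map sizes and parameter counts for the model listed in this section: three 3x3 convolutions with 4, 8 and 16 filters, each followed by 2x2 max pooling, on 120x120 RGB inputs. It assumes the Keras defaults that the listing relies on (no padding, stride 1 for convolutions, pooling stride equal to the pool size); the helper names are made up for this example.

```python
def conv_valid(size, kernel=3):
    """Output width of an unpadded ('valid') convolution with stride 1."""
    return size - kernel + 1


def pool(size, window=2):
    """Output width of non-overlapping max pooling (rounds down)."""
    return size // window


def conv_params(kernel, in_channels, out_channels):
    """Weights plus one bias per output channel."""
    return kernel * kernel * in_channels * out_channels + out_channels


size, channels = 120, 3
total_params = 0
for filters in (4, 8, 16):
    total_params += conv_params(3, channels, filters)
    size = pool(conv_valid(size))
    channels = filters

flat_features = size * size * channels
total_params += flat_features * 16 + 16  # Dense(16)
total_params += 16 * 1 + 1               # Dense(1) with sigmoid output

print(size, flat_features, total_params)  # prints: 13 2704 44873
```

The spatial size shrinks from 120 to 59, 28 and finally 13, so the flattened vector has 2,704 features and the whole model has roughly 45,000 parameters, most of them in the first dense layer.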

A snippet of the model is shown below.

from keras.preprocessing.image import ImageDataGenerator
from keras.models import Sequential
from keras.layers import Conv2D, MaxPooling2D
from keras.layers import Activation, Dropout, Flatten, Dense
from keras import backend as K

# dimensions of our images.
img_width, img_height = 120, 120

train_data_dir = '/home/kakitone/Desktop/kinect/data/train4/'
validation_data_dir = '/home/kakitone/Desktop/kinect/data/test4/'
nb_train_samples = 2000
nb_validation_samples = 1000
epochs = 5
batch_size = 8

if K.image_data_format() == 'channels_first':
    input_shape = (3, img_width, img_height)
else:
    input_shape = (img_width, img_height, 3)

model = Sequential()
model.add(Conv2D(4, (3, 3), input_shape=input_shape))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(8, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Conv2D(16, (3, 3)))
model.add(Activation('relu'))
model.add(MaxPooling2D(pool_size=(2, 2)))

model.add(Flatten())
model.add(Dense(16))
model.add(Activation('relu'))
model.add(Dense(1))
model.add(Activation('sigmoid'))

model.compile(loss='binary_crossentropy',
              optimizer='rmsprop',
              metrics=['accuracy'])

# this is the augmentation configuration we will use for training
train_datagen = ImageDataGenerator(
    rescale=1. / 255,
    shear_range=0.2,
    zoom_range=0.2,
    horizontal_flip=True)

# this is the augmentation configuration we will use for testing:
# only rescaling
test_datagen = ImageDataGenerator(rescale=1. / 255)

train_generator = train_datagen.flow_from_directory(
    train_data_dir,
    target_size=(img_width, img_height),
    batch_size=batch_size,
    class_mode='binary')

validation_generator = test_datagen.flow_from_directory(
    validation_data_dir,
    target_size=(img_width, img_height),
    batch_size=batch_size,
    class_mode='binary')

model.fit_generator(
    train_generator,
    steps_per_epoch=nb_train_samples // batch_size,
    epochs=epochs,
    validation_data=validation_generator,
    validation_steps=nb_validation_samples // batch_size)

Final words

Every day, about 2,000 U.S. workers sustain job-related eye injuries that require medical treatment.

Nearly 60% of injured workers were not wearing eye protection at the time of the accident, or were wearing the wrong kind of eye protection for the job.

Safety should always come first. My heart sinks whenever I hear of accidents involving power tools. I hope this article raises awareness that Artificial Intelligence can offer us an extra level of protection.

Have fun making things and be safe!