The Naïve Bayes Classifier

Joseph Catanzarite

The Naïve Bayes Classifier is perhaps the simplest machine learning classifier to build, train, and predict with. This post will show how and why it works. Part 1 reveals that the much-celebrated Bayes’ Rule is just a simple statement about joint and conditional probabilities. But its blandness belies astonishing power, as we’ll see in Parts 2 and 3, where we assemble the machinery of the Naïve Bayes Classifier. Part 4 is a brief discussion, and Parts 5 and 6 list the advantages and disadvantages of the Naïve Bayes Classifier. Part 7 is a summary, and Part 8 lists a few references that I’ve found useful. Constructive comments, criticism, and suggestions are welcome!

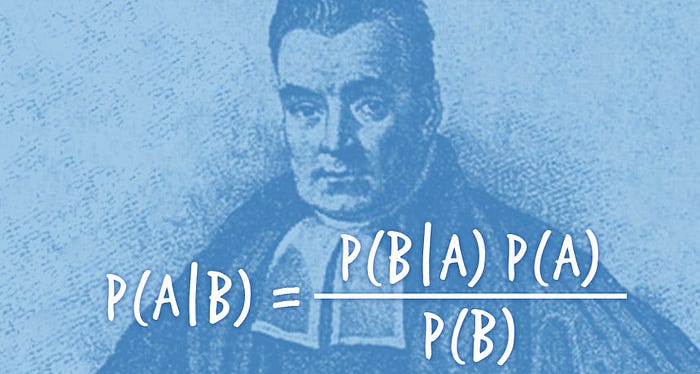

1. Prelude: Bayes’ Rule

Given two events A and B, the joint probability P(A,B) is the probability of A and B occurring together. It can be written in either of two ways:

The first way:

P(A,B) = P(A|B) * P(B)

Here P(A|B) is a conditional probability: the probability that A occurs, given that B has occurred. This says that the probability of A and B occurring together is (the probability that A occurs given that B has occurred) times (the probability that B has occurred).
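To make the first way concrete, here is a minimal Python sketch that checks P(A,B) = P(A|B) * P(B) by counting the outcomes of one roll of a fair six-sided die. The die, the events A ("the roll is even") and B ("the roll is greater than 3"), and the helper names `prob` and `cond_prob` are illustrative assumptions and do not come from the original post. Note that P(A|B) is computed directly, by restricting attention to the outcomes where B occurred, so the check is not circular.

```python
from fractions import Fraction

# Sample space: the six equally likely faces of a fair die (illustrative assumption).
outcomes = [1, 2, 3, 4, 5, 6]

def prob(event):
    """P(event): fraction of equally likely outcomes where the predicate holds."""
    return Fraction(sum(1 for o in outcomes if event(o)), len(outcomes))

def cond_prob(event, given):
    """P(event | given): restrict the sample space to outcomes where `given` holds."""
    restricted = [o for o in outcomes if given(o)]
    return Fraction(sum(1 for o in restricted if event(o)), len(restricted))

# Hypothetical events chosen for illustration.
A = lambda o: o % 2 == 0   # A: the roll is even -> {2, 4, 6}
B = lambda o: o > 3        # B: the roll is greater than 3 -> {4, 5, 6}

p_joint = prob(lambda o: A(o) and B(o))   # P(A,B): A and B occur together -> {4, 6}
p_B = prob(B)                             # P(B)
p_A_given_B = cond_prob(A, B)             # P(A|B)

# The first way: P(A,B) = P(A|B) * P(B)
print(p_joint, p_A_given_B * p_B)  # prints "1/3 1/3"
```

Here P(A,B) = 1/3, P(B) = 1/2, and P(A|B) = 2/3, so the product (2/3) * (1/2) = 1/3 matches the joint probability exactly. Using `Fraction` rather than floats keeps the comparison free of rounding error.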

The second way: