Member-only story

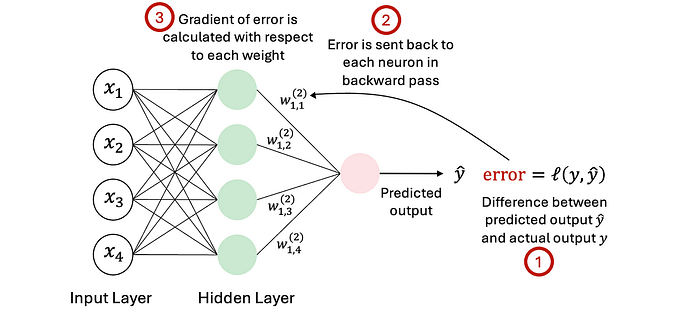

Stochastic-, Batch-, and Mini-Batch Gradient Descent

Why do we need Stochastic, Batch, and Mini Batch Gradient Descent when implementing Deep Neural Networks?

This is a detailed guide that should answer the questions of why and when we need Stochastic-, Batch-, and Mini-Batch Gradient Descent when implementing Deep Neural Networks.

In Short: We need these different ways of implementing gradient descent to address several issues we will most certainly encounter when training Neural Networks which are local minima and saddle points of the loss function and noisy gradients.

More on that will be explained in the following article — nice ;)

Table of Content

- 1. Introduction: Let’s recap Gradient Descent

- 2. Common Problems when Training Neural Networks (local minima, saddle points, noisy gradients)

- 3. Batch-Gradient Descent

- 4. Stochastic Gradient Descent

- 5. Mini-Batch Gradient Descent

- 6. Take-Home-Message