Thoughts and Theory

Presentation of the state of the art in speech synthesis research (also known as text-to-speech) at the end of May 2021 with a focus on deep learning technologies. I present a synthesis of 71 publications and give you the keys to understanding the underlying concepts.

Introduction

Voice is the most natural way we have to communicate. It is therefore natural that the evolution of conversational assistants is moving towards this means of communication. These virtual voice assistants can be deployed to help call centers by pre-qualifying a caller’s request, taking into account the simplest requests (e.g. making an appointment). For the visually impaired, provide a description of the texts on the screen or describe the scene in front of them. An operator can intervene on a machine to repair it by being helped by voice and he can interact with it with his hands-free, i.e. without having to handle a keyboard or a mouse. For several years, GPS navigation has been piloting you by voice.

In this paper, I will discuss the state of the art of speech synthesis research to date. I will present the techniques that are used to automatically generate signals from a sentence. After a short presentation of the subject, I will present the problem related to automatic synthesis, then I will present the processing pipelines, I will quickly explain what is a mel-spectrogram, then the deep generative models, the end-to-end systems, the actors of the research today, and I will continue by the available datasets allowing to realize the learning. I will explain how quality is measured and which conferences are presenting the work. I will finish with the remaining challenges.

TL;DR

Conversational voice assistants have reached a state of the art that puts them almost at the same level as humans in terms of speech recognition and "simple" (monotone) speech generation. Speech generation is a complex process that consists in generating several thousands of values representing the sound signal from a simple sentence. Neural networks have replaced traditional concatenative generation technologies by providing better signal quality, easier preparation of training data, and a generation time that has been reduced to the point of being able to generate a sentence several hundred times faster than a human would.

The generation of the signal is generally done in 2 main steps: a first step of generating a frequency representation of the sentence (the mel spectrogram) and a second step of generating the waveform from this representation. In the first step, the text is transformed into characters or phonemes. These are vectorized then an encoder-decoder type architecture will transform these input elements into a condensed latent representation (encoder) and transform this data inversely into a frequency representation via the decoder. The most commonly used technologies in this step are convolutional networks associated with attention mechanisms in order to improve the alignment between the input and the output. This alignment is often reinforced by duration, level, and tone prediction mechanisms. In the second stage, processed by a so-called vocoder, the 3-dimensional temporal frequency representation (time, frequency, and power) is transformed into a sound signal. Among the most efficient architectures are the GAN (Generative Adversarial Network) architectures where a generator will generate signals that will be challenged by a discriminator.

When these architectures are evaluated by a human, the quality level almost reaches the quality of the training data. Since it is difficult to do better than the input data, the research is now directed toward the contribution of elements that will make the generated signal even closer to reality with the addition of prosody, rhythm, and personality elements, and to be able to parameterize the generation more finely.

Virtual Voice Assistants

Three years ago now (in May 2018), Google CEO Sundar Pichai presented at the keynote of Google I/O, the phone recording of a virtual assistant (Google Duplex) conversing with an employee of a hair salon. This assistant was in charge of making an appointment for a third party. The most exceptional element at the time was the near-perfection of the call, the perfect mimicry of a real person making an appointment, with the addition of "Mm-hmm" during the conversation. The flow of the conversation was so perfect that I still wonder if it wasn’t a trick. This presentation presaged a coming revolution in the field of human relationship automation by voice.

Dream or reality, this functionality of delegating the reservation of a restaurant or a hairdresser to a virtual assistant is, 3 years later, only available in the United States. The service is nevertheless operational in other countries, but only to improve the reliability of Google Search engine and Maps schedules by automatically calling the merchant for verification. This innovative company shows us here its ability to build tools that can accompany humans in certain activities with an excellent level of quality. At least of sufficient quality for them to decide to provide this service.

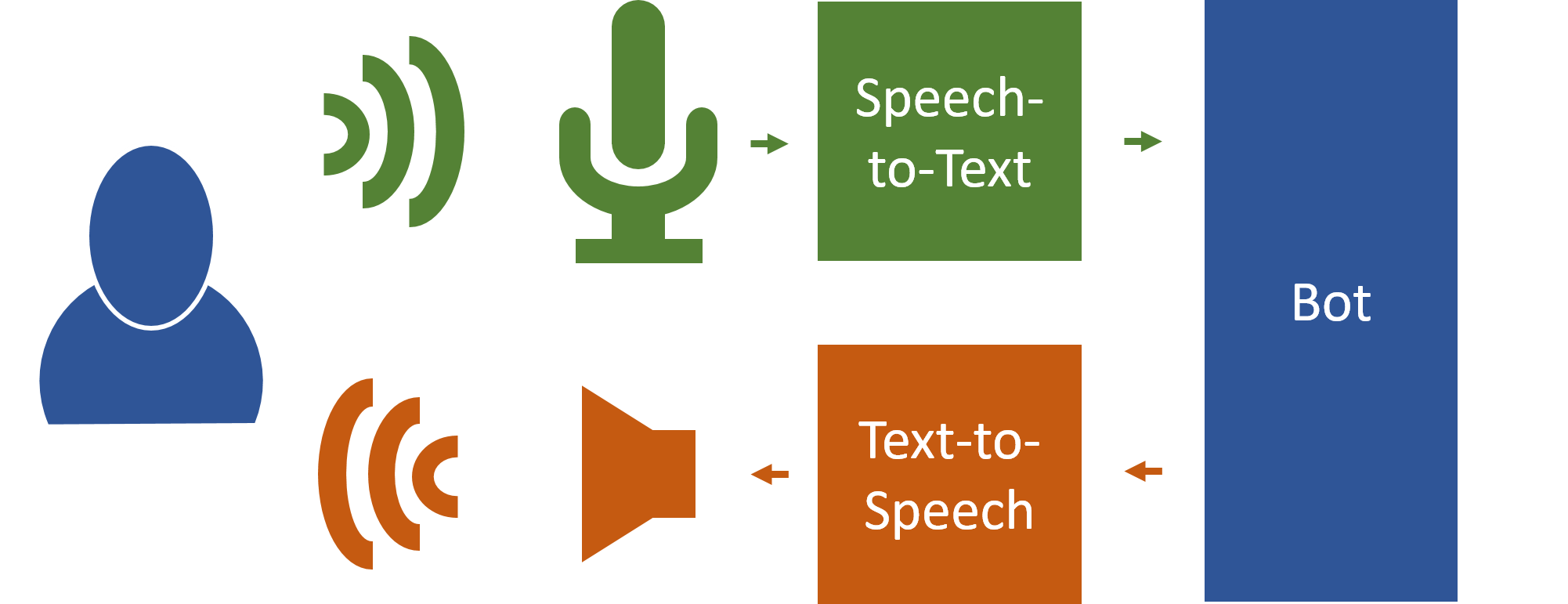

To implement a conversational voice assistant, it is necessary to have a processing chain where a first component transforms the user’s voice into text (Speech-to-Text). A second component (the Bot) analyzes the caller’s text and generates a response. A third and last component transforms the Bot answer into voice (Text-to-Speech). The result is played back to the user on a loudspeaker or through a telephone line.

Speech synthesis, also called Text-To-Speech or TTS, was for a long time realized by combining a series of transformations more or less dictated by a set of programming rules and a more or less satisfactory result at the output. In recent years, the contribution of deep learning has allowed the emergence of much more autonomous systems that are now capable of generating thousands of different voices with a quality close to a human. Systems today have become so efficient that they can clone a human voice from a few seconds of voice sample.

The One-to-many Problem

To generate an audio signal, the synthesis system will follow a set of more or less complex steps. One of the main problems that Voice Synthesis must address is that of one-to-many modeling, which consists of the ability to convert a piece of information (the sentence to be vocalized) into data (the waveform) that takes several thousand values. Moreover, this signal can have many different characteristics: volume, accentuation of a particular word, speed of diction, management of the end of the sentence, the addition of feelings, pitch… The problem for the system architect is thus to solve this complex processing by dividing this generation into steps that can be trained globally or individually.

Processing Pipelines

The first voice generation systems used air directly to produce sounds, then computer science brought systems that could use generation rules by parameters, to quickly adopt the generation of sentences by concatenation of diphones from a more or less consequent database of sounds (there are more than 1,700 diphones in English and 1,200 in French, to be duplicated by speaker, by sentence start/end, by prosody…)

Traditional speech synthesis systems are often classified into two categories: concatenative systems and generative parametric systems. The assembly of diphones falls into the category of concatenative speech synthesis. There are two different schemes for concatenative synthesis: one is based on Linear Prediction Coefficients (LPC), the other on Pitch Synchronous OverLap Add (PSOLA). The result is often flat, monotonous, and robotic, i.e. lacking a real prosody although it is possible to modulate the result. By prosody, we mean suprasegmental features such as intonation, melody, pauses, rhythm, flow, accent… This method has been improved with the creation of generative acoustic models based on Hidden Markov Models (HMM), and the implementation of contextual decision trees. Deep generative systems have become the standard now, dethroning the old systems that have become obsolete.

Following the one-to-many principle, the system to build consists of transforming the text into an intermediate state, then transforming this intermediate state into an audio signal. Most statistical parametric speech synthesis systems (SPSS) do not generate the signal directly but rather its frequency representation. A second component called a vocoder then finalizes the generation based on this representation. The principles of generative networks have become the norm in recent years with convolutional networks, then recurrent, VAE (2013), attention mechanisms (2014), GAN (2014), and other networks.

The diagram below describes the different components of a machine learning-based pipeline architecture used to generate speech.

Like any learning-based system, the generation is mainly composed of 2 phases: a learning phase and a generation phase (or inference phase). Sometimes, an "in-between" phase is inserted to perform a fine-tuning of the acoustic model with other data.

The appearance of the pipeline changes depending on the phase:

- During the learning phase, the pipeline allows the generation of models. The sentences are the inputs of the encoder/decoder and the voice files correlated with the sentences. To this is sometimes added the speaker’s ID. In many systems, a mel spectrogram is generated and a vocoder transforms this representation into a waveform. The inputs to the vocoder are the acoustic parameters (usually the mel spectrogram) and the voice associated with the parameters. The set of information extracted from the two synthesis analysis modules is known as the "linguistic features" (Acoustic Feature).

- During the generation phase, the pipeline is responsible for performing the inference (or synthesis or generation). The input is the sentence to be transformed, sometimes the speaker ID for the selection of the speech features that will match the generated voice. The output is a mel spectrogram. The vocoder’s role is to generate a final waveform from the compact representation of the audio to be generated.

In detail, the pipeline used for learning (Training) includes:

- A Text Analysis module that performs text normalization operations, transforms numbers into text, splits the sentence into parts (part-of-speech), transforms graphemes (written syllables) into phonemes (G2P), adds prosody elements, etc. Some systems process the characters of the text directly, others use only the phonemes. This module is often used "as is" during training and synthesis.

- An Acoustic Analysis module receives as input the acoustic characteristics associated with the text. This module can also receive the speaker ID during multi-speaker training. This module will analyze the differences between the theoretical features and the data generated during the training phase. Acoustic features can be generated from voice samples using "classical" signal processing algorithms such as Fast Fourier Transform. This module can also generate models to predict the duration of the signal (link between the phoneme and the number of samples of the mel spectrogram) and its alignment with the text. The latest systems tend to improve the prediction networks and add pitch prediction. At the end of 2020, Éva Székely from the EECS school in Stockholm adds breath processing in the learning stages, which reduces the distance between the human and the machine.

- The acoustic models from the training phase represent the latent states extracted from the sentence embedding vector, the speaker vector, and the acoustic features. In addition, there are prediction models for alignments and other features.

- A Speech Analysis module is used to extract the various parameters from the original voice files (Ground-Truth). In some systems, especially end-to-end systems, the silences in front and behind are removed. Extraction, which varies from system to system, can consist of extracting pitch, energy, stress, phoneme duration, fundamental frequency (1st harmonic frequency or F0), etc. from the input speech signals. These input voice files can be single or multi-speaker. In the case of multi-speaker systems, the speaker vector is added to the inputs.

The pipeline used for synthesis (Inference) includes:

- Based on the output of the text analysis module and through the acoustic model(s), the Feature Prediction module generates the compact speech representations necessary for the finalization of the generation. These outputs can be one or more of the following representations: the signal’s Mel spectrogram (MelS), the Bark scale cepstral coefficients (Cep), the linear scale logarithmic magnitude spectrograms (MagS), the fundamental frequency (F0), the spectral envelope, the aperiodicity parameters, the duration of the phonemes, the pitch height…

- The input of the vocoder can be one or more of the above representations. Many versions of this module exist and it tends to be used as a stand-alone unit at the expense of end-to-end systems. Among the most popular vocoders are Griffin-Lim, WORLD, WaveNet, SampleRNN, GAN-TTS, MelGAN, WaveGlow, and HiFi-GAN which provide a signal close to that of a human (see how to measure quality).

Early neural network-based architectures relied on the use of traditional parametric TTS pipelines such as; DeepVoice 1 and DeepVoice 2. DeepVoice 3, Tacotron, Tacotron 2, Char2wav, and ParaNet use attention-based seq2seq architectures (Vaswani et al., 2017). Speech synthesis systems based on Deep Neuronal Networks (DNNs) are now outperforming the so-called classical speech synthesis systems such as concatenative unit selection synthesis and HMMs that are (almost) no longer seen in studies.

The diagram below presents the different architectures, classified by year, of publication of the research paper. It also shows the links when a system uses features of a previous system.

An introduction to the mel spectrogram

The input of vocoders is generally constituted by what is called the mel spectrogram which is a particular representation of the vocal signal. This spectrogram is realized by applying several transformations to an audio signal (time/amplitude).

The first transformation consists in extracting the spectrum of a signal using a Short-Term Fast Fourier Transform (STFFT). The STFFT will decompose the audio signal by capturing the different frequencies that compose it as well as the amplitude of each frequency. Because of the variability of the signal over time, the signal is split into windowed segments (usually between 20ms and 50ms) that overlap in part.

The horizontal axis corresponds to the time scale, the vertical axis corresponds to the frequency, and the color of the pixel corresponds to the power of the signal in decibel (dB). The lighter the color, the more powerful the frequency. The frequency scale is then converted to a logarithmic scale.

Since the human ear does not perceive differences in frequencies in the same way whether they are low or high, Stevens, Volkmann, and Newmann proposed in 1937 a scale called the mel scale which gives a unit of pitch such that equal distances of pitch sound at equal distances from the listener. The frequencies of the spectrogram are thus transformed by this scale to become the mel spectrogram.

Deep Generative Models

The challenge of the voice generation system is to produce a large amount of data from a small amount of information, and even from no information. A sentence of 30 words, pronounced in 10 seconds at 22KHz, requires the generation of a sequence of 440.000 bytes (16 bits), that is to say, a ratio of 1 to 14.666.

The field of automatic generative modeling is a vast area that has virtually unlimited uses. Immediate applications are as diverse as image generation, and text generation popularized by GPT-2 and lately GPT-3. In our case, we expect them to realize the generation of voice. The so-called "classical" networks (CNN for Convolutional Neural Network) of the beginning have been replaced by more complex recurrent networks (RNN for Recurrent Neural Network) because they introduced the notion of the previous context which is important in the context of speech continuity. Today, this generation is mostly realized with deep model architectures such as DCCN (Dilated Causal Convolution Network), Teacher-Student, VAE (Variational Auto-Encoders), and GAN (Generative Adversarial Networks), exact likelihood models such as PixelRNN/CNN, Image Transformers, Generative Flow, etc.

The most common architectures are :

- The Autoregressive Model is a regression-based model for time series in which the series is explained by its past values rather than by other variables. In the case of speech generation, most early neural network-based models were autoregressive, which implied that future speech samples were conditioned on past samples in order to maintain long-term dependence. These models are quite easy to define and train but have the disadvantage of propagating and amplifying errors. And the generation time is proportional to the length of the sentence generated. They have above all the disadvantages of being serial and therefore do not benefit from the latest parallelization capabilities of GPU (Graphic Processor Unit) and TPU (Tensor Process Unit) processors. This makes it difficult to create real-time systems where it is necessary to respond to the user within a reasonable time. Introduced with WaveNet and ClariNet, non-autoregressive systems allow for generating voice samples without relying on previous generations, which allows a strong parallelization that is only limited by the memory of the processors. These systems are more complicated to implement and train, and are less accurate (internal dependencies are removed) but can generate all samples in milliseconds.

- Introduced in 2016 by Google and its popular WaveNet, the Dilated Causal Convolution Network (or DCCN) is a dilated causal convolution where the filter is applied to an area larger than its length by skipping input values with a certain step. This dilation allows the network to have very large receptive fields with only a few layers. Subsequently, many architectures have integrated this model into their generation chains.

- Flow architectures consist of a series of invertible transformations (Dinh et al., 2014 – Rezende and Mohammed, 2015). The term "flow" means that simple invertible transformations can be composed with each other to create more complex invertible transformations. The Nonlinear Independent Components Estimation (NICE) model and the Real Non-Volume Preserving (RealNVP) model compose the two common types of invertible transforms. In 2018, NVIDIA uses this technique by integrating the Glow (for Generative-Flow) technique into WaveGlow for generating the voice file from the mel spectrogram representation.

- Teacher-Student models involve two models: a pre-trained autoregressive model (the teacher) that is used to guide the non-autoregressive network (the student) to learn correct attentional alignment. The Teacher will note the outputs of the Student’s parallel feed-forward model. This mechanism is also called Knowledge Distillation. The Student’s learning criterion is related to inverse autoregressive flows, and other flow-based models have been introduced with WaveGlow. A major problem with these parallel synthesis models is their restriction to invertible transforms, which limits the capacity of the model.

- Variational Auto-Encoders (VAE) are an adaptation of auto-encoders. An auto-encoder consists of 2 neural networks that collaborate with each other. A first network is in charge of encoding the input into a continuous latent reduced representation, which can be interpolated (compressed form = z). A second network is responsible for reconstructing the input from the encoding by reducing the losses of the output. To limit the effects of overlearning, the learning is regularized in terms of means and covariance. In the case of voice generation, the encoder transforms the text into a latent state corresponding to the acoustic features and the decoder transforms this state into a sound signal. The encoder/decoder models borrow a lot from image generation since the underlying idea is to generate an image, i.e. the spectrogram. Thus PixelCNN, Glow, and BigGAN have been sources of ideas for TTS networks.

- Generative Adversarial Networks (GANs – Goodfellow et al.) emerged in 2014 to assist in image generation. They are based on the principle of opposing a generator and a discriminator. The generator is trained to generate images from data and the discriminator is trained to determine whether the generated images are real or fake. A team in San Diego (Donahue et al., 2018) has the idea of using this technique for generating audio signals (WaveGAN and SpecGAN). Many vocoders use this technology as a generating principle. GANs are among the best units for generating voice files.

Other systems exist, but are less common, such as the Diffusion Probabilistic Model consisting of modifying a signal via chains of Markov transitions such as adding Gaussian noise, the IAF…

The contribution of attention mechanisms has greatly improved seq2seq networks by removing the need for recurrence but it is still difficult to predict the correct alignment between input and output. Early networks used the content-based attention mechanism but induced alignment errors. In order to correct this problem, several other attention mechanisms were tested: the Gaussian Mixture Model (GMM) Attention mechanism, the Hybrid Location-Sensitive Attention mechanism, Dynamic Convolution Attention (DCA) and the Monotonic Attention (MA) alignment method.

The table below shows the different architectures of the main networks built in recent years.

End-to-end Systems

In February 2017, the University of Montreal presented Char2Wav corresponding to the combination of a bidirectional recurrent neural network, an attentional recurrent neural network, and a neural vocoder from SampleRNN. This end-to-end network allows the learning of waveform samples directly from the text and without going through an intermediate step such as a mel spectrogram. It is directly the output of the networks that are used as inputs to the output networks.

Char2Wav is composed of a player and a neural vocoder. The reader is an encoder-decoder with attention. The encoder accepts text or phonemes as input, while the decoder works from an intermediate representation. As stated by the University:

"Unlike traditional text-to-speech models, Char2Wav learns to produce audio directly from text."

In the same month, Baidu also developed a fully autonomous end-to-end system called DeepVoice. It is trained from a dataset of small audio clips and their transcription. More recently, Microsoft presented in 2020 a network called FastSpeech 2s that avoids the generation of the mel spectrogram but with a lower quality than its sister network generating the spectrum.

This end-to-end network architecture does not predominate in the voice generation landscape. Most architectures separate the grapheme-to-phoneme model, the duration prediction model, and the acoustic feature model for generating the mel-spectrogram, and the vocoder.

The latest publications and the Blizzard Challenge 2020 organized last year by the University of Science and Technology of China (USTC), with the help of the University of Edinburgh, confirm this state of affairs since the last challenge saw the disappearance of classical systems and the domination of SPSS (Statistical Parametric Speech Synthesis) systems based on the 2 generation stages. More than half of the competing teams used neural sequence-to-sequence systems (e.g. Tacotron) with the use of WaveRNN or WaveNet vocoders. The other half worked on approaches based on DNNs and these same vocoders.

Who are the research actors?

Of the 71 papers studied, the distribution of publications by company or university family shows a predominance of Web companies, followed closely by technology companies. Universities are only third.

The country of origin of the papers is first the United States, then China. In third place is South Korea. The country of the publisher corresponds to the location of the company’s headquarters.

Asia is just ahead of North America in terms of the number of publications (32 versus 30).

Google, and its subsidiary DeepMind (UK), is the company that has published the most in recent years (13 publications). We owe them papers on WaveNet, Tacotron, WaveRNN, GAN-TTS, and EATS. Followed by Baidu (7 publications) with papers on DeepVoice and ClariNet and Microsoft with papers on TransformerTTS and FastSpeech.

Where to find Datasets?

The mandatory annotation of each element of the speech sequences is no longer necessary. Today, it is enough to have a corpus containing the sentence and the voice in relation, the only elements necessary to generate the models. It is, therefore, possible to have many more samples than before, in particular via (in alphabetical order):

- Blizzard Speech Databases – Multi-language – Variable Sizes – The Blizzard Challenge competition provides each competitor with several speech samples for training during the challenge. These databases are available for free download at each competition.

- CMU-Arctic – US – 1.08 GB – The databases consist of approximately 1,150 carefully selected phrases from Project Gutenberg texts and are not copyrighted.

- Common Voice 6.1 – Multi-language – Variable sizes – Mozilla has launched an initiative to have a database to make speech recognition open and accessible to everyone. To do this, they have initiated a community project that allows anyone to recite sentences and check the recitations of others. The version 6.1 database for French represents 18 GB or 682 validated hours.

- European Language Resources Association – Multi-language – Variable sizes – Many paid corpora for commercial use.

- LDC Corpora-Databs – Multi-language – Variable Sizes – The Linguistic Data Consortium (LDC) is an open consortium of universities, libraries, corporations, and government research laboratories formed in 1992 to address the severe data shortage then facing research and development in language technologies. The LDC is hosted by the University of Pennsylvania.

- LibriSpeech — US – 57.14 GB – LibriSpeech is a corpus of about 1,000 hours of English speech read at a sampling rate of 16 kHz, prepared by Vassil Panayotov with the help of Daniel Povey. The data is derived from reading audiobooks from the LibriVox project and has been carefully segmented and aligned.

- LibriTTS — US – 78.42 GB – LibriTTS is a multi-speaker English corpus of 585 hours of English reading at a sampling rate of 24 kHz, prepared by Heiga Zen with the help of Google Speech and members of the Google Brain team.

- LibriVox — Multi-language – Variable Sizes – LibriVox is a group of volunteers from around the world who read and record public domain texts to create free audiobooks for download.

- LJ Speech – US – 2.6 GB – Surely one of the best known and most used datasets in model evaluations. It is a public domain speech dataset consisting of 13,100 short audio clips of a single speaker reading passages from 7 non-fiction books. A transcript is provided for each clip. The clips range in length from 1 to 10 seconds and have a total duration of approximately 24 hours. The texts were published between 1884 and 1964, and are in the public domain. The audio was recorded in 2016–2017 by the LibriVox project and is also in the public domain.

- VCTK — US – 10.94 GB – CSTR VCTK (Voice Clone ToolKit) includes speech data spoken by 109 English speakers with various accents. Each speaker reads approximately 400 sentences, which were selected from the Herald newspaper, the Rainbow Passage, and an elicitation paragraph. These sentences are the same for all participants.

Many datasets exist and it would be complicated to name them all: OpenSLR, META-SHARE, audiobooks… In spite of the profusion of speech data, it is nevertheless always difficult to find datasets that allow training the models on prosodic and emotional aspects.

How to measure quality?

In order to define the quality of the generated signal, there are no trivial or computerized tests as can be used to evaluate the performance of a classifier. Quality is evaluated on many factors including naturalness, robustness (the ability of the system not to forget words or duplicate), and accuracy.

Since it is imperative to check the quality to determine the performance of a model, this must be done by humans. Selected people who speak the language to be evaluated are asked to rate the audio quality of a sound signal. A Mean Opinion Score (MOS) is calculated from the scores obtained by polling the judgment of the quality (not standardized and therefore subjective) of the sound reproduction. The score varies from 1 for Poor to 5 for Excellent.

Selected listeners are invited to listen to generated audio files, sometimes in comparison with source files (Ground-Truth). After listening, they give a score and the average of the scores gives the MOS score. Since 2011, researchers can benefit from a well-described way of working, based on crowdsourcing approaches. The best known is the one called CrowdMOS framework using notably the crowdsourcing site Amazon Mechanical Turk (F. Ribeiro et al. – Microsoft – 2011).

Most laboratories provide an evaluation of their algorithms using this principle, which gives a general view of their performance against each other. It should be noted that the evaluation results are highly dependent on the speaker and the acoustic characteristics of the recording. On the other hand, the values are not directly comparable because they are often trained with different datasets. Nevertheless, it allows us to get an idea of the quality of the architecture in relation to each other. All the more so when the researchers carry out comparative tests of their model versus the models of their colleagues.

This graph provides the MOS value for each of the models studied. Only the MOS scores realized in the English language have been retained.

The MUSHRA method (for MUltiple Stimuli with Hidden Reference and Anchor) is also a frequently used listening test. Listeners are asked to compare mixed signals between natural speech and generated signals and they assign a score on a scale from 0 to 100.

In 2019, Binkowski et al. introduce a quantitative automatic test called the Fréchet DeepSpeech Distance (FDSD) which is an adaptation of the Fréchet distance applied to the calculation of the distance between 2 speech files. This test allows having a distance score between the generated signal and the original file. This score is notably used in generative adversarial systems.

Other metrics are also calculated such as RMSE (for Root Mean Square Error), NLL (for Negative Log-Likelihood), CER (for Character Error Rate), WER (for Word Error Rate), UER (for Utterance Error Rate), MCD (for Mel-Cepstral Distortion)…

Voice conferences

Several conferences deal with voice synthesis and we note that the dates of scientific publications often target one of these conferences. In particular, we will mention (in alphabetical order):

- ICASSP – The International Conference on Acoustics, Speech, and Signal Processing (ICASSP) is an annual conference organized by the IEEE. It takes place in June. Topics include acoustics, speech, and signal processing.

- ICLR – Established in 2013, the International Conference on Learning Representations (ICLR) conference takes place in May and deals with machine learning in general.

- ICML – Created in 1980, the International Conference on Machine Learning (ICML) is held every year in July. The submission of research papers takes place from late December to early February.

- Interspeech – Established in 1988, the Interspeech conference is held in late August/early September. The objective of the International Speech Communication Association (ISCA) is to promote activities and exchanges in all areas related to the science and technology of speech communication.

- NeurIPS – Created in 1987, the scientific conference in artificial intelligence and computational neuroscience called NeurIPS (for Neural Information Processing Systems) is held every year in December. It deals with all aspects of the use of machine learning networks and artificial intelligence.

The next challenges

In a conversational system, it is important that the conversation takes place as naturally as possible, i.e. without excessive pauses. The voice generation must therefore be almost instantaneous. A human being is able to react very quickly to a question, whereas a generation system must often wait until the end of the generation to give the signal back to the user (notably because it receives a whole sentence to transform). With the use of parallelism, the non-autoregressive systems that have appeared recently are clearly surpassing the old models since they are capable of splitting the production of the output signal into several activities in parallel and are thus able to produce the voice signal in a few milliseconds, regardless of the length of the sentence (this is known as Real-Time Factor or RTF). In telephone call centers, the conversational virtual assistant (also called callbot) must be able to react in a few seconds to a request or it will be perceived as inefficient or dysfunctional.

Voice generation systems are still often trapped by single letters, spelling, repeated numbers, long sentences, number to speech, etc., which leads to inconsistent quality depending on the input. Furthermore, some unusual peaks are still generated from the models and lead to dissonances in the produced signal. Since the human ear is extremely sensitive to such variations, the poor quality of the automatic generation is immediately detected and reduces the appreciation score. Even if huge improvements have been made, undoing these errors remains a challenge.

The size of the models and the space they occupy in memory is also an issue for future networks that will have to match networks with many parameters (several tens of millions of parameters) but with much fewer resources. CPU and memory are limited on a low-resource device such as a mobile or a box. DeviceTTS (Huand et al. – Speech Lab Alibaba Group – Oct. 2020) is able to generate voices with "only" 1.5 million parameters and 0.099 GFLOPS, and quality close to Tacotron and its 13.5 million parameters. LightSpeech (Luo et al. – USTC and Microsoft – Feb. 2021) based on FastSpeech 2 manages, without loss of MOS, to match it with its 1.8M parameters compared to the 27M of FastSpeech 2.

Most of the generated voices are often monotonous, and flat unless a data set is available that includes a variety of emotional expressions and multiple speakers. In order to indicate to the system variations in duration and rhythm to be applied to the output signal (prosody), there is a markup language called Speech Synthesis Markup Language (or SSML). Via a system of tags that surround the words to be varied, it is possible to apply a certain number of characteristics such as the pitch and its range (lower or higher), the contour, the rate, the duration, and the volume. It also allows you to define pauses, to say a word, etc. The latest publications tend to replace this tagging with other mechanisms such as expected prosody enunciation (Expressive TTS using style tag – Kim et al. 2021). Researchers are modifying current networks to add additional networks to modulate the generated signal and thus simulate emotion, emphasis, etc. (Emphasis-Li et al. 2018, CHiVE-Wan et al. 2019, Flavored Tacotron- Elyasi et al. 2021). Voice conversion techniques are also used: the signal is modified after generation to modulate it according to the destination.

Most systems are designed to generate a single voice corresponding to the voice that was used for training. There is some interest (especially in light of recent publications: Arik et al. 2018, Jia et al. 2018, Cooper et al. Attentron Choi et al. 2020, and SC-GlowTTS Casanova et al. 2021) in generating new voices without them having been seen during training. The zero-shot TTS (ZS-TTS) approach involves relying on a few seconds of speech to adapt the network to a new voice. This method is similar to voice cloning. The competition called The Multi-speaker Multi-style Voice Cloning Challenge is a challenge in which teams must provide a solution to clone the voice of a target speaker in the same or other languages. The results are also evaluated in a large-scale listening test. Another similar competition focuses more generally on voice conversion: the Voice Conversion Challenge.

There are more than 7,100 different languages in the world. This figure rises to 41,000 if we add all the dialects spoken (China, for example, has more than 540 languages spoken in its territory and India has more than 860 languages). Industrial speech synthesis systems often offer only a small part of this extremely diverse ecosystem. For example, Google Cloud Speech-to-text offers 41 languages and 49 if you add the country variations (e.g. French Canada and French France).

Current networks are able to reproduce a voice by learning transfer from a few seconds of the voice of the person to be cloned. As much as it allows a person who has lost his voice to have the possibility to generate it, it is now possible to impersonate someone else by recording his voice for a few minutes. It is thus possible to make a person say what you want or to try to impersonate a company by taking the voice of its manager. In parallel to the synthesis, the next challenge will be to provide systems capable of detecting these frauds. As humans are unable to pronounce the same sentence twice without making variations between the two pronunciations, it is thus possible to detect whether we are dealing with a robot or a human by simply asking him to repeat his sentence. However, you will need to have a good ear and a good auditory memory to make the difference!

Conclusion

Voice synthesis is an exciting universe because it touches us at the heart of our human status and it reaches quality levels today that are very close to a natural voice.

Universities and company research laboratories have gone beyond the stage of simple voice reproduction and have taken on other challenges such as increasing the speed of generation without lowering the quality, correcting the last generation errors, generating several different voices from a single speaker, adding prosody to the signal, etc.

The quality level of the voices produced to date is high enough to allow applications in all possible domains and especially in the context of voice-based conversational assistants.

What will you do with speech synthesis for your business uses?

References

CrowdMOS: An Approach For Crowdsourcing Mean Opinion Score Studies

Blizzard Challenge et Blizzard Challenge 2020

TTS synthesis with bidirectional LSTM based recurrent neural networks(2014), Yuchen Fan et al. [pdf]

WaveNet: A Generative Model for Raw Audio(2016), Aäron van den Oord et al. [pdf]

Char2Wav: End-to-end speech synthesis(2017), J Sotelo et al. [pdf] Deep Voice: Real-time Neural Text-to-Speech(2017), Sercan O. Arik et al. [pdf]

Deep Voice : Real-time Neural Speech-to-Text (2017), Arik et al. [pdf]

Deep Voice 2: Multi-Speaker Neural Text-to-Speech (2017), Sercan Arik et al. [pdf]

Deep Voice 3: 2000-Speaker Neural Text-to-speech (2017), Wei Ping et al. [pdf]

Parallel WaveNet: Fast High-Fidelity Speech Synthesis (2017), Aaron van den Oord et al. [pdf]

Tacotron: Towards End-to-End Speech Synthesis(2017), Yuxuan Wang et al. [pdf]

VoiceLoop: Voice Fitting and Synthesis via a Phonological Loop(2017), Yaniv Taigman et al. [pdf]

ClariNet: Parallel Wave Generation in End-to-End Text-to-Speech(2018), Wei Ping et al. [pdf]

FastSpeech: Fast, Robust and Controllable Text to Speech(2019), Yi Ren et al. [pdf]

MelNet: A Generative Model for Audio in the Frequency Domain(2019), Sean Vasquez et al. [pdf]

Multi-Speaker End-to-End Speech Synthesis(2019), Jihyun Park et al. [pdf]

[TransformerTTS] Neural Speech Synthesis with Transformer Network(2019), Naihan Li et al. [pdf]

[ParaNet] Parallel Neural Text-to-Speech(2019), Kainan Peng et al. [pdf]

WaveFlow: A Compact Flow-based Model for Raw Audio(2019), Wei Ping et al. [pdf]

Waveglow: A flow-based generative network for speech synthesis(2019), R Prenger et al. [pdf]

[EATS] End-to-End Adversarial Text-to-Speech(2020), Jeff Donahue et al. [pdf]

FastSpeech 2: Fast and High-Quality End-to-End Text to Speech(2020), Yi Ren et al. [pdf]

HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis(2020), Jungil Kong et al. [pdf] Location-Relative Attention Mechanisms For Robust Long-Form Speech Synthesi(2020), Eric Battenberg et al. [pdf]

Merlin: An Open Source Neural Network Speech Synthesis System (2016) Wu et al.

WaveGAN / SpecGAN ADVERSARIAL AUDIO SYNTHESIS (2018) Donahue et al. [pdf]

WaveRNN fficient Neural Audio Synthesis (2018) Kalchbrenner et al. [pdf]

FFTNet: a Real-Time Speaker-Dependent Neural Vocoder (2018) Jin et al. [pdf]

[SEA] SAMPLE EFFICIENT ADAPTIVE TEXT-TO-SPEECH (2018) Chen et al. [pdf]

FloWaveNet : A Generative Flow for Raw Audio (2018) Kim et al. [pdf]

DurIAN: Duration Informed Attention Network For Multimodal Synthesis (2019) Yu et al. [pdf]

GAN-TTS HIGH FIDELITY SPEECH SYNTHESIS WITH ADVERSARIAL NETWORKS (2019) Binkowski et al. [pdf]

MelGAN: Generative Adversarial Networks for Conditional Waveform Synthesis (2019) Kumar et al. [pdf]

SqueezeWave: Extremely Lightweight Vocoders for On-device Speech Synthesis (2020) Zhai et al. [pdf]

RobuTrans: A Robust Transformer-Based Text-to-Speech Model (2020) Li et al. [pdf]

TalkNet: Fully-Convolutional Non-Autoregressive Speech Synthesis Model (2020) Beliaev et al. [pdf]

WG-WaveNet: Real-Time High-Fidelity Speech Synthesis without GPU (2020) Hsu et al. [pdf]

WaveNODE: A Continuous Normalizing Flow for Speech Synthesis (2020) Kim et al. [pdf]

FastPitch: Parallel Text-to-speech with Pitch Prediction (2020) Lancucki et al. [pdf]

SpeedySpeech: Efficient Neural Speech Synthesis (2020) Vainer et al. [pdf]

WaveGrad: Estimating Gradients for Waveform Generation (2020) Chen et al. [pdf]

DiffWave: A Versatile Diffusion Model for Audio Synthesis (2020) Kong et al. [pdf]

Parallel Tacotron: Non-Autoregressive and Controllable TTS (2020) Elias et al. [pdf]

Efficient WaveGlow: An Improved WaveGlow Vocoder with Enhanced Speed (2020) Song et al. [pdf]

Reformer-TTS: Neural Speech Synthesis with Reformer Network (2020) Ihm et al. [pdf]

Wave-Tacotron: Spectrogram-free end-to-end text-to-speech synthesis (2020) Weiss et al. [pdf]

s-Transformer: Segment-Transformer for Robust Neural Speech Synthesis (2020) Wang et al. [pdf]

AdaSpeech: Adaptive Text to Speech for Custom Voice (2021) Chen et al. [pdf]

Diff-TTS: A Denoising Diffusion Model for Text-to-Speech (2021) Jeong et al. [pdf]

AdaSpeech 2: Adaptive Text to Speech with Untranscribed Data (2021) Yan et al. [pdf]

Grad-TTS: A Diffusion Probabilistic Model for Text-to-Speech (2021) Popov et al. [pdf]