Solving My Wife’s Problem ‘What Should I Wear Today?’ With AI

By Agustinus Nalwan

Overview

This question pops up from my wife nearly every day:

What should I wear today?

It is a tough question for me to answer. Firstly, I have no sense of fashion, whereas Yumi is quite the opposite: she even has a degree in Fashion Design. Secondly, my memory is too poor to recall what she wore over the past few weeks, so I can’t add any variety to my answers.

So, one day, I decided to spend my weekends building a piece of tech that I thought would solve her problem.

Before I start, I’d like to thank her for all the support she gave me in this project and in all my other crazy projects. Honey, you are the best!

Research

I started by doing some research to find out what I needed to build. I tried to put myself in her situation: what information would I need to decide which clothes to wear today?

What clothes do I have? (CH)

Obviously, the recommendation has to come from the catalogue of clothes I currently own, so this is must-have information.

What did I wear in the past few weeks? (CD)

Surely you don’t want to wear the same clothes several days in a row. Even though it’s not super critical, knowing what you wore in the past few weeks helps keep the recommendations diverse. On top of that, knowing the weather and the event you were going to when you wore each outfit will also help the recommendation system learn which clothes suit which occasion and weather.

Today’s weather (W)

It’s a no-brainer that what you wear is influenced by the weather that day.

Event (E)

When you are going to a party, you want to wear something a little flashy, like a dress; however, if you are going hiking, you want to wear something a little sporty.

To sum up, the Clothes To Wear Today (CTWT) can be described by the following function:

CTWT = Func(CH, CD, W, E)
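To make the function concrete, here is a toy sketch of what `Func` could look like. Everything in it is an assumption for illustration: the `Outfit` and `DiaryEntry` types, the 7-day "don't repeat" window and the scoring weights are hypothetical, and this is not faAi’s recommender (which doesn’t exist yet at this stage of the project). It simply encodes the reasoning above: CD filters out recently worn clothes and hints at which clothes suit which weather and event.

```python
from dataclasses import dataclass
from datetime import date
from typing import List, Optional


@dataclass
class Outfit:          # an item in the clothes catalogue (CH); hypothetical type
    name: str


@dataclass
class DiaryEntry:      # one record in the clothes diary (CD); hypothetical type
    day: date
    outfit: str
    weather: str
    event: str


def ctwt(ch: List[Outfit], cd: List[DiaryEntry], w: str, e: str,
         today: date, min_gap_days: int = 7) -> Optional[Outfit]:
    """Toy CTWT = Func(CH, CD, W, E): return the best-scoring outfit, or None."""
    best, best_score = None, float("-inf")
    for outfit in ch:
        history = [d for d in cd if d.outfit == outfit.name]
        # Diversity: skip anything worn within the last `min_gap_days` days.
        if any((today - d.day).days < min_gap_days for d in history):
            continue
        # Suitability: reward outfits previously worn in the same weather or to the same event.
        score = sum(d.weather == w for d in history) + 2 * sum(d.event == e for d in history)
        if score > best_score:
            best, best_score = outfit, score
    return best
```

Weighting the event higher than the weather is an arbitrary choice here; a real system would learn these preferences from the diary rather than hard-code them.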

All I need to build is a system that performs this function :) Sounds simple, huh? I let my imagination run wild and ended up visualising a voice assistant device with a screen, mounted on her wardrobe, which she can ask “What should I wear today?” and which answers with photos of the recommended clothes. Even better, it will show what she looks like wearing those clothes, to provide more visual context. It will also be able to justify its recommendation. Why? So that it can answer the follow-up question Yumi normally asks after I give my recommendation: “Why do you recommend that?”

This tech will definitely involve a lot of AI. Excited, I started looking for a name and a persona for this AI and came up with faAi. It is pronounced “Fei” and stands for Fashion Assistant Artificial Intelligence.

Plan of Action

I started jotting down tasks that I needed to execute:

- Building a photo diary of what she wears every day (CD), tagged with event and weather.

- Building a photo catalogue of the clothes she owns (CH)

- Building a voice assistant bot to be mounted on her wardrobe

An easy way to build the clothes catalogue would be to photograph every item she owns, one by one, and an easy way to build the diary would be to take a daily selfie. However, as an advocate of Customer Experience and Automation, that is a no-no for me. I need a system that automatically builds up her clothes catalogue and diary with zero effort.

After some thinking, I came up with this plan: what if I mount a camera somewhere in the house that automatically takes a photo of her full body? The camera should be smart enough to only take photos of her, not me, my parents or my in-laws (yes, in case you wonder, both my parents and my in-laws live with us from time to time, and luckily they get along well). It also needs to be able to identify the clothes she wears, so it can store them in the clothes diary and store only the unique ones in the clothes catalogue.

Apart from a camera, I also need a computing device to perform all the smarts above. The perfect device for this is AWS DeepLens, a deep-learning-enabled camera on which you can deploy a myriad of AI models that run right on the device (on the edge).

From this point, I started to realise that this was going to be a big project. I would definitely need to build an AI model that spots faces and bodies in a video frame, and then perform facial recognition. If Yumi’s face is identified, the next AI model needs to capture her clothing and search whether this item is already in her catalogue, creating a new entry if it isn’t. Either way, an entry will also be added to the clothes diary, tagged with weather and event.
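The flow described above can be sketched as a small decision function. This is only an illustration under loud assumptions: face recognition and clothing identification are stubbed out as inputs, and the catalogue search uses cosine similarity between hypothetical clothing embedding vectors with an arbitrary 0.9 threshold. That is one common way to implement such a lookup, not necessarily how faAi ends up doing it.

```python
import math
from typing import Dict, List, Tuple

Vector = List[float]


def cosine(a: Vector, b: Vector) -> float:
    """Cosine similarity between two non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b)))


def process_capture(person: str, clothing_vec: Vector,
                    catalogue: Dict[str, Vector], diary: List[Tuple[str, str, str]],
                    weather: str, event: str, threshold: float = 0.9) -> str:
    """Hypothetical per-capture step; returns the catalogue id of the outfit, or '' if skipped."""
    if person != "Yumi":  # only Yumi's outfits are recorded
        return ""
    # Search the catalogue for the most similar known item.
    match = max(catalogue, key=lambda k: cosine(catalogue[k], clothing_vec), default=None)
    if match is None or cosine(catalogue[match], clothing_vec) < threshold:
        # Not seen before: create a new catalogue entry.
        match = "item-{}".format(len(catalogue) + 1)
        catalogue[match] = clothing_vec
    # Either way, log the outfit in the diary with its weather and event tags.
    diary.append((match, weather, event))
    return match
```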

To keep my sanity, I decided to split this project into several phases, with the first phase focusing only on automatically building the clothes diary. As I don’t need to build the clothes catalogue yet, I don’t need to build the clothes identification system just yet either. Even though the end goal is a voice assistant on her wardrobe, I would like her to have something she can already use once the first phase is complete. So I added an extra task: building a mobile app she can use to browse her clothes diary, which will at least help her decide what to wear.

Automatic Clothes Diary Builder

My first task is to find the right place to mount the camera. Mounting it in her wardrobe might not be such a great idea, as I don’t want to accidentally capture photos I shouldn’t, if you know what I mean. Besides, I only want to add clothes to her diary if she is going out wearing them. There is no point recording her wearing PJs around the house.

After some searching, I found the perfect spot: on top of a cabinet, directly facing my front door. After all, you need to go through the front door to leave the house.

Detecting a Person and a Face with AWS DeepLens

My next task is to build an AI object detection model running on my AWS DeepLens, which detects the presence and location of persons and faces. Faces are used to identify who the person is, and person detections are used to crop an image of their full body to be stored in the clothes diary.

I used Amazon SageMaker to build a custom object detection model and deployed it to the AWS DeepLens. However, to keep this blog telling the story at a higher level, I will publish the full details in a separate blog.

AWS DeepLens comes with a super useful feature called Project Stream, which lets you display and annotate video frames and watch them in your web browser. This way, I can easily observe my model’s performance and draw text and boxes to help me debug. As you can see in the image above, my model accurately identifies Yumi’s full body and her face. I am quite happy with the accuracy.

Detecting Persons At The Right Location

The next problem to solve is to trigger the capture only when the person is near the door, which may indicate an intent to leave the house. The example footage below, for instance, shows a false positive detection, where a person is simply walking past from the front living room towards the dining room.

My house floor plan below clearly shows two red arrows, which indicate the walking paths that cause false positive detections, whereas we only want to capture a person who is in the green region. The easiest solution is to capture a person only when both the top and the bottom of their bounding box are fully inside the frame. Applying this logic to the false positive case above correctly skips that image, since the bottom of the bounding box is outside the frame.

The positive case below, on the other hand, correctly triggers an image capture, as both the top and the bottom of the bounding box are fully inside the frame.
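The top-and-bottom rule fits in a few lines. This is a minimal sketch, assuming the detector returns boxes as pixel coordinates `(xmin, ymin, xmax, ymax)` with the origin at the top-left of the frame; the function name and the `margin` parameter (how close to the frame edge still counts as "cut off") are hypothetical additions of mine.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax) in pixels, origin at top-left


def should_capture(box: Box, frame_height: int, margin: int = 5) -> bool:
    """Capture only if both the top and bottom edges of the person box are inside the frame.

    A box touching the top or bottom edge (within `margin` pixels) is treated as cut off,
    e.g. someone walking past whose feet are below the bottom of the frame.
    """
    _, ymin, _, ymax = box
    return ymin > margin and ymax < frame_height - margin
```

Only the vertical extent matters for this rule, which is why the x coordinates are ignored.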

To make sure I have covered all possible false positive cases, I need to run the system for a whole day. First, I have to complete the next part of the system, which sends the cropped image of the detected person to my Image Logger S3 bucket. This way, I can run the system for a day without supervision and simply check all the images in that bucket at the end of the trial run.

The best way to send an image out of an IoT device is via the MQTT messaging system, which is surprisingly easy, especially with the great example code provided when you create your AWS DeepLens project. I just need to add a few extra lines of code to encode the cropped image as a JPEG stream and base64-encode it so I can send it via MQTT as a string message.

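```python
import base64
import os

import cv2
import greengrasssdk

# MQTT client provided by the Greengrass runtime on the DeepLens.
client = greengrasssdk.client('iot-data')
iotTopic = '$aws/things/{}/infer'.format(os.environ['AWS_IOT_THING_NAME'])

# personImage is the cropped full-body image (a NumPy array) of the detected person.
# cv2.imencode returns (success_flag, encoded_buffer); we only need the buffer.
personImageRaw = cv2.imencode('.jpg', personImage)[1]

# Base64-encode the JPEG bytes so they can be sent as a plain string message.
personImageStr = base64.b64encode(personImageRaw.tobytes()).decode('ascii')

client.publish(topic=iotTopic, payload=personImageStr)
```

The message is received by the MQTT topic subscriber in the cloud, which then triggers my lambda function to base64-decode the image and save it to an S3 bucket. Decoding the image from the MQTT string is super easy, with just the one line of code below.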

```python
import base64

# event holds the base64 string published by the DeepLens.
jpgStream = base64.b64decode(event)
```

With all the above in place, I ran the system for the whole day and enjoyed the rest of my weekend :)
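For completeness, here is a minimal sketch of what the receiving lambda function might look like. The bucket name and key layout are hypothetical, and it assumes the IoT rule hands the raw base64 string to the function as `event`, as the one-liner above does. `put_object` is the standard boto3 S3 call; the optional `s3` parameter is only there so the handler can be exercised with a fake client (Lambda itself calls it with just `event` and `context`).

```python
import base64
import datetime

IMAGE_LOGGER_BUCKET = "my-image-logger"  # hypothetical bucket name


def lambda_handler(event, context, s3=None):
    """Decode the base64 JPEG sent over MQTT and store it in the Image Logger bucket."""
    if s3 is None:
        import boto3  # imported lazily so the handler can be tested with a fake client
        s3 = boto3.client("s3")
    jpg_stream = base64.b64decode(event)
    # Timestamped key such as captures/2019/01/20/083015123456.jpg (layout is an assumption).
    now = datetime.datetime.now(datetime.timezone.utc)
    key = now.strftime("captures/%Y/%m/%d/%H%M%S%f.jpg")
    s3.put_object(Bucket=IMAGE_LOGGER_BUCKET, Key=key, Body=jpg_stream,
                  ContentType="image/jpeg")
    return {"bucket": IMAGE_LOGGER_BUCKET, "key": key}
```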

Mother in Law Avoidance

After running the system for the whole day, my Image Logger showed lots of interesting images. Some of them were correct detections, as seen below, which is good.

Seeing my other family members being recorded was expected; however, something else caught my attention. Images of my mother-in-law (her upper body, to be more specific) dominated, making up 95% of all captured images.

Inspecting the raw video footage log below reveals why.

Obviously, she passed the detection logic, as the top and bottom of her bounding box (only her upper half was detected, because the kitchen bench occluded the rest) were fully inside the frame. The system captured lots of photos of her because she spends a lot of time in the kitchen. This would force the later stages of the pipeline to run a lot of unnecessary facial recognition, which is not good. However, seeing countless photos of her recorded throughout the day reminded me of how busy she is every day, preparing meals for us and helping with our baby. She is the best mother-in-law in the world to me…

OK, despite how great she is, I don’t want her photos dominating the processing pipeline further down the track. This problem is easily fixed by introducing an exclusion zone: any detection to the left of the exclusion line (shown as a red line below) is ignored.

Running the system again for a whole day showed no more mother-in-law behind the kitchen bench!
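Combining the exclusion zone with the earlier top-and-bottom rule gives a filter along these lines. This is a sketch under assumptions: boxes are `(xmin, ymin, xmax, ymax)` pixels, the exclusion line is treated as a vertical line at a hypothetical x-coordinate `exclusion_x`, and a detection counts as "left of the line" when its horizontal centre is. If the real line is angled, you would instead test which side of the line the box centre falls on.

```python
from typing import Tuple

Box = Tuple[int, int, int, int]  # (xmin, ymin, xmax, ymax) in pixels, origin at top-left


def should_capture(box: Box, frame_height: int, exclusion_x: int, margin: int = 5) -> bool:
    xmin, ymin, xmax, ymax = box
    # Rule 1: the top and bottom of the box must both be inside the frame.
    fully_visible = ymin > margin and ymax < frame_height - margin
    # Rule 2 (exclusion zone): ignore people whose box centre is left of the exclusion line,
    # e.g. someone standing behind the kitchen bench.
    centre_x = (xmin + xmax) / 2
    return fully_visible and centre_x >= exclusion_x
```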

Detecting The Right Person

My next challenge is to add facial recognition so that only images of Yumi or myself are recorded. Just to clarify, it’s not that I need this system to recommend clothes for me to wear; including myself means I can easily test the system without constantly bugging her to pose in front of the camera.

I really wanted to do the facial recognition on the edge to save cost. However, after further investigation, this turned out to be hard. A proper facial recognition system is pretty GPU-intensive, which would add significant processing time to an already slow system. With just my face/person detection, the system currently runs at 1 FPS, and anything slower would reduce the chance of getting a good capture. A simpler facial recognition system like the one described here won’t work for me, as it can only recognise faces looking directly at the camera.

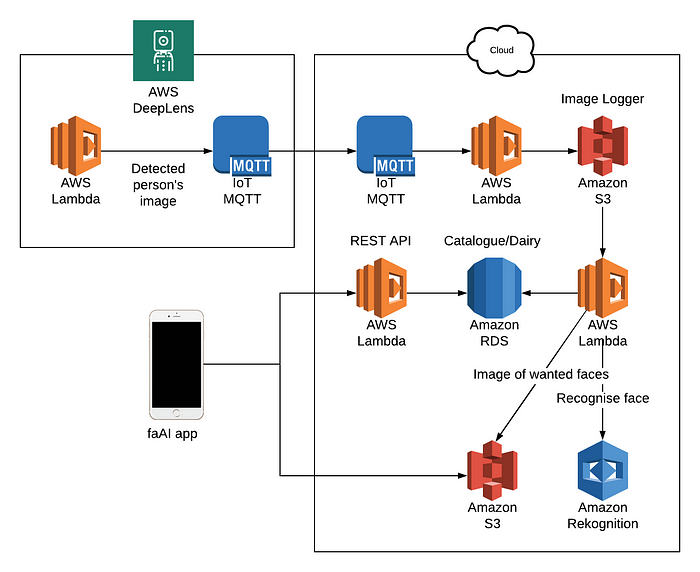

With the above in mind, I decided to do the facial recognition in the cloud. I set up another lambda function that is triggered whenever a new entry appears in my Image Logger. This lambda function calls AWS Rekognition’s facial recognition to identify the person. If the person is identified as myself or Yumi, an entry is created in a Postgres database; this database is the clothes diary we have been talking about. Each entry also stores the time the image was captured, the temperature and weather condition (sunny, raining or cloudy) at that time, and the name of the person identified. The current temperature and weather are obtained from the weather bureau’s RSS feed. Finally, the image is copied into another S3 bucket, which acts as a public image server accessible by my mobile app. This way, I can make sure that only a restricted set of images is publicly available, and in the future I can even add extra checks to make sure no sensitive images are publicly accessible.
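To make the diary entry concrete, here is a minimal sketch of a possible schema and insert. It uses Python’s built-in `sqlite3` purely as a stand-in for Postgres so the example runs anywhere; the table and column names are hypothetical. With Postgres (e.g. via psycopg2) the SQL would be nearly identical, except that placeholders are written `%s` instead of `?`.

```python
import sqlite3

SCHEMA = """
CREATE TABLE IF NOT EXISTS clothes_diary (
    id            INTEGER PRIMARY KEY,
    captured_at   TEXT NOT NULL,   -- capture time (ISO 8601)
    person        TEXT NOT NULL,   -- name returned by facial recognition
    temperature_c REAL,            -- from the weather bureau feed
    weather       TEXT CHECK (weather IN ('sunny', 'raining', 'cloudy')),
    image_key     TEXT NOT NULL    -- key of the copy in the public image bucket
)
"""


def add_diary_entry(conn, captured_at, person, temperature_c, weather, image_key):
    """Insert one diary row; the CHECK constraint rejects unknown weather values."""
    conn.execute(
        "INSERT INTO clothes_diary (captured_at, person, temperature_c, weather, image_key)"
        " VALUES (?, ?, ?, ?, ?)",
        (captured_at, person, temperature_c, weather, image_key),
    )
    conn.commit()


conn = sqlite3.connect(":memory:")
conn.execute(SCHEMA)
add_diary_entry(conn, "2019-01-20T08:30:00", "Yumi", 24.5, "sunny", "captures/0001.jpg")
```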

Using AWS Rekognition’s facial recognition is pretty straightforward. I simply need to create a face collection containing the faces I want the system to recognise. For each face (myself and Yumi), I call IndexFaces to add it to the collection. Go here to find out more about this. Checking whether a face is in the collection is then just one API call.
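Here is a minimal sketch of both steps with boto3. `index_faces` and `search_faces_by_image` are real Rekognition client methods; the collection id, the `ExternalImageId` labels and the 90% similarity threshold are hypothetical choices. The client is passed in as a parameter so the logic can be tried without AWS credentials; inside the lambda it would simply be `boto3.client("rekognition")`.

```python
COLLECTION_ID = "faai-faces"              # hypothetical collection name
ALLOWED_PEOPLE = {"Yumi", "Agustinus"}    # hypothetical ExternalImageId labels


def enroll(rekognition, bucket, key, name):
    """One-off step: add a reference photo of `name` to the face collection."""
    rekognition.index_faces(
        CollectionId=COLLECTION_ID,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        ExternalImageId=name,
        MaxFaces=1,
    )


def identify(rekognition, bucket, key, threshold=90.0):
    """Return the matched name if it is one of ALLOWED_PEOPLE, else None."""
    resp = rekognition.search_faces_by_image(
        CollectionId=COLLECTION_ID,
        Image={"S3Object": {"Bucket": bucket, "Name": key}},
        FaceMatchThreshold=threshold,
        MaxFaces=1,
    )
    matches = resp.get("FaceMatches", [])
    if not matches:
        return None
    name = matches[0]["Face"].get("ExternalImageId")
    return name if name in ALLOWED_PEOPLE else None
```

Note that `search_faces_by_image` raises an `InvalidParameterException` when it cannot find any face in the image, so a production lambda would need to catch that case as well.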

Building a Fashion Diary App

This is the most exciting part of the first phase, as I finally get to visualise the clothes diary in a mobile app. We are both iPhone users, so the mobile app has to work on iPhone. There are many ways to build a mobile app these days, such as HTML5 frameworks like PhoneGap, cross-platform frameworks like Ionic and Xamarin, or going native with Xcode. However, having been an iOS developer in a past life, the obvious choice for me is Xcode.

To provide access to my Postgres clothes diary, I built a public REST API using a lambda function. I was quite impressed with how easy it is to build a functional REST API this way. Traditionally, you spend the majority of development time dealing with infrastructure and deployment, such as writing the REST application skeleton, URL routing and deployment scripts, rather than writing the actual API code. This is even more pronounced when the API you need is as simple as mine.
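To show how little code such an endpoint needs, here is a minimal sketch of a handler that returns a person’s diary grouped by date, which is how the app displays it. It assumes the function sits behind Amazon API Gateway with Lambda proxy integration (so it returns `statusCode`, `headers` and a string `body`). The `person` query parameter, the response shape and the `fetch_entries` helper are hypothetical, and an in-memory list stands in for the Postgres query.

```python
import json

# Hypothetical stand-in for rows returned by a Postgres query on the clothes diary.
SAMPLE_ROWS = [
    {"person": "Yumi", "captured_at": "2019-01-20T08:30:00", "weather": "sunny",
     "temperature_c": 24.5, "image_url": "https://example.com/0001.jpg"},
    {"person": "Yumi", "captured_at": "2019-01-20T17:45:00", "weather": "cloudy",
     "temperature_c": 21.0, "image_url": "https://example.com/0002.jpg"},
    {"person": "Yumi", "captured_at": "2019-01-21T08:00:00", "weather": "raining",
     "temperature_c": 18.0, "image_url": "https://example.com/0003.jpg"},
    {"person": "Agustinus", "captured_at": "2019-01-21T08:10:00", "weather": "raining",
     "temperature_c": 18.0, "image_url": "https://example.com/0004.jpg"},
]


def fetch_entries(person):
    """Hypothetical data-access helper: one person's entries, newest first."""
    rows = [r for r in SAMPLE_ROWS if r["person"] == person]
    return sorted(rows, key=lambda r: r["captured_at"], reverse=True)


def lambda_handler(event, context):
    params = event.get("queryStringParameters") or {}
    person = params.get("person", "Yumi")
    days = {}  # insertion-ordered, so newest day comes first
    for row in fetch_entries(person):
        days.setdefault(row["captured_at"][:10], []).append(row)  # group by YYYY-MM-DD
    body = [{"date": day, "entries": entries} for day, entries in days.items()]
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps(body),
    }
```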

And finally, below is the overall end-to-end architecture diagram.

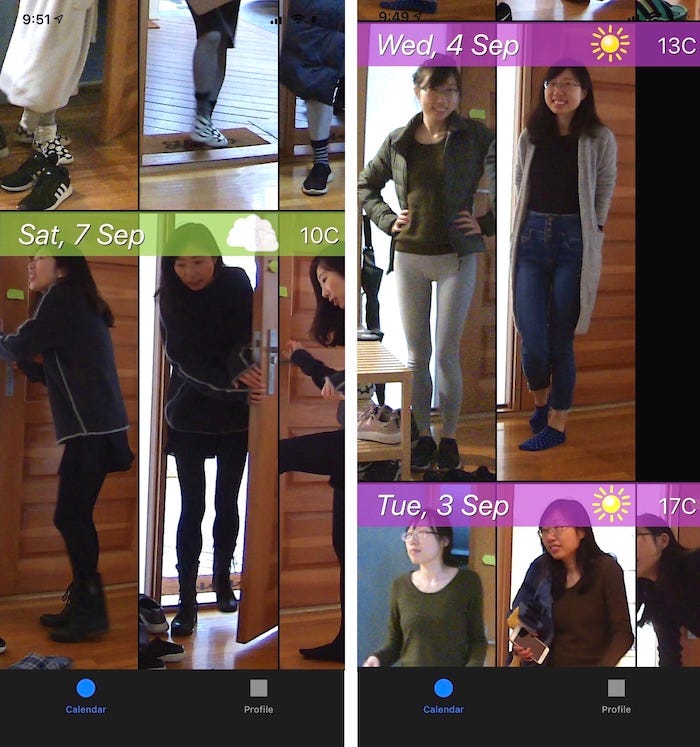

I managed to whip up the app and the REST API in one day, including designing the app’s splash screen and icon :) The app interface, as seen below, is pretty straightforward: a scrollable vertical list showing recorded images of Yumi, grouped by date. A weather icon (raining, cloudy or sunny) and the day’s temperature are added as an extra nice touch. I can now say that the system is Dev Complete! As an experienced software developer, I know that the next obvious step is QA.

QA

I ran the system for a whole week, and it seemed to be doing its job correctly. I got shots of Yumi entering and exiting the house, and not a single false positive! I also installed the app on her phone so she could start using it and give me feedback.

Despite the great result, there are a few things that I need to improve and add in the next phase.

Better Pose Detection

Half of the images were captured in a non-ideal pose, e.g. facing sideways awkwardly with one leg up as she reached the shoe-changing bench, carrying our baby, or wearing a jacket that covered the clothes she was actually wearing. I also found a few instances where she was not actually going out, just standing right in front of the door in her PJs. faAi is not smart enough yet: it simply takes a photo whenever it detects a face, regardless of the quality of the pose.

This is not really a big deal for this phase, as the goal is to have a mobile app where she can browse through the clothes diary. She can easily filter out the badly posed or irrelevant photos herself. Fixing this properly requires a more complex system, which I will attempt in a future phase. For now, the simplest solution is for her to take off her jacket, face the camera and quickly pose nicely when exiting or entering the house, so that the first few photos taken are good.

Event Detection

This is a task I just didn’t have time to complete in this phase; I will tackle it in the next one. Knowing why she is going out of the house will also help filter out unwanted captures, e.g. when she is just going out to take out the rubbish.

That’s it for now. I am pretty happy that one milestone has been ticked off; however, many more challenges remain before I have a complete end-to-end system. But what is life without a challenge?

Read the sequel to this blog, which talks about how I taught faAi to identify clothes, here.