Run Llama 2 70B on Your GPU with ExLlamaV2

Finding the optimal mixed-precision quantization for your hardware

The largest and best model of the Llama 2 family has 70 billion parameters. One fp16 parameter weighs 2 bytes. Loading Llama 2 70B requires 140 GB of memory (70 billion * 2 bytes).

In a previous article, I showed how you can run a 180-billion-parameter model, Falcon 180B, on 100 GB of CPU RAM thanks to quantization.

Llama 2 70B is substantially smaller than Falcon 180B.

Can it entirely fit into a single consumer GPU?

This is challenging. A high-end consumer GPU, such as the NVIDIA RTX 3090 or 4090, has 24 GB of VRAM. If we quantize Llama 2 70B to 4-bit precision, we still need 35 GB of memory (70 billion * 0.5 bytes). The model could fit across two consumer GPUs.

With GPTQ quantization, we can further reduce the precision to 3-bit without losing much of the model’s performance. A 3-bit parameter weighs 0.375 bytes in memory, so Llama 2 70B quantized to 3-bit would still weigh 26.25 GB (70 billion * 0.375 bytes). It doesn’t fit into one consumer GPU.

We could reduce the precision to 2-bit. The model would then weigh 17.5 GB and fit into 24 GB of VRAM, but, according to previous studies on 2-bit quantization, its performance would also drop significantly.
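The arithmetic above can be checked with a few lines of Python. This is a minimal sketch that only counts the weights, assuming the rounded figure of 70 billion parameters used in this article and 1 GB = 10^9 bytes. Inference overhead is deliberately ignored, so “fit” only means that the weights alone are below 24 GB.

```python
# Memory taken by the weights of Llama 2 70B at several precisions.
# Assumptions: 70e9 parameters (rounded) and 1 GB = 1e9 bytes; inference
# overhead (prompt, cache, buffers) is not counted.
N_PARAMS = 70e9
VRAM_GB = 24

def weight_memory_gb(n_params: float, bits_per_weight: float) -> float:
    """Return the size of the weights in GB."""
    return n_params * bits_per_weight / 8 / 1e9

for label, bits in [("fp16", 16), ("4-bit", 4), ("3-bit", 3), ("2-bit", 2)]:
    size = weight_memory_gb(N_PARAMS, bits)
    verdict = "fit" if size <= VRAM_GB else "do not fit"
    print(f"{label:>5}: {size:6.2f} GB -> weights {verdict} in {VRAM_GB} GB")
```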

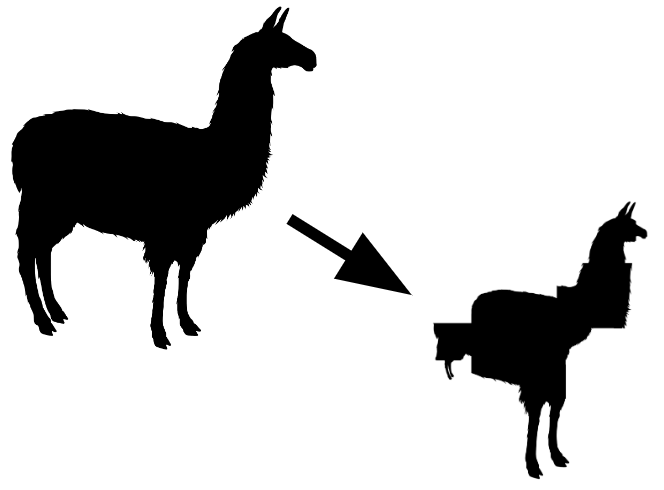

To avoid losing too much of the model’s performance, we could quantize the important layers, or parts, of the model to a higher precision and the less important parts to a lower precision. The model would then be quantized with mixed precision.

ExLlamaV2 (MIT license) implements mixed-precision quantization.

In this article, I show how to use ExLlamaV2 to quantize models with mixed precision. More specifically, we will see how to quantize Llama 2 70B to an average precision lower than 3-bit.

I implemented a notebook demonstrating and benchmarking mixed-precision quantization of Llama 2 with ExLlamaV2. It is available here:

Quantization of Llama 2 with Mixed Precision

Requirements

To quantize models with mixed precision and run them, we need to install ExLlamaV2.

Install it from source:

```bash
git clone https://github.com/turboderp/exllamav2
cd exllamav2
pip install -r requirements.txt
```

We aim to run models on consumer GPUs.

- Llama 2 70B: We target 24 GB of VRAM. NVIDIA RTX 3090/4090 GPUs would work. If you use Google Colab, the free tier won’t work: only the A100 available with Google Colab PRO has enough VRAM.

- Llama 2 13B: We target 12 GB of VRAM. Many GPUs have at least 12 GB of VRAM, for instance the RTX 3060 12 GB, RTX 3080 12 GB, RTX 4060 Ti 16 GB, and RTX 4080. It can run on the free Google Colab with the T4 GPU.

How to quantize with mixed precision using ExLlamaV2

The quantization algorithm used by ExLlamaV2 is similar to GPTQ. But instead of choosing a single precision, ExLlamaV2 tries different precisions for the modules of each layer while measuring the quantization error. All the tries and their associated errors are saved. Then, given a target precision provided by the user, ExLlamaV2 quantizes the model by choosing, for each module, the precision such that the model reaches the target average precision with the lowest error.
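To make the selection step concrete, here is a simplified sketch in Python. It is not ExLlamaV2’s actual optimizer: it assumes each module comes with a list of measured (bpw, error) options, that errors from different modules can simply be summed, and it uses a greedy heuristic that repeatedly applies the upgrade with the largest error reduction per extra bit until the average-precision budget is used up. The `Module` class, the `choose_precisions` function, and the numbers in the example are hypothetical.

```python
from dataclasses import dataclass

@dataclass
class Module:
    """Hypothetical record of one module's measured quantization options."""
    name: str
    n_weights: int
    options: list  # list of (bpw, error) pairs

def choose_precisions(modules, target_bpw):
    """Greedy sketch: start every module at its cheapest option, then keep
    applying the upgrade with the best error reduction per extra bit while the
    weighted-average bpw stays within target_bpw."""
    total_weights = sum(m.n_weights for m in modules)
    budget_bits = target_bpw * total_weights
    opts = [sorted(m.options) for m in modules]  # cheapest option first
    choice = [0] * len(modules)
    used_bits = sum(m.n_weights * o[0][0] for m, o in zip(modules, opts))
    if used_bits > budget_bits:
        raise ValueError("target_bpw is below the cheapest configuration")

    while True:
        best = None  # (error reduction per extra bit, module idx, option idx, extra bits)
        for i, (m, o) in enumerate(zip(modules, opts)):
            cur_bpw, cur_err = o[choice[i]]
            for j in range(choice[i] + 1, len(o)):
                bpw, err = o[j]
                extra = (bpw - cur_bpw) * m.n_weights
                gain = cur_err - err
                # Skip upgrades that do not help or would exceed the budget.
                if extra <= 0 or gain <= 0 or used_bits + extra > budget_bits:
                    continue
                if best is None or gain / extra > best[0]:
                    best = (gain / extra, i, j, extra)
        if best is None:
            break
        _, i, j, extra = best
        choice[i] = j
        used_bits += extra

    picked = {m.name: o[c] for m, o, c in zip(modules, opts, choice)}
    return picked, used_bits / total_weights

# Hypothetical example with two equally sized modules and a 3.0 bpw target.
modules = [
    Module("up_proj", 1000, [(2.18, 0.219), (3.13, 0.105), (4.13, 0.050)]),
    Module("down_proj", 1000, [(2.18, 0.300), (3.13, 0.120), (4.13, 0.060)]),
]
picked, avg_bpw = choose_precisions(modules, target_bpw=3.0)
print(picked, round(avg_bpw, 3))
```

In this toy example, the budget only allows one module to move up to 3.13 bpw, and the sketch upgrades the one whose error drops the most per extra bit. An exact solver (e.g., dynamic programming over discretized bit budgets) could find better combinations; the greedy version simply keeps the idea easy to follow.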

During quantization, ExLlamaV2 outputs all the tries:

Quantization tries for the 10th layer’s up_proj module of Llama 2 13B

```text
-- Linear: model.layers.10.mlp.up_proj
-- 0.05:3b/0.95:2b 32g s4 2.18 bpw rfn_error: 0.21867
-- 0.25:3b/0.75:2b 32g s4 2.38 bpw rfn_error: 0.20617
-- 0.25:4b/0.75:2b 32g s4 2.63 bpw rfn_error: 0.20230
-- 0.1:4b/0.4:3b/0.5:2b 32g s4 2.73 bpw rfn_error: 0.18449
-- 0.1:4b/0.9:3b 32g s4 3.23 bpw rfn_error: 0.10229
-- 0.2:6b/0.8:3b 32g s4 3.73 bpw rfn_error: 0.09791
-- 1.0:3b 128g s4 3.03 bpw rfn_error: 0.11354
-- 1.0:3b 32g s4 3.13 bpw rfn_error: 0.10491
-- 0.05:4b/0.95:3b 32g s4 3.18 bpw rfn_error: 0.10363
-- 0.4:4b/0.6:3b 32g s4 3.53 bpw rfn_error: 0.09272
-- 0.6:4b/0.4:3b 64g s4 3.66 bpw rfn_error: 0.08835
-- 1.0:4b 128g s4 4.03 bpw rfn_error: 0.05756
-- 1.0:4b 32g s4 4.13 bpw rfn_error: 0.05007
-- 0.1:5b/0.9:4b 32g s4 4.23 bpw rfn_error: 0.04889
-- 0.1:6b/0.9:4b 32g s4 4.33 bpw rfn_error: 0.04861
-- 1.0:5b 128g s4 5.03 bpw rfn_error: 0.02879
-- 0.1:6b/0.9:5b 32g s4 5.23 bpw rfn_error: 0.02494
-- 0.05:8b/0.05:6b/0.9:5b 32g s4 5.33 bpw rfn_error: 0.02486
-- 0.4:6b/0.6:5b 32g s4 5.53 bpw rfn_error: 0.02297
-- 0.1:8b/0.3:6b/0.6:5b 32g s4 5.73 bpw rfn_error: 0.02280
-- 1.0:6b 128g s4 6.03 bpw rfn_error: 0.01503
-- 1.0:6b 32g s4 6.13 bpw rfn_error: 0.01471
-- 0.1:8b/0.9:6b 128g s4 6.23 bpw rfn_error: 0.01463
-- 1.0:8b 32g s4 8.13 bpw rfn_error: 0.00934
-- Time: 19.57 seconds
```

We can see that, as expected, the error generally decreases as the quantization precision (bpw, i.e., bits per weight) increases. The decrease is not strict, though: the 0.2:6b/0.8:3b try (3.73 bpw) has a higher error than the 0.4:4b/0.6:3b try (3.53 bpw).
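One way to read such a log is to keep only the Pareto-optimal tries, i.e., those that no cheaper try beats on error. The Python sketch below parses the tries copied from the log above and does exactly that; only one try turns out to be dominated. It also checks an observation about this particular log: every bpw value matches, to two decimals, the weighted average of the bit widths plus 4/g bits, where g is the group size (32g, 64g, 128g). This is an empirical fit to these numbers, not a formula documented by ExLlamaV2, and the regular expression assumes the exact line format shown.

```python
import re

# Quantization tries copied from the log above (Llama 2 13B, layer 10, up_proj).
LOG = """\
-- 0.05:3b/0.95:2b 32g s4 2.18 bpw rfn_error: 0.21867
-- 0.25:3b/0.75:2b 32g s4 2.38 bpw rfn_error: 0.20617
-- 0.25:4b/0.75:2b 32g s4 2.63 bpw rfn_error: 0.20230
-- 0.1:4b/0.4:3b/0.5:2b 32g s4 2.73 bpw rfn_error: 0.18449
-- 0.1:4b/0.9:3b 32g s4 3.23 bpw rfn_error: 0.10229
-- 0.2:6b/0.8:3b 32g s4 3.73 bpw rfn_error: 0.09791
-- 1.0:3b 128g s4 3.03 bpw rfn_error: 0.11354
-- 1.0:3b 32g s4 3.13 bpw rfn_error: 0.10491
-- 0.05:4b/0.95:3b 32g s4 3.18 bpw rfn_error: 0.10363
-- 0.4:4b/0.6:3b 32g s4 3.53 bpw rfn_error: 0.09272
-- 0.6:4b/0.4:3b 64g s4 3.66 bpw rfn_error: 0.08835
-- 1.0:4b 128g s4 4.03 bpw rfn_error: 0.05756
-- 1.0:4b 32g s4 4.13 bpw rfn_error: 0.05007
-- 0.1:5b/0.9:4b 32g s4 4.23 bpw rfn_error: 0.04889
-- 0.1:6b/0.9:4b 32g s4 4.33 bpw rfn_error: 0.04861
-- 1.0:5b 128g s4 5.03 bpw rfn_error: 0.02879
-- 0.1:6b/0.9:5b 32g s4 5.23 bpw rfn_error: 0.02494
-- 0.05:8b/0.05:6b/0.9:5b 32g s4 5.33 bpw rfn_error: 0.02486
-- 0.4:6b/0.6:5b 32g s4 5.53 bpw rfn_error: 0.02297
-- 0.1:8b/0.3:6b/0.6:5b 32g s4 5.73 bpw rfn_error: 0.02280
-- 1.0:6b 128g s4 6.03 bpw rfn_error: 0.01503
-- 1.0:6b 32g s4 6.13 bpw rfn_error: 0.01471
-- 0.1:8b/0.9:6b 128g s4 6.23 bpw rfn_error: 0.01463
-- 1.0:8b 32g s4 8.13 bpw rfn_error: 0.00934
"""

TRY_RE = re.compile(
    r"--\s+(?P<mix>\S+)\s+(?P<group>\d+)g\s+s\d+\s+"
    r"(?P<bpw>[\d.]+)\s+bpw\s+rfn_error:\s+(?P<err>[\d.]+)"
)

def parse_tries(log):
    """Return (mix, group_size, bpw, error) tuples, one per try."""
    return [(m["mix"], int(m["group"]), float(m["bpw"]), float(m["err"]))
            for m in TRY_RE.finditer(log)]

def mix_bits(mix):
    """Weighted average bit width of a mix such as '0.1:4b/0.9:3b'."""
    total = 0.0
    for part in mix.split("/"):
        frac, bits = part.split(":")
        total += float(frac) * int(bits.rstrip("b"))
    return total

def pareto_front(tries):
    """Keep the tries whose error is lower than that of every cheaper try."""
    front, best_err = [], float("inf")
    for t in sorted(tries, key=lambda t: t[2]):  # sort by bpw
        if t[3] < best_err:
            front.append(t)
            best_err = t[3]
    return front

tries = parse_tries(LOG)
# Largest gap between the logged bpw and "weighted bits + 4 / group size".
max_gap = max(abs(mix_bits(mix) + 4 / g - bpw) for mix, g, bpw, _ in tries)
front = pareto_front(tries)
dominated = [t for t in tries if t not in front]
print(f"max gap: {max_gap:.4f} bpw")
print(f"{len(front)} of {len(tries)} tries are Pareto-optimal; dominated: {dominated}")
```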

Quantization with ExLlamaV2 is as simple as running the convert.py script:

Note: convert.py is in the root directory of ExLlamaV2

```bash
python convert.py \
    -i ./Llama-2-13b-hf/ \
    -o ./Llama-2-13b-hf/temp/ \
    -c test.parquet \
    -cf ./Llama-2-13b-hf/3.0bpw/ \
    -b 3.0
```

ExLlamaV2 doesn’t download models or datasets from the Hugging Face Hub. It expects the model and the calibration dataset to be stored locally.

The script’s main arguments are the following:

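- input model (-i): A local directory that contains the model in the “safetensors” format.

- working directory (-o): A local directory where ExLlamaV2 stores temporary files, such as the measurements, during quantization.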

- dataset used for calibration (-c): We need a dataset for calibrating the quantization. It must be stored locally in the “parquet” format.

- output directory (-cf): The local directory in which the quantized model will be saved.

- target precision of the quantization (-b): The model will be quantized with mixed precision whose average is the target precision. Here, I chose to target a 3-bit precision.
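Since everything must be local, a small pre-flight check can save a failed run. The Python sketch below is a hypothetical helper, `build_convert_command`, that verifies the two local-file requirements listed above (a model directory containing .safetensors files and a .parquet calibration file) and then assembles the same convert.py flags used in this article. It only uses the flags shown above; placing temp/ and a <bpw>bpw/ folder inside the model directory mirrors the example and is just a convention.

```python
from pathlib import Path

def build_convert_command(model_dir, calibration_file, target_bpw, exllamav2_dir="."):
    """Hypothetical helper: check local inputs, then build the convert.py command."""
    model_dir = Path(model_dir)
    calibration_file = Path(calibration_file)
    # -i expects a local directory containing the model in the safetensors format.
    if not any(model_dir.glob("*.safetensors")):
        raise FileNotFoundError(f"no .safetensors file found in {model_dir}")
    # -c expects a local calibration dataset in the parquet format.
    if calibration_file.suffix != ".parquet" or not calibration_file.is_file():
        raise FileNotFoundError(f"expected a local .parquet file, got {calibration_file}")
    return [
        "python", str(Path(exllamav2_dir) / "convert.py"),
        "-i", str(model_dir),
        "-o", str(model_dir / "temp"),               # temporary files, as in the example
        "-c", str(calibration_file),
        "-cf", str(model_dir / f"{target_bpw}bpw"),  # final quantized model
        "-b", str(target_bpw),
    ]
```

The returned list can be passed to `subprocess.run(cmd, check=True)` from Python’s standard library; with `target_bpw=3.0`, it reproduces the command shown above.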

This quantization took 2 hours and 5 minutes. I used Google Colab PRO with the T4 GPU and high CPU RAM. It didn’t consume more than 5 GB of VRAM during the entire process, but there was a peak consumption of 20 GB of CPU RAM.

The T4 is quite slow. The quantization time could be reduced with Google Colab’s V100 or an RTX GPU. Note: It’s unclear to me how much the GPU is used during quantization. It might be that the CPU speed has more impact on the quantization time than the GPU.

To quantize Llama 2 70B, you can do the same.

What precision should we target so that the quantized Llama 2 70B would fit into 24 GB of VRAM?

Here is the method you can apply to decide on the precision of a model given your hardware.

Let’s say we have 24 GB of VRAM. We should also always expect some memory overhead for inference. So let’s target a quantized model size of 22 GB.

First, we need to convert 22 GB into bits:

- 22 GB = 2.2e+10 bytes = 1.76e+11 bits (since 1 byte = 8 bits)

We have 1.76e+11 bits (b) available. Llama 2 70B has 7e+10 parameters (p) to be quantized. We target a precision that I denote bpw.

- bpw = b/p

- bpw = 176 000 000 000 / 70 000 000 000 = 2.51

So we can afford an average precision of 2.51 bits per parameter.

I round it down to 2.5 bits.
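The same calculation in Python, as a minimal sketch assuming 1 GB = 10^9 bytes and 70 billion parameters as above. With the same simplification, it also estimates how large the weights would be at 2.5, 2.4, and 2.3 bpw. The real quantized model is somewhat larger than this estimate (22.15 GB at 2.5 bpw, as reported later in this article), so keep some margin.

```python
import math

def max_bpw(budget_gb: float, n_params: float) -> float:
    """Average bits per weight that fit in budget_gb (1 GB = 1e9 bytes)."""
    return budget_gb * 1e9 * 8 / n_params

def weights_gb(n_params: float, bpw: float) -> float:
    """Estimated size of the quantized weights in GB."""
    return n_params * bpw / 8 / 1e9

N_PARAMS = 70e9  # Llama 2 70B, rounded
bpw = max_bpw(22, N_PARAMS)
target = math.floor(bpw * 10) / 10  # round down to one decimal to stay under budget
print(f"max average bpw: {bpw:.2f} -> target {target}")
for b in (2.5, 2.4, 2.3):
    print(f"{b} bpw -> about {weights_gb(N_PARAMS, b):.2f} GB of weights")
```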

To quantize Llama 2 70B to an average precision of 2.5 bits, we run:

```bash
python convert.py \
    -i ./Llama-2-70b-hf/ \
    -o ./Llama-2-70b-hf/temp/ \
    -c test.parquet \
    -cf ./Llama-2-70b-hf/2.5bpw/ \
    -b 2.5
```

This quantization is also feasible on consumer hardware with a 24 GB GPU, but it can take up to 15 hours. If you want to use Google Colab for this one, note that you will have to store the original model outside of Google Colab’s disk, since the disk provided with the A100 runtime is too small.

Running Llama 2 70B on Your GPU with ExLlamaV2

ExLlamaV2 provides all you need to run models quantized with mixed precision.

There is a chat.py script that will run the model as a chatbot for interactive use. You can also simply test the model with test_inference.py. This is what we will do to check the model speed and memory consumption.

For testing Llama 2 70B quantized with 2.5 bpw, we run:

```bash
python test_inference.py -m ./Llama-2-70b-hf/2.5bpw/ -p "Once upon a time,"
```

Note: “-m” is the directory of the quantized model and “-p” is the testing prompt.

The first run should take several minutes (8 minutes on an A100 GPU). Unless you installed a prebuilt package, ExLlamaV2 compiles its C++/CUDA extension just-in-time on first use, with PyTorch’s torch.utils.cpp_extension.

This compilation is time-consuming but cached.

If you run test_inference.py again, it should take only about 30 seconds.

The model itself weighs 22.15 GB. During my inference experiments, it occupied 24 GB of VRAM. It would barely fit on a 24 GB consumer GPU.

Why doesn’t it consume only 22.15 GB?

The model itself occupies 22.15 GB in memory, but inference consumes additional memory. For instance, we have to encode the prompt and store it in memory. Also, if you set a higher max sequence length or do batch decoding, inference will consume more memory.
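One component of this extra memory that grows with the sequence length and the batch size is the cache of attention keys and values. The rough estimate below assumes the Llama 2 70B configuration (80 layers, 8 key/value heads thanks to grouped-query attention, head dimension 128), an fp16 cache (2 bytes per value), and a cache allocated for the full sequence length. It ignores activations and other buffers, and the exact allocation in ExLlamaV2 may differ.

```python
def kv_cache_bytes(n_layers, n_kv_heads, head_dim, seq_len, batch_size=1, bytes_per_value=2):
    """Rough size of the key/value cache: keys and values (2 tensors) per layer,
    n_kv_heads * head_dim values each, per token and per sequence in the batch."""
    return 2 * n_layers * n_kv_heads * head_dim * seq_len * batch_size * bytes_per_value

# Assumed Llama 2 70B configuration: 80 layers, 8 KV heads, head dimension 128.
for seq_len, batch_size in [(2048, 1), (4096, 1), (4096, 4)]:
    size_gb = kv_cache_bytes(80, 8, 128, seq_len, batch_size) / 1e9
    print(f"seq_len={seq_len}, batch={batch_size}: ~{size_gb:.2f} GB")
```

Under these assumptions, doubling the max sequence length or the batch size doubles the cache, which is why both settings raise VRAM consumption.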

I used Google Colab’s A100 for this experiment. With a 24 GB GPU, you will likely get a CUDA out-of-memory error during inference, especially if the same GPU also runs your OS’s graphical user interface (e.g., Ubuntu Desktop consumes around 1.5 GB of VRAM).

To leave some margin, target a lower bpw: 2.4 or even 2.3 would free roughly 0.9 to 1.75 GB of VRAM for inference (70 billion * 0.1 or 0.2 bits / 8).

Models run with ExLlamaV2 are also very fast. I observed a generation speed between 15 and 30 tokens/second. For comparison, when I benchmarked Llama 2 7B quantized to 4-bit with GPTQ, a model 10 times smaller, I obtained around 28 tokens/second using Hugging Face transformers for generation.

Conclusion

Mixed-precision quantization is intuitive: we aggressively lower the precision of the model where it has less impact.

Running huge models such as Llama 2 70B is possible on a single consumer GPU.

Be sure to evaluate your models quantized with different target precisions. While larger models are easier to quantize without much performance loss, there is always a precision below which the quantized model becomes worse than smaller models quantized to a higher precision, or not quantized at all. For example, Llama 2 70B at 2-bit could be significantly worse than Llama 2 7B at 4-bit while still being bigger.