RetinaNet: The beauty of Focal Loss

The one-stage object detection model that changed the game!

Object Detection has been a boon for applications across a multitude of domains, so it is no surprise that it attracts a great deal of research. Over the years, researchers have worked relentlessly on improving Object Detection algorithms, and they have succeeded to such a degree that the performance metrics of detectors from five years ago are hardly comparable to those of today's models. So, for a more holistic view, I want to first lay out how we arrived here.

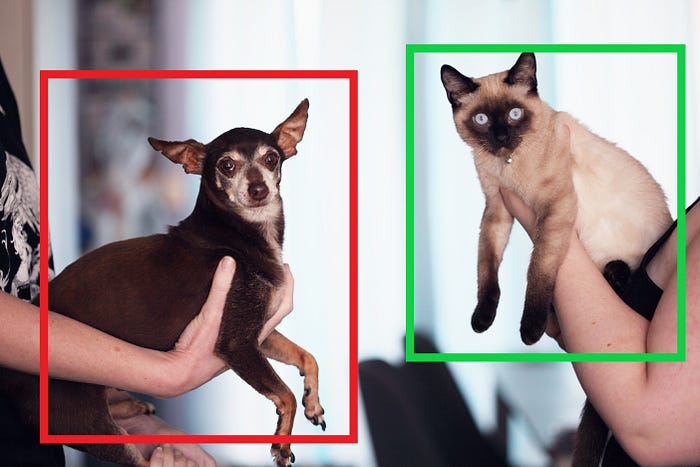

Object Detection

Object Detection started off as a two-stage process: the first stage proposes candidate regions of the image that may contain an object (localization), and the second stage classifies those regions (classification). The early pioneers of this approach were R-CNN and its successors, Fast R-CNN and Faster R-CNN. They were built around a region proposal mechanism, and it was mainly this mechanism that improved from one version to the next.

Another approach to Object Detection performs localization and classification in a single step. Although other single-stage networks, such as SSD (Single Shot Detector), appeared around the same time, it was YOLO that revolutionized the field in 2016: it localizes objects with bounding boxes and computes their class scores in one pass. Thanks to its speed, YOLO became the go-to model for most real-time applications. Along the same lines, Tsung-Yi Lin et al. published the paper “Focal Loss for Dense Object Detection”, which introduced a one-stage detector called RetinaNet. It outperformed every existing one-stage and two-stage detector at the time. Before diving into the nitty-gritty of RetinaNet, I will discuss the concept of Focal Loss.

Focal Loss

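Focal Loss was designed as a remedy for the class imbalance that arises when a dense detector is trained with Cross-Entropy Loss. By class imbalance, I mean (or rather, the authors meant) the imbalance between foreground and background examples, which is often on the order of 1:1000: a dense detector evaluates a huge number of candidate locations per image, and only a handful of them actually contain an object.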

Let’s ignore the formulas for the time being. Consider the case of γ = 0, which corresponds to plain Cross Entropy. If you look at its loss curve, you can see that even examples that are classified correctly with high confidence still incur a loss of non-trivial magnitude. Now add class imbalance to the picture: easy negatives are so abundant that their many small losses add up and overwhelm the loss coming from the rare foreground class.
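To put rough numbers on this, here is a small Python calculation. The scene is hypothetical (1,000 easy negatives, each given probability 0.9 for its correct class, plus one badly classified positive at 0.1); the counts are chosen for illustration and are not taken from the paper.

```python
import math


def cross_entropy(p_t: float) -> float:
    """Cross-entropy for one example, given p_t, the probability of its true class."""
    return -math.log(p_t)


# Hypothetical scene: 1,000 easy negatives (p_t = 0.9) and one hard positive (p_t = 0.1).
easy_total = 1000 * cross_entropy(0.9)
hard_total = cross_entropy(0.1)
easy_share = easy_total / (easy_total + hard_total)

print(f"easy negatives: {easy_total:.1f}")
print(f"hard positive:  {hard_total:.1f}")
print(f"share of total loss from easy negatives: {easy_share:.1%}")
```

Each easy negative contributes only about 0.105, yet together they account for roughly 98% of the total loss, which is exactly the imbalance Focal Loss sets out to fix.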

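To tackle this problem, the authors added a modulating factor (1 − p_t)^γ, with a tunable focusing parameter γ ≥ 0, to the Cross-Entropy Loss. Here p_t is the probability the model assigns to the ground-truth class: p_t = p if the label is y = 1 and p_t = 1 − p otherwise, where p is the predicted probability of the positive class. Therefore, the formula of Focal Loss became:

$$\mathrm{FL}(p_t) = -(1 - p_t)^{\gamma}\,\log(p_t)$$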

If you notice, the negation and the log term make up the Cross-Entropy Loss, and γ is the tunable parameter. For a misclassified sample with a low p_t, the modulating factor is close to 1, so the loss is essentially unchanged. When p_t is high (an easily classified sample), the factor goes to 0, and the loss for that sample is down-weighted. This is how Focal Loss differentiates between easy and hard samples: with γ = 2, for instance, an example with p_t = 0.9 receives 100 times less loss than it would under Cross Entropy.
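A minimal Python sketch of the formula, comparing it with Cross Entropy for a few values of p_t. γ = 2 is used as the example value; the helper names are illustrative.

```python
import math


def cross_entropy(p_t: float) -> float:
    """Cross-entropy written in terms of p_t, the probability of the true class."""
    return -math.log(p_t)


def focal_loss(p_t: float, gamma: float = 2.0) -> float:
    """FL(p_t) = -(1 - p_t)**gamma * log(p_t); gamma = 0 recovers cross-entropy."""
    return -((1.0 - p_t) ** gamma) * math.log(p_t)


for p_t in (0.1, 0.5, 0.9, 0.99):
    ce, fl = cross_entropy(p_t), focal_loss(p_t)
    print(f"p_t={p_t:<5} CE={ce:.4f} FL={fl:.6f} CE/FL={ce / fl:.1f}")
```

The CE/FL ratio is simply 1/(1 − p_t)^γ: close to 1 for hard examples and growing rapidly as p_t approaches 1.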

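Finally, there is one more addition to the loss function: the balancing factor α. α is a weighting factor that can be set from the inverse class frequency or treated as a hyperparameter tuned by cross-validation. It enters the loss as α_t, defined analogously to p_t (α for the foreground class and 1 − α for the background). The Focal Loss formula now becomes:

$$\mathrm{FL}(p_t) = -\alpha_t\,(1 - p_t)^{\gamma}\,\log(p_t)$$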
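Here is a hedged sketch of the α-balanced version for a single binary (per-class sigmoid) prediction, written in terms of the raw probability p and label y rather than p_t. The defaults α = 0.25 and γ = 2 are the values the authors report working best; the scene at the bottom is hypothetical.

```python
import math


def alpha_balanced_focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Binary focal loss for one prediction.

    p: predicted probability of the positive (foreground) class, in (0, 1).
    y: ground-truth label, 1 for foreground and 0 for background.
    """
    p_t = p if y == 1 else 1.0 - p              # probability assigned to the true class
    alpha_t = alpha if y == 1 else 1.0 - alpha  # class-balancing weight
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


# Hypothetical scene: 1,000 easy negatives (p = 0.1, correctly "background")
# and one hard positive that is badly misclassified (p = 0.1).
easy = 1000 * alpha_balanced_focal_loss(0.1, 0)
hard = alpha_balanced_focal_loss(0.1, 1)
print(f"easy negatives: {easy:.3f}, hard positive: {hard:.3f}")
```

Under plain Cross Entropy, those 1,000 easy negatives would contribute about 105 (1000 × −log 0.9); here their combined loss drops below 1, so the single hard positive now carries a meaningful share of the total.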

The authors also noted, through experiments, that the exact form of the Focal Loss is not crucial: other functions that down-weight easy samples relative to hard ones in a similar way can work just as well.

RetinaNet

RetinaNet is a single unified network composed of a backbone and two task-specific subnetworks. The backbone is a fully convolutional network responsible for computing convolutional feature maps over the entire image. The first subnet performs convolutional object classification on the backbone’s output, and the second performs convolutional bounding box regression on it. The overall architecture looks quite simple, but the authors tuned each component to improve the results.

Feature Pyramid Network Backbone

The authors used the Feature Pyramid Network (FPN) proposed by T.-Y. Lin et al. as the backbone. FPN builds a rich, multi-scale feature pyramid from a single input image by augmenting a standard convolutional network with a top-down pathway and lateral connections. For RetinaNet, the FPN is built on top of a ResNet architecture. The pyramid has 5 levels, P₃ to P₇, where level P_l has a resolution 2ˡ times lower than the input image (a stride of 2ˡ), with l ranging from 3 to 7.
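The stride relationship can be illustrated with a small Python helper that estimates the spatial size of each pyramid level for a given input. It assumes every stride-2 step rounds up, as a padded stride-2 convolution does; exact sizes may differ by a pixel between implementations, and the 800×800 input is just an example.

```python
import math


def pyramid_shapes(height: int, width: int, levels=range(3, 8)):
    """Approximate (height, width) of each FPN level P_l, whose stride is 2**l.

    Assumption: each stride-2 step rounds up, so the size is ceil(input / 2**l).
    """
    return {f"P{l}": (math.ceil(height / 2**l), math.ceil(width / 2**l)) for l in levels}


for name, (h, w) in pyramid_shapes(800, 800).items():
    print(name, h, w)
```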

Anchors

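RetinaNet uses translation-invariant anchor boxes with areas ranging from 32² on P₃ to 512² on P₇. At each pyramid level, anchors come in three aspect ratios {1:2, 1:1, 2:1}, and to obtain denser scale coverage, each of them is added at three sizes {2⁰, 2^(1/3), 2^(2/3)} relative to the level's base size. This gives A = 3 × 3 = 9 anchors per spatial location on each pyramid level. Each anchor is assigned a length-K one-hot vector of classification targets, where K is the number of object classes, and a 4-vector of box regression targets.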
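A minimal sketch of how the nine anchor shapes for one level could be generated. Aspect ratios are expressed here as height/width, and the helper name `anchor_shapes` is hypothetical; real implementations also place a copy of these shapes at every spatial location of the level.

```python
import math


def anchor_shapes(base_size: float,
                  scales=(2 ** 0, 2 ** (1 / 3), 2 ** (2 / 3)),
                  aspect_ratios=(0.5, 1.0, 2.0)):
    """Return the (width, height) of the len(scales) * len(aspect_ratios) anchors of one level.

    base_size: side of the square base anchor (32 on P3 up to 512 on P7).
    aspect_ratios: height / width, i.e. 1:2, 1:1 and 2:1 (an assumed convention).
    """
    shapes = []
    for scale in scales:
        area = (base_size * scale) ** 2
        for ratio in aspect_ratios:
            width = math.sqrt(area / ratio)  # keeps width * height == area
            height = width * ratio
            shapes.append((width, height))
    return shapes


base_sizes = {f"P{l}": 2 ** (l + 2) for l in range(3, 8)}  # 32, 64, 128, 256, 512
p3_anchors = anchor_shapes(base_sizes["P3"])
print(len(p3_anchors))  # 9
```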

Classification Subnet

The classification subnet is a small FCN attached to each pyramid level, and its parameters are shared across all levels. With C = 256 channels, A = 9 anchors, and K object classes, the input feature map is passed through four 3×3 conv layers, each with C filters and each followed by a ReLU activation, and then through a final 3×3 conv layer with K×A filters. Finally, sigmoid activations are applied to produce K×A binary predictions per spatial location, so the output has shape (W, H, K×A), where W and H are the width and height of the feature map. The authors also deliberately chose not to share the classification subnet's parameters with the regression subnet, to obtain better results.
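The output shape and parameter count of the classification subnet follow directly from this description. The sketch below does the arithmetic in plain Python; K = 80 (the number of COCO classes) and the 100×100 feature map are assumptions chosen for illustration, and the helper names are hypothetical.

```python
def head_output_shape(width: int, height: int, num_anchors: int, values_per_anchor: int):
    """Output shape (W, H, values_per_anchor * A) of a RetinaNet head on one pyramid level."""
    return (width, height, values_per_anchor * num_anchors)


def conv_params(in_ch: int, out_ch: int, k: int = 3) -> int:
    """Weights plus biases of a k x k convolution."""
    return k * k * in_ch * out_ch + out_ch


def head_param_count(out_channels: int, channels: int = 256, num_convs: int = 4) -> int:
    """Four 3x3 conv layers with C filters, then a final 3x3 conv with out_channels filters."""
    return num_convs * conv_params(channels, channels) + conv_params(channels, out_channels)


K, A = 80, 9  # assumption: K = 80 classes as on COCO; A = 9 anchors per location
print(head_output_shape(100, 100, A, K))  # (100, 100, 720)
print(head_param_count(K * A))            # 4019920
```

Because the subnet's weights are shared across P₃ to P₇, this roughly 4M parameter count is the same regardless of how many pyramid levels it runs on; only the spatial size of the output changes.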

Box Regression Subnet

If you refer to Figure 5, you can see that the classification subnet and the regression subnet receive the feature map simultaneously, so they operate in parallel. For regression, another small FCN is attached to each pyramid level to regress the offset from each anchor box to a nearby ground-truth object, if one exists. Its structure is the same as that of the classification subnet, except that the final layer outputs 4×A channels instead of K×A. The number 4 corresponds to the four parameters that describe the offset between an anchor and its ground-truth box: the shifts of the center coordinates (x and y) and the changes in width and height. Although the two subnets share a common structure, they use separate parameters.
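The text does not spell out how the four offsets are computed. A common choice, sketched below as an assumption, is the standard R-CNN box parameterization: center shifts scaled by the anchor size, and log-space ratios for width and height. Boxes are represented as (center x, center y, width, height); some implementations additionally scale these targets by fixed weights, which is omitted here.

```python
import math


def encode(anchor, gt):
    """Regression targets (tx, ty, tw, th) for one anchor / ground-truth pair.

    Boxes are (cx, cy, w, h). Assumes the standard R-CNN parameterization.
    """
    ax, ay, aw, ah = anchor
    gx, gy, gw, gh = gt
    return ((gx - ax) / aw, (gy - ay) / ah, math.log(gw / aw), math.log(gh / ah))


def decode(anchor, deltas):
    """Invert encode(): apply predicted offsets to an anchor to recover a box."""
    ax, ay, aw, ah = anchor
    tx, ty, tw, th = deltas
    return (ax + tx * aw, ay + ty * ah, aw * math.exp(tw), ah * math.exp(th))


anchor = (50.0, 50.0, 32.0, 32.0)
gt = (54.0, 46.0, 40.0, 30.0)
deltas = encode(anchor, gt)
print(deltas)
print(decode(anchor, deltas))
```

At inference time, the regression subnet's 4×A outputs play the role of `deltas` and are decoded against each anchor in the same way.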

Overall flow of the model

Let’s take a sample image and feed it to the network. The first stop is the FPN, where the image is processed at multiple scales (5 levels, P₃ to P₇), producing a feature map at each level. Each of these feature maps is then fed to the next set of components: the classification subnet and the regression subnet. The classification subnet processes each feature map and outputs a tensor of shape (W, H, K×A); in parallel, the regression subnet processes the same feature map and outputs a tensor of shape (W, H, 4×A). Both outputs are then sent to the loss function. RetinaNet's multi-task loss combines the α-balanced Focal Loss for classification with a smooth L1 loss computed on the 4×A-channel output of the regression subnet. The loss is then backpropagated through the network. That is the overall flow of the model. Next, let’s see how it performed compared to other Object Detection models.
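To make the multi-task loss concrete, here is a toy Python version over a flat list of anchors. It assumes ignored anchors (IoU in [0.4, 0.5)) have already been filtered out and uses a smooth L1 threshold β = 1.0 as a placeholder. Both terms are divided by the number of foreground anchors: the paper normalizes the Focal Loss this way, and applying the same normalization to the box loss is an assumption of this sketch.

```python
import math


def smooth_l1(x: float, beta: float = 1.0) -> float:
    """Smooth L1 loss on one regression residual (beta = 1.0 is an assumed default)."""
    ax = abs(x)
    return 0.5 * ax * ax / beta if ax < beta else ax - 0.5 * beta


def focal_loss(p: float, y: int, alpha: float = 0.25, gamma: float = 2.0) -> float:
    """Alpha-balanced binary focal loss for one sigmoid output."""
    p_t = p if y == 1 else 1.0 - p
    alpha_t = alpha if y == 1 else 1.0 - alpha
    return -alpha_t * (1.0 - p_t) ** gamma * math.log(p_t)


def retinanet_loss(cls_probs, cls_targets, box_preds, box_targets):
    """Toy multi-task loss.

    cls_probs / cls_targets: per-anchor lists of K sigmoid outputs and one-hot labels
    (an all-zero label marks a background anchor).
    box_preds / box_targets: per-anchor 4-tuples of regression offsets.
    """
    num_pos = sum(1 for labels in cls_targets if any(labels))
    norm = max(num_pos, 1)  # avoid dividing by zero when an image has no objects
    cls_loss = 0.0
    for probs, labels in zip(cls_probs, cls_targets):
        cls_loss += sum(focal_loss(p, y) for p, y in zip(probs, labels))
    box_loss = 0.0
    for pred, target, labels in zip(box_preds, box_targets, cls_targets):
        if any(labels):  # box regression is only supervised for foreground anchors
            box_loss += sum(smooth_l1(d - t) for d, t in zip(pred, target))
    return (cls_loss + box_loss) / norm
```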

How did it fare?

From the table above, it is evident that RetinaNet with a ResNeXt-101 backbone outperforms every two-stage and one-stage model proposed before it. The only metric where it falls short is AP on large objects, where it trails the best competing model by 0.9 points.

I want to implement RetinaNet, what should I look out for?

Certainly, every problem statement is different, and some of the parameters suggested by the authors might not work for you. But the authors ran rigorous experiments to arrive at these settings, so I expect them to work well on general problems, or at the very least to serve as a baseline that you can tune further.

- γ = 2 works well in practice, and RetinaNet is relatively robust to γ ∈ [0.5, 5]. α, the weight assigned to the rare class, also has a stable range, but it interacts with γ, so the two need to be selected together. In general, α should be decreased slightly as γ is increased. The configuration that worked best for the authors was γ = 2, α = 0.25.

- Anchors are assigned to ground-truth object boxes if their intersection-over-union (IoU) is at least 0.5, and to background if their IoU is in [0, 0.4). As each anchor is assigned to at most one object box, the corresponding entry in its length-K label vector is set to 1 and all other entries to 0. An anchor that is left unassigned, which happens when its overlap is in [0.4, 0.5), is ignored during training.

- To improve speed, at most the 1k top-scoring predictions per FPN level are decoded into boxes, after thresholding detector confidence at 0.05. The top predictions from all levels are then merged, and non-maximum suppression with a threshold of 0.5 is applied to yield the final detections.

- All new conv layers except the final one in the RetinaNet subnets are initialized with bias b = 0 and a Gaussian weight fill with σ = 0.01. For the final conv layer of the classification subnet, the bias is initialized to b = − log((1 − π)/π), where π specifies that, at the start of training, every anchor should be labelled as foreground with a confidence of ∼π. The value of π is 0.01 in all experiments. This prior keeps the huge number of background anchors from producing a large, destabilizing loss in the first iterations of training.
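The anchor-assignment rule, the inference-time filtering, and the prior bias from the list above can be sketched in plain Python. This is an illustration under simplifying assumptions, not the reference implementation: boxes are (x1, y1, x2, y2) tuples, NMS is applied class-agnostically to all merged predictions, and all helper names are hypothetical.

```python
import math


def iou(a, b):
    """Intersection-over-union of two boxes given as (x1, y1, x2, y2)."""
    inter_w = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    inter_h = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = inter_w * inter_h
    union = (a[2] - a[0]) * (a[3] - a[1]) + (b[2] - b[0]) * (b[3] - b[1]) - inter
    return inter / union if union > 0 else 0.0


def assign_anchor(anchor, gt_boxes, fg_iou=0.5, bg_iou=0.4):
    """Index of the matched ground-truth box, -1 for background, or None if ignored."""
    if not gt_boxes:
        return -1
    best_iou, best_idx = max((iou(anchor, gt), i) for i, gt in enumerate(gt_boxes))
    if best_iou >= fg_iou:
        return best_idx
    if best_iou < bg_iou:
        return -1
    return None  # IoU in [0.4, 0.5): ignored during training


def nms(boxes, scores, iou_thresh=0.5):
    """Greedy NMS: keep the best box, drop boxes that overlap a kept box by more than iou_thresh."""
    keep = []
    for i in sorted(range(len(boxes)), key=lambda k: scores[k], reverse=True):
        if all(iou(boxes[i], boxes[j]) <= iou_thresh for j in keep):
            keep.append(i)
    return keep


def select_detections(per_level, score_thresh=0.05, top_k=1000, iou_thresh=0.5):
    """per_level: list of (boxes, scores) pairs, one per FPN level."""
    merged = []
    for boxes, scores in per_level:
        candidates = [(s, b) for b, s in zip(boxes, scores) if s > score_thresh]
        candidates.sort(key=lambda c: c[0], reverse=True)
        merged.extend(candidates[:top_k])  # at most top_k predictions per level
    boxes = [b for _, b in merged]
    scores = [s for s, _ in merged]
    return [(boxes[i], scores[i]) for i in nms(boxes, scores, iou_thresh)]


def prior_bias(pi=0.01):
    """Final classification-conv bias so that sigmoid(b) == pi at initialization."""
    return -math.log((1 - pi) / pi)
```

With π = 0.01 the prior bias is about −4.6, so every anchor starts out with a foreground probability of 0.01.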

Summary

To summarize, RetinaNet was a significant step forward for the Object Detection field when it was released. The idea of a one-stage detector outperforming two-stage detectors seemed almost surreal at the time, yet RetinaNet made it happen. Since then, numerous new algorithms have been devised to further improve upon these results, and as I discover them, I plan to document them in new articles. So, stay tuned for the next one!

I hope you liked my article and in case you want to contact me:

- My website: https://preeyonujboruah.tech/

- Github Profile: https://github.com/preeyonuj

- Previous Medium Article: https://towardsdatascience.com/the-implications-of-information-theory-in-machine-learning-707132a750e7

- LinkedIn Profile: www.linkedin.com/in/pb1807

References

[1] Focal Loss for Dense Object Detection — https://openaccess.thecvf.com/content_ICCV_2017/papers/Lin_Focal_Loss_for_ICCV_2017_paper.pdf