Removing Duplicate or Similar Images in Python

When training a machine learning model that takes images as input, it is important to check the dataset for duplicate or near-duplicate pictures, since they over-represent some examples and can leak between the training and test splits. Here is one of the most efficient ways to do this.

Introduction

The most naive approach to detecting duplicate images is to compare them pixel by pixel and check that all values are equal. However, this becomes very inefficient with a large number of images, since every image has to be compared with every other one: n images require n(n-1)/2 comparisons.
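For reference, the naive approach could look like the minimal sketch below. It assumes Pillow (`PIL`) for handling images, and the function names `pixels_identical` and `naive_duplicates` are hypothetical. The pairwise loop is what makes the method scale poorly.

```python
from itertools import combinations

from PIL import Image


def pixels_identical(a: Image.Image, b: Image.Image) -> bool:
    """Return True only if both images have the same size, mode and pixel values."""
    if a.size != b.size or a.mode != b.mode:
        return False
    # tobytes() exposes the raw pixel buffer, so this compares every pixel value.
    return a.tobytes() == b.tobytes()


def naive_duplicates(images: dict) -> list:
    """Check every pair of images: n images need n * (n - 1) / 2 comparisons."""
    return [
        (name_a, name_b)
        for (name_a, img_a), (name_b, img_b) in combinations(images.items(), 2)
        if pixels_identical(img_a, img_b)
    ]


# Hypothetical in-memory images standing in for files loaded with Image.open().
images = {
    "a.png": Image.new("RGB", (64, 64), (255, 0, 0)),
    "b.png": Image.new("RGB", (64, 64), (255, 0, 0)),
    "c.png": Image.new("RGB", (64, 64), (254, 0, 0)),  # one channel off by one level
}
print(naive_duplicates(images))  # [('a.png', 'b.png')]
```

Note that `c.png` is not reported even though it is visually indistinguishable from the other two, which is the limitation the rest of this post addresses.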

A second, very common approach is to compute a cryptographic hash of each image with a popular algorithm such as MD5 or SHA-1 and check whether the hashes match. This is much more efficient, because each image is hashed only once and comparing short hashes is cheap, but it only detects identical images. If even a single pixel differs (due to a slight change in lighting, for example), the hashes will be completely different.
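A minimal sketch of this exact-match approach with Python's standard `hashlib` module follows; `file_digest` and `exact_duplicate_groups` are hypothetical helper names. Each file is read once and duplicates are found with a dictionary lookup instead of pairwise comparisons.

```python
import hashlib
from collections import defaultdict
from pathlib import Path


def file_digest(path: Path, algorithm: str = "sha1", chunk_size: int = 1 << 16) -> str:
    """Hash a file's raw bytes in chunks, so large images need not fit in memory."""
    h = hashlib.new(algorithm)  # e.g. "md5" or "sha1"
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            h.update(chunk)
    return h.hexdigest()


def exact_duplicate_groups(paths, algorithm: str = "sha1") -> list:
    """Group paths whose files have byte-for-byte identical contents."""
    groups = defaultdict(list)
    for path in map(Path, paths):
        groups[file_digest(path, algorithm)].append(path)
    # Only groups with more than one file contain duplicates.
    return [group for group in groups.values() if len(group) > 1]
```

For example, `exact_duplicate_groups(Path("dataset").glob("*.jpg"))` would list the groups of identical files in a (hypothetical) `dataset` folder.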

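The reason is that cryptographic hash functions are designed so that any change to the input, however small, produces an unrelated output (the avalanche effect). The same data always produces the same hash, but data that differs in a single bit produces a completely different one. Moreover, these hashes are computed over the raw file bytes, so even re-saving the same picture in another format or with different metadata changes the hash.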
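This sensitivity is easy to observe with Python's built-in `hashlib`. In the sketch below, the byte string is made-up stand-in data rather than a real image, and `differing_bits` is a hypothetical helper. Changing one value by a single level typically flips around half of the digest bits.

```python
import hashlib


def differing_bits(a: bytes, b: bytes) -> int:
    """Count the bits that differ between two digests of equal length."""
    return sum(bin(x ^ y).count("1") for x, y in zip(a, b))


# Hypothetical stand-in for raw pixel data: 64 grayscale values.
original = bytes(range(64))
# The same "image" with a single pixel one gray level brighter.
tweaked = bytearray(original)
tweaked[10] += 1
tweaked = bytes(tweaked)

for name in ("md5", "sha1"):
    d1 = hashlib.new(name, original).digest()
    d2 = hashlib.new(name, tweaked).digest()
    print(f"{name}: {differing_bits(d1, d2)} of {len(d1) * 8} bits differ")
```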

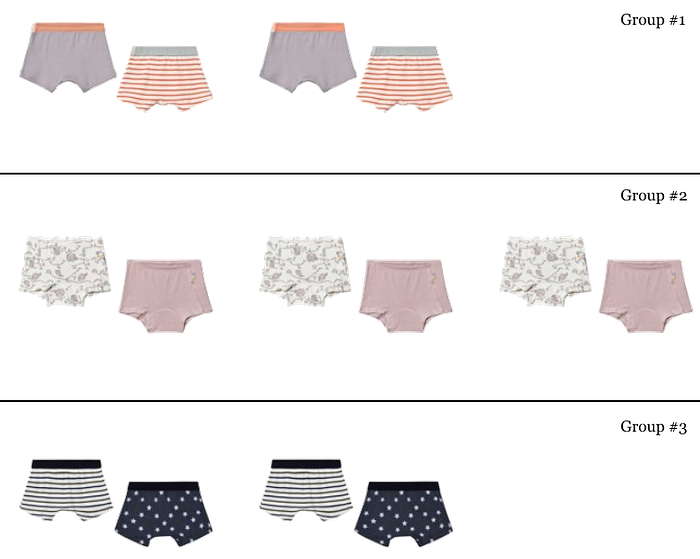

For our use case, it would make more sense for similar images to have similar hashes, right?

That is precisely the goal of image hashing algorithms. They focus on the structure of the image and its visual appearance to calculate the hash. Depending on your use case, some algorithms will be better than others.
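To make the idea concrete, here is a toy version of the simplest image hash, the average hash, in plain Python. It is an illustration, not ImageHash's implementation: it assumes the image has already been converted to grayscale and shrunk to a small grid, and the function names are hypothetical. Similarity between hashes is measured with the Hamming distance, the number of differing bits.

```python
def average_hash(gray: list) -> int:
    """Toy average hash: one bit per pixel, set when the pixel is brighter than the mean.

    `gray` is assumed to be an already downscaled grayscale image given as rows
    of 0-255 values (for example 8x8).
    """
    pixels = [p for row in gray for p in row]
    mean = sum(pixels) / len(pixels)
    bits = 0
    for p in pixels:
        bits = (bits << 1) | int(p > mean)
    return bits


def hamming(a: int, b: int) -> int:
    """Number of differing bits; a small distance means visually similar images."""
    return bin(a ^ b).count("1")


# Hypothetical 4x4 grayscale thumbnails: dark left half, bright right half.
image = [[10, 10, 200, 200] for _ in range(4)]
# The same picture, slightly brighter, with one noisy pixel.
similar = [[p + 15 for p in row] for row in image]
similar[0][0] = 30
# A genuinely different picture: the halves are swapped.
flipped = [row[::-1] for row in image]

print(hamming(average_hash(image), average_hash(similar)))  # 0
print(hamming(average_hash(image), average_hash(flipped)))  # 16
```

Unlike a cryptographic hash, the brightness change and the noisy pixel leave the hash untouched, while a structural change flips every bit.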

For instance, if you want images with the same shape but different colors to get the same hash, you could use perceptual or difference hashing, since both compute the hash from a grayscale version of the image.
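Difference hashing records, for each pair of horizontally adjacent pixels, whether brightness increases from left to right. The toy version below (plain Python, hypothetical names, assuming an already downscaled grayscale input) shows why a recolored shape can keep its hash: only the ordering of neighboring gray levels matters. This is an idealized case; a real color change does not always preserve that ordering after grayscale conversion.

```python
def difference_hash(gray: list) -> int:
    """Toy difference hash: one bit per pair of horizontally adjacent pixels.

    A bit is 1 when brightness increases from left to right, so the hash encodes
    the image's gradients (its shapes) rather than absolute intensities.
    `gray` is assumed to be an already downscaled grayscale image whose width is
    one pixel larger than its height (9x8 in the usual recipe).
    """
    bits = 0
    for row in gray:
        for left, right in zip(row, row[1:]):
            bits = (bits << 1) | int(right > left)
    return bits


# Hypothetical 4x3 grayscale thumbnail of a simple shape.
shape = [
    [20, 80, 80, 20],
    [20, 80, 160, 20],
    [20, 20, 20, 20],
]
# The same shape in a darker, lower-contrast color: every gray level changes,
# but their left-to-right ordering is preserved.
recolored = [[v // 2 + 60 for v in row] for row in shape]

print(bin(difference_hash(shape)))  # 0b100110000
print(difference_hash(shape) == difference_hash(recolored))  # True
```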

Keep in mind that you can always use a larger hash size, which captures more detail, so fewer images will end up with the same hash. The right size depends on the use case and has to be tuned.

Implementation

In Python, I recommend the ImageHash library, which supports several image hashing algorithms: average, perceptual, difference, wavelet, HSV color, and crop-resistant hashing.

It can be easily installed with the following command:

```bash
pip install imagehash
```

As stated above, the hash size of each algorithm can be adjusted: the larger it is, the more sensitive the hash is to changes. Based on my experience, I recommend using the dhash algorithm with a hash size of 8 together with the z-transformation preprocessing available in the library's repository.

The code needed to load an image, transform it, and calculate the d-hash is encapsulated in a single function, dhash_z_transformed.

To apply it in a data pipeline, simply call dhash_z_transformed with the path of each image you want to hash. If two images produce the same hash, they are very similar. You can then remove the duplicates by keeping one copy or none, depending on your use case.
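The grouping step of such a pipeline could be sketched as follows. `deduplicate` is a hypothetical helper that accepts any hashing callable, such as the dhash_z_transformed function mentioned above; the `keep_one` flag chooses between keeping the first copy of each duplicate group and dropping the whole group.

```python
from collections import defaultdict


def deduplicate(paths, hash_fn, keep_one: bool = True) -> list:
    """Return the paths to keep after grouping images by their hash.

    hash_fn: any callable mapping a path to a hash (e.g. an ImageHash object).
    keep_one: keep the first image of each duplicate group if True,
              otherwise drop every image that has a duplicate.
    """
    groups = defaultdict(list)
    for path in paths:
        # str() gives a stable key, e.g. the hex form of an ImageHash.
        groups[str(hash_fn(path))].append(path)
    kept = []
    for group in groups.values():
        if len(group) == 1 or keep_one:
            kept.append(group[0])
    return kept
```

With real images this would be called as `deduplicate(image_paths, dhash_z_transformed)`, assuming both names are defined.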

I encourage you to tune the sensitivity by changing hash_size until the results suit your use case.

Conclusions

In this short post, I have presented an important step to take into account when training machine learning models that receive images as input.

Rather than detecting only identical images, I have introduced image hashing algorithms that also identify similar ones (the same image at a different size, slight changes in brightness, and so on), and shown how to compute these hashes in Python with the ImageHash library.
