Powerful Feature Selection with Recursive Feature Elimination (RFE) in Sklearn

Get the same model performance even after dropping 93 features

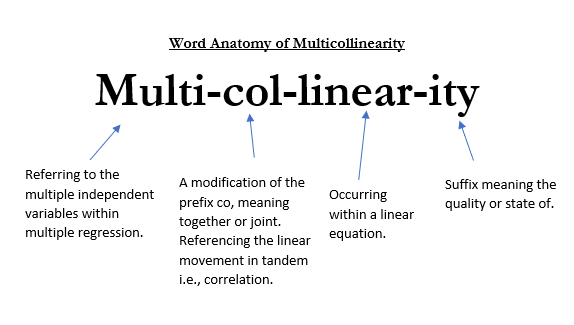

Basic feature selection methods mostly look at the individual properties of features and at how features relate to each other. Variance thresholding and pairwise correlation filtering, for example, remove unnecessary features based on their variance or on the correlation between them. A more pragmatic approach, however, selects features based on how they affect a particular model's performance. One such technique offered by Sklearn is Recursive Feature Elimination (RFE). It reduces model complexity by repeatedly fitting a model and removing the least important feature (or features) until a specified number of features is left.
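To make the elimination loop concrete, here is a minimal hand-rolled sketch of the idea. It is not Sklearn's implementation. It assumes a linear model whose absolute coefficients serve as importance scores (only meaningful when features are on comparable scales) and synthetic data from `make_regression`; the helper name `manual_rfe` is hypothetical.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.linear_model import LinearRegression


def manual_rfe(X, y, n_features_to_select):
    """Hypothetical helper: greedy backward elimination, one feature per round."""
    remaining = list(range(X.shape[1]))  # column indices still in play
    while len(remaining) > n_features_to_select:
        # Refit on the surviving columns only
        model = LinearRegression().fit(X[:, remaining], y)
        # Assumption: the smallest absolute coefficient marks the weakest feature
        weakest = int(np.argmin(np.abs(model.coef_)))
        # coef_ is aligned with `remaining`, so pop by the same position
        remaining.pop(weakest)
    return remaining


# Synthetic, noise-free data: 10 features, only 3 of which drive the target
X, y, true_coef = make_regression(
    n_samples=200, n_features=10, n_informative=3, coef=True, random_state=0
)
kept = manual_rfe(X, y, n_features_to_select=3)
print("kept:", sorted(kept))
print("informative:", np.flatnonzero(true_coef).tolist())
```

Each pass refits the model on the survivors before ranking them again, which is what makes the procedure "recursive" rather than a one-shot ranking.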

It is one of the most popular feature selection algorithms thanks to its flexibility and ease of use. The algorithm can wrap around any model that exposes feature importances (for example, through `coef_` or `feature_importances_`), and it produces a subset of features that keeps model performance high. By completing this tutorial, you will learn how to use its implementation in Sklearn.
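The sketch below shows how Sklearn's `RFE` class wraps different estimators with the same interface. It assumes synthetic data from `make_regression` and an arbitrary target of three features; the linear model is ranked by its `coef_` and the decision tree by its `feature_importances_`.

```python
import numpy as np
from sklearn.datasets import make_regression
from sklearn.feature_selection import RFE
from sklearn.linear_model import LinearRegression
from sklearn.tree import DecisionTreeRegressor

# Synthetic data: 10 features, only 3 of which actually drive the target
X, y, true_coef = make_regression(
    n_samples=200, n_features=10, n_informative=3, coef=True, random_state=0
)

selected = {}
for estimator in (LinearRegression(), DecisionTreeRegressor(random_state=0)):
    # step=1 removes one feature per iteration until 3 remain
    rfe = RFE(estimator=estimator, n_features_to_select=3, step=1)
    rfe.fit(X, y)
    name = type(estimator).__name__
    # support_ is a boolean mask over the original columns
    selected[name] = np.flatnonzero(rfe.support_).tolist()
    # ranking_ is 1 for kept features; higher numbers were eliminated earlier
    print(name, "kept:", selected[name], "ranking:", rfe.ranking_)

# transform() keeps only the columns chosen by the last fitted RFE (the tree here)
X_reduced = rfe.transform(X)
print(X_reduced.shape)
```

Because this data is noise-free, the linear model's selection matches the three truly informative columns; the tree's picks may differ, since its importances depend on the splits it happens to make.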

The idea behind Recursive Feature Elimination

Consider this subset of the Ansur Male dataset: