For anyone starting on a serverless journey, AWS Lambda is just amazing. You write the code, invoke the function, and it does the job for you. But hold on: is it really performing as expected? What about the execution time? How do I ensure that my Lambda function performs optimally at minimum cost? This is the question everyone ponders when they start to productionize Lambda functions. Believe me, the questions I get about Lambda functions are just crazy:

- Why allocate more memory when the function is CPU-intensive?

- What is the CPU utilization of the Lambda function?

- Increasing memory increases costs. Keep a check on how much memory you allocate.

What I found confusing when I first began using Lambda was that AWS lets you allocate memory to a Lambda function, but there is no option to allocate CPU. Instead, CPU power scales in proportion to the memory you configure. To understand how this works, let's take a simple example and run it with different memory limits.
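AWS documents that Lambda allocates CPU power in proportion to configured memory, with 1,769 MB corresponding to roughly one vCPU, and that memory can be set between 128 MB and 10,240 MB. The sketch below turns that into a back-of-the-envelope estimate; it assumes the scaling is strictly linear, so treat the numbers as approximations rather than exact allocations. The helper name `approx_vcpus` is hypothetical.

```python
# Rough model (assumption: strictly linear scaling): AWS states that about
# 1,769 MB of memory corresponds to one vCPU and that CPU scales with memory.
MB_PER_VCPU = 1769
MIN_MEMORY_MB = 128
MAX_MEMORY_MB = 10240


def approx_vcpus(memory_mb: int) -> float:
    """Approximate vCPU share for a given Lambda memory setting."""
    if not MIN_MEMORY_MB <= memory_mb <= MAX_MEMORY_MB:
        raise ValueError(
            f"memory must be between {MIN_MEMORY_MB} and {MAX_MEMORY_MB} MB"
        )
    return memory_mb / MB_PER_VCPU


for mb in (128, 256, 512, 1024):
    print(f"{mb:>5} MB -> ~{approx_vcpus(mb):.2f} vCPU")
```

For a single-threaded, CPU-bound function like the prime-number example, this model suggests that doubling memory should roughly halve execution time until the function reaches about one full vCPU; beyond that, additional vCPUs only help code that actually runs in parallel.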

I wrote a simple function that prints the prime numbers between the `lower` and `upper` values. The code is below:

import jsonprint('Loading function')def lambda_handler(event, context):

lower = 2

upper = 50000

print("Prime numbers between", lower, "and", upper)

for num in range(lower, upper + 1):

if(num > 1):

for i in range(2, num):

if (num % i) == 0:

break

else:

print(num)return {

'statusCode': 200,

'body': json.dumps("Function executed successfully")

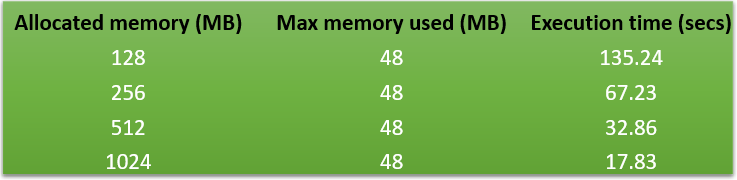

}I ran the above function with 4 memory limits (128 MB, 256 MB, 512 MB, and 1024 MB). Below are the results:

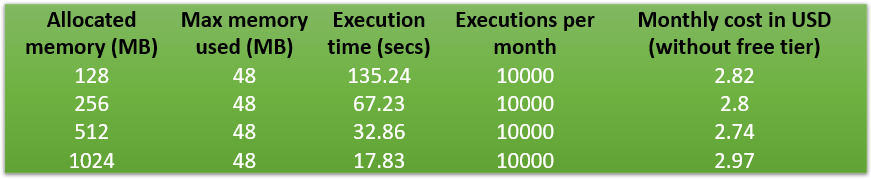

As you can see, "Max memory used" stays at around 48 MB. What is interesting, however, is the "Execution time". As I increased the memory, the execution time came down, from 135 seconds at 128 MB to 17 seconds at 1024 MB. Since the function is CPU-bound and its memory usage did not change, this shows that more CPU is allocated as memory increases. But what about the costs? Will I incur higher costs with more memory? To understand the cost implications, I used the AWS calculator to estimate costs for 10,000 monthly executions, excluding the free tier. This is what I got:

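Looking at the numbers, I was amazed. With 128 MB of memory, the execution time was around 135 seconds and the cost was $2.82. Increasing the memory to 1024 MB gave me a drastic improvement in execution time without increasing costs much. Because Lambda bills by configured memory multiplied by duration (GB-seconds), eight times the memory at roughly one-eighth of the execution time costs about the same. So it is not always true that increasing memory increases costs.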
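The estimate can be reproduced with a few lines of arithmetic. This is a minimal sketch that ignores the free tier, as the calculator run did, and assumes the standard x86 rates in us-east-1 of $0.0000166667 per GB-second and $0.20 per million requests; prices differ by region and architecture, so check the current Lambda pricing page before relying on them. The function name `monthly_cost` is hypothetical.

```python
# Assumed rates: x86, us-east-1, first pricing tier. Verify against the
# current AWS Lambda pricing page for your region and architecture.
PRICE_PER_GB_SECOND = 0.0000166667
PRICE_PER_MILLION_REQUESTS = 0.20


def monthly_cost(memory_mb: int, duration_s: float, invocations: int) -> float:
    """Estimate monthly Lambda cost in USD, ignoring the free tier."""
    gb_seconds = (memory_mb / 1024) * duration_s * invocations
    compute = gb_seconds * PRICE_PER_GB_SECOND
    requests = invocations / 1_000_000 * PRICE_PER_MILLION_REQUESTS
    return compute + requests


for memory_mb, duration_s in [(128, 135), (1024, 17)]:
    print(f"{memory_mb:>5} MB: ${monthly_cost(memory_mb, duration_s, 10_000):.2f}")
```

Plugging in the two measured durations gives roughly $2.81 for 128 MB and $2.84 for 1024 MB, within a few cents of the calculator's figure.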

So the question is: how do I allocate optimal memory for Lambda functions? I cannot keep doing this calculation for every function I write. So I started searching online for a tool that would do it for me and find the best combination of memory, execution time, and cost. And guess what? Google, as always, helped me find the answer: there is an independent project by Alex Casalboni on GitHub that does exactly this (https://github.com/alexcasalboni/aws-lambda-power-tuning).
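For context, the manual process such a tool automates looks roughly like the sketch below: set a memory size, wait for the update to be applied, invoke the function, and read the billed duration from the `REPORT` line in the log tail. It relies on the boto3 Lambda client calls `update_function_configuration`, `get_waiter("function_updated")` and `invoke(LogType="Tail")`; the helper names are hypothetical, and the client is passed in so the sketch can be exercised without AWS credentials. It also makes a simplifying assumption: one invocation per setting, even though the first call after a configuration change is typically a cold start, so a real measurement should average several runs.

```python
import base64
import re

# Matches e.g. "Billed Duration: 17000 ms" in the REPORT line Lambda writes.
BILLED_RE = re.compile(r"Billed Duration: (\d+) ms")


def billed_duration_ms(log_result_b64: str) -> int:
    """Extract the billed duration from the base64-encoded log tail."""
    log_text = base64.b64decode(log_result_b64).decode("utf-8")
    match = BILLED_RE.search(log_text)
    if match is None:
        raise ValueError("no REPORT line found in log tail")
    return int(match.group(1))


def sweep_memory(client, function_name, memory_sizes, payload=b"{}"):
    """Invoke the function once per memory size; return {memory_mb: billed_ms}.

    `client` is expected to behave like boto3.client("lambda").
    """
    results = {}
    waiter = client.get_waiter("function_updated")
    for memory_mb in memory_sizes:
        client.update_function_configuration(
            FunctionName=function_name, MemorySize=memory_mb
        )
        # Block until the new configuration is active before measuring.
        waiter.wait(FunctionName=function_name)
        response = client.invoke(
            FunctionName=function_name, LogType="Tail", Payload=payload
        )
        results[memory_mb] = billed_duration_ms(response["LogResult"])
    return results
```

A call such as `sweep_memory(boto3.client("lambda"), "my-prime-function", [128, 256, 512, 1024])` (the function name is a placeholder) would then feed its durations into a cost calculation, which is exactly the repetitive work worth handing to a tool.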

Using AWS Lambda Power Tuning

You can read how to deploy the power tuner in the project's GitHub documentation: https://github.com/alexcasalboni/aws-lambda-power-tuning/blob/master/README-DEPLOY.md.

I went ahead and deployed it with Option 1, the AWS Serverless Application Repository. Once it was deployed, it was time to run it against my Lambda function. Again, there are multiple ways to execute it, as described in https://github.com/alexcasalboni/aws-lambda-power-tuning/blob/master/README-EXECUTE.md

I used Option 3, executing the state machine manually from the web console, with the following input:

- lambdaARN → ARN of the Lambda function under test

- powerValues → list of memory values (in MB) to test

- num → number of invocations for each configuration

- strategy → can be "cost", "speed", or "balanced". I used "balanced" in my tests.

You can read more about the other input values here: https://github.com/alexcasalboni/aws-lambda-power-tuning/blob/master/README-INPUT-OUTPUT.md
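The same input can also be submitted programmatically instead of through the console. This is a minimal sketch that builds the input from the four fields listed above and starts the state machine with the boto3 Step Functions calls `start_execution` and `describe_execution`, polling until the execution finishes. The helper names, the default `num=10`, and the ARNs you pass in are illustrative assumptions; the client is injected so the sketch runs without AWS credentials.

```python
import json
import time


def build_tuning_input(lambda_arn, power_values, num=10, strategy="balanced"):
    """Assemble the state machine input from the fields described above."""
    if strategy not in ("cost", "speed", "balanced"):
        raise ValueError(f"unsupported strategy: {strategy!r}")
    return {
        "lambdaARN": lambda_arn,
        "powerValues": list(power_values),
        "num": num,
        "strategy": strategy,
    }


def run_power_tuning(sfn_client, state_machine_arn, tuning_input, poll_seconds=5):
    """Start the tuning state machine and return its parsed JSON output.

    `sfn_client` is expected to behave like boto3.client("stepfunctions").
    """
    execution = sfn_client.start_execution(
        stateMachineArn=state_machine_arn, input=json.dumps(tuning_input)
    )
    while True:
        description = sfn_client.describe_execution(
            executionArn=execution["executionArn"]
        )
        status = description["status"]
        if status == "SUCCEEDED":
            return json.loads(description["output"])
        if status != "RUNNING":
            raise RuntimeError(f"execution ended with status {status}")
        time.sleep(poll_seconds)  # still running; wait before polling again
```

In practice, the state machine ARN comes from the stack you deployed, and `lambda_arn` is the ARN of the function under test.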

Executing the state machine gave me the following results (note: I reduced the `upper` value to 10000 for this test):

As the results show, the lowest cost is at 512 MB of memory. At 1024 MB, the cost is slightly higher, but the execution time drops drastically. I found this tool perfect for measuring the memory requirements of Lambda functions. You can experiment with different input values to suit your requirements and then make a decision, rather than settling on an ad hoc memory allocation and hoping things work properly.
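The trade-off between cheapest and fastest is what the `strategy` setting decides. Here is a simplified sketch of how a winner could be chosen from measured results. The normalize-and-weight formula for "balanced" and its `weight` parameter are illustrative assumptions, not necessarily the exact formula the tool uses, and `Result` and `pick_best` are hypothetical names. The sample data are the two points from the first experiment, with monthly costs estimated from GB-seconds at an assumed x86 us-east-1 rate for 10,000 invocations.

```python
from dataclasses import dataclass


@dataclass
class Result:
    memory_mb: int
    duration_s: float  # average duration per invocation
    cost_usd: float    # any consistent cost unit (per invocation or per month)


def pick_best(results, strategy="balanced", weight=0.5):
    """Choose a configuration: cheapest, fastest, or a weighted trade-off.

    For "balanced", cost and duration are each divided by their maximum so
    both lie in (0, 1], then combined as weight*cost + (1-weight)*duration.
    """
    if strategy == "cost":
        return min(results, key=lambda r: r.cost_usd)
    if strategy == "speed":
        return min(results, key=lambda r: r.duration_s)
    if strategy == "balanced":
        max_cost = max(r.cost_usd for r in results)
        max_duration = max(r.duration_s for r in results)
        return min(
            results,
            key=lambda r: weight * r.cost_usd / max_cost
            + (1 - weight) * r.duration_s / max_duration,
        )
    raise ValueError(f"unknown strategy: {strategy!r}")


# Durations from the first experiment; costs are estimates, not measurements.
measured = [Result(128, 135.0, 2.81), Result(1024, 17.0, 2.84)]
for s in ("cost", "speed", "balanced"):
    print(s, "->", pick_best(measured, s).memory_mb, "MB")
```

On these two points, "cost" picks 128 MB, "speed" picks 1024 MB, and "balanced" also picks 1024 MB, because the small cost increase is far outweighed by the drop in duration.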