Is Learning Rate Useful in Artificial Neural Networks?

This article explains why we need the learning rate and whether it is useful for training an artificial neural network. Using very simple Python code for a single layer perceptron, we will change the learning rate value to see its effect.

Introduction

The learning rate is an obstacle for newcomers to artificial neural networks. I have been asked many times about the effect of the learning rate on the training of artificial neural networks (ANNs). Why do we use a learning rate? What is the best value for it? In this article, I will try to make things simpler with an example that shows how the learning rate is useful for training an ANN. I will start by explaining the example and its Python code before working with the learning rate.

Example

A very simple example is used to keep complexity out of the way and let us focus on the learning rate. A single numerical input is applied to a single layer perceptron. If the input is 250 or smaller, its value is returned as the output of the network. If the input is larger than 250, the output is clipped to 250. Figure 1 shows a table with the 6 samples used for training.

ANN Architecture

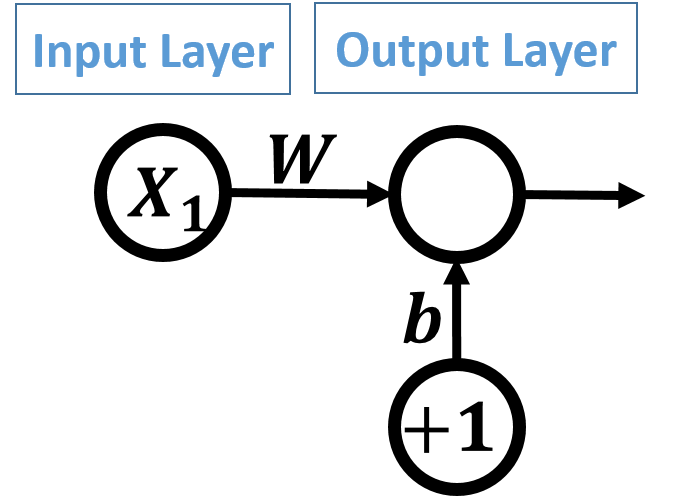

The architecture of the ANN is shown in figure 2. There are only input and output layers. The input layer has a single neuron for our single input. The output layer has a single neuron that generates the output and is responsible for mapping the input to the correct output. A bias with value +1 and weight b is applied to the output layer neuron, and the input has a weight W.

Activation Function

The equation and the graph of the activation function used in this example are shown in figure 3. When the input is less than or equal to 250, the output is the same as the input. Otherwise, it is clipped to 250.
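As a quick check of this rule, here is a minimal sketch that applies the clipping to several values at once. It uses numpy.minimum as a vectorized stand-in for the piecewise definition. The name clip_at_250 is only illustrative and is not part of the network code discussed later.

```python
import numpy

def clip_at_250(values):
    # Element-wise minimum of each value and 250:
    # values <= 250 pass through unchanged, larger values become 250.
    return numpy.minimum(values, 250)

# The six training inputs used in this article.
samples = numpy.array([60, 40, 100, 300, -50, 310])
print(clip_at_250(samples))
```

Only the two inputs above 250 are changed; every other value comes back as it went in.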

Implementation using Python

The Python code implementing the entire network is shown below. We will go through all of it to make it as easy as possible, then focus on changing the learning rate to find out how it affects network training.

     1. import numpy
     2.
     3. def activation_function(inpt):
     4.     if(inpt > 250):
     5.         return 250 # clip the result to 250
     6.     else:
     7.         return inpt # just return the input
     8.
     9. def prediction_error(desired, expected):
    10.     return numpy.abs(numpy.mean(desired-expected)) # absolute error
    11.
    12. def update_weights(weights, predicted, idx):
    13.     weights = weights + .00001*(desired_output[idx] - predicted)*inputs[idx] # updating weights
    14.     return weights # new updated weights
    15.
    16. weights = numpy.array([0.05, .1]) # bias & weight of input
    17. inputs = numpy.array([60, 40, 100, 300, -50, 310]) # training inputs
    18. desired_output = numpy.array([60, 40, 100, 250, -50, 250]) # training outputs
    19.
    20. def training_loop(inpt, weights):
    21.     error = 1
    22.     idx = 0 # start by the first training sample
    23.     iteration = 0 # loop iteration variable
    24.     while(iteration < 2000 or error >= 0.01): # at least 2000 iterations, then until the error drops below 0.01
    25.         predicted = activation_function(weights[0]*1+weights[1]*inputs[idx])
    26.         error = prediction_error(desired_output[idx], predicted)
    27.         weights = update_weights(weights, predicted, idx)
    28.         idx = idx + 1 # go to the next sample
    29.         idx = idx % inputs.shape[0] # restricts the index to the range of our samples
    30.         iteration = iteration + 1 # next iteration
    31.     return error, weights
    32.
    33. error, new_weights = training_loop(inputs, weights)
    34. print('--------------Final Results----------------')
    35. print('Learned Weights : ', new_weights)
    36. new_inputs = numpy.array([10, 240, 550, -160])
    37. new_outputs = numpy.array([10, 240, 250, -160])
    38. for i in range(new_inputs.shape[0]):
    39.     print('Sample ', i+1, '. Expected = ', new_outputs[i], ' , Predicted = ', activation_function(new_weights[0]*1+new_weights[1]*new_inputs[i]))

Lines 17 and 18 create two arrays (inputs and desired_output) holding the training input and output data presented in the previous table. Each input has an output according to the activation function used.

Line 16 creates an array of the network weights. There are just two weights: one for the input and another for the bias. They were arbitrarily initialized to 0.05 for the bias and 0.1 for the input.

The activation function itself is implemented by the activation_function(inpt) function in lines 3 to 7. It accepts a single argument, the input, and returns a single value, the expected output.

Because there may be an error in the prediction, we need to measure that error to know how far we are from the correct prediction. For that reason, there is a function in lines 9 to 10 called prediction_error(desired, expected) that accepts two inputs: the desired and expected outputs. It calculates the absolute difference between the desired and expected outputs. The best value for any error is 0. This is the optimal value.
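One detail is worth seeing in isolation. As written, prediction_error takes the absolute value of the mean difference. For a single sample, which is how the training loop calls it, that is simply the absolute difference. For arrays, positive and negative differences can cancel each other. The sketch below illustrates this; mean_absolute_error is a hypothetical helper added only for comparison and is not used anywhere in the article's code.

```python
import numpy

def prediction_error(desired, expected):
    # Same formula as in the article: absolute value of the mean difference.
    return numpy.abs(numpy.mean(desired - expected))

def mean_absolute_error(desired, expected):
    # Hypothetical alternative: mean of the absolute differences.
    return numpy.mean(numpy.abs(desired - expected))

# Single sample: plain absolute difference.
single = prediction_error(60, 6.05)

# Two samples whose errors have opposite signs.
desired = numpy.array([10.0, 20.0])
predicted = numpy.array([11.0, 19.0])
cancelled = prediction_error(desired, predicted)      # differences -1 and +1 average to 0
per_sample = mean_absolute_error(desired, predicted)  # absolute differences average to 1

print(single, cancelled, per_sample)
```

Since the training loop passes one sample at a time, the cancellation does not affect the example in this article.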

What if there is a prediction error? In this case, we must make a change to the network. But what exactly do we change? The network weights. For updating the network weights, there is a function called update_weights(weights, predicted, idx) defined in lines 12 to 14. It accepts three inputs: the old weights, the predicted output, and the index of the input that was predicted incorrectly. The equation used to update the weights is shown in figure 4.

The equation uses the weights of the current step (n) to generate the weights of the next step (n+1). This equation is what we will use to see how the learning rate affects the learning process.
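Read off line 13 of the code, the update rule can be written as follows. The symbols are notation assumed here and may differ from those used in the figure: W(n) holds the weights at step n, η is the learning rate (.00001 in the code), d(n) is the desired output, Y(n) is the predicted output, and X(n) is the input.

```latex
W(n+1) = W(n) + \eta \, \bigl[ d(n) - Y(n) \bigr] \, X(n)
```

Note that in the code the same scalar term η[d(n) - Y(n)]X(n) is added to both entries of the weights array, the bias weight and the input weight.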

Finally, we need to combine all of these to make the network learn. This is done by the training_loop(inpt, weights) function defined in lines 20 to 31. It enters a training loop that maps the inputs to their outputs with the least possible prediction error.

The loop does three operations:

- Output Prediction.

- Error Calculation.

- Updating Weights.
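The three operations can be sketched as a single step on one sample. This is a condensed restatement of lines 25 to 27, not a replacement for them; the function training_step and the constant LEARNING_RATE are names introduced here for illustration.

```python
import numpy

LEARNING_RATE = 0.00001  # same value as in line 13 of the article's code

def activation_function(inpt):
    return 250 if inpt > 250 else inpt

def training_step(weights, sample_input, desired):
    # 1. Output prediction: bias*1 + W*input, passed through the activation.
    predicted = activation_function(weights[0] * 1 + weights[1] * sample_input)
    # 2. Error calculation: absolute difference for this sample.
    error = abs(desired - predicted)
    # 3. Updating weights: the same scalar is added to both entries, as in the article.
    new_weights = weights + LEARNING_RATE * (desired - predicted) * sample_input
    return predicted, error, new_weights

weights = numpy.array([0.05, 0.1])  # [bias weight, input weight]
predicted, error, weights = training_step(weights, 60, 60)
print(predicted, error, weights)
```

For the first training sample this gives a prediction of about 6.05, an error of about 53.95, and weights that each grow by about 0.03237.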

Now that the example and its Python code are clear, let us see how the learning rate helps in getting the best results.

Learning Rate

In the previously discussed example, line 13 has the weights update equation in which the learning rate is used. At first, let us assume that we do not use the learning rate at all. The equation will be as follows:

    weights = weights + (desired_output[idx] - predicted)*inputs[idx]

Let us see the effect of removing the learning rate. In the first iteration of the training loop, the network has the following inputs (b=0.05 and W=0.1, Input = 60, and desired output=60).

The expected output, which is the result of the activation function in line 25, will be activation_function(0.05(+1) + 0.1(60)). The predicted output will be 6.05.

In line 26, the prediction error will be calculated by getting the difference between the desired and the predicted output. The error will be abs(60-6.05)=53.95.

Then in line 27 the weights will get updated according to the above equation. The new weights will be [0.05, 0.1] + (53.95)*60 = [0.05, 0.1] + 3237 = [3237.05, 3237.1].

The new weights are very different from the previous weights. Each weight increased by 3,237, which is too large. But let us continue and make the next prediction.

In the next iteration, the network will have these inputs applied: (b=3237.05 and W=3237.1, Input = 40, and desired output=40). The expected output will be activation_function(3237.05 + 3237.1(40)) = 250. The prediction error will be abs(40-250) = 210. The error is very large. It is larger than the previous error. Thus we have to update the weights again. According to the above equation, the new weights will be [3237.05, 3237.1] + (-210)*40 = [3237.05, 3237.1] + -8400 = [-5162.95, -5162.9].
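The two iterations worked out above can be reproduced with a short sketch. It repeats the article's activation function and update equation with the learning rate removed; the history list is a name introduced here only to keep the intermediate values.

```python
import numpy

def activation_function(inpt):
    return 250 if inpt > 250 else inpt

weights = numpy.array([0.05, 0.1])  # [bias weight, input weight]
history = []

# First two training samples: (input, desired output).
for sample_input, desired in [(60, 60), (40, 40)]:
    predicted = activation_function(weights[0] * 1 + weights[1] * sample_input)
    error = abs(desired - predicted)
    # Update with no learning rate (equivalent to a learning rate of 1).
    weights = weights + (desired - predicted) * sample_input
    history.append((predicted, error, weights.copy()))
    print(predicted, error, weights)
```

The weights jump to about [3237.05, 3237.1] after the first sample and to about [-5162.95, -5162.9] after the second, matching the hand calculation.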

The table shown in figure 5 summarizes the results of the first three iterations.

As we go through more iterations, the results get worse. The magnitude of the weights changes rapidly, and sometimes their signs change too. They move from very large positive values to very large negative values. How can we stop these large and abrupt changes in the weights? How can we scale down the value by which the weights are updated?

If we look at the value by which the weights change in the previous table, it is very large. This means that the network changes its weights at high speed. It is like someone who makes large moves in a short time: at one moment the person is in the far east, and a very short time later that person is in the far west. We just need to slow it down.

If we can scale this value down, everything will be all right. But how?

Going back to the part of the code that generates this value, it is the update equation that generates it. Specifically this part:

    (desired_output[idx] - predicted)*inputs[idx]

We can scale this part by multiplying it by a small value such as 0.1. So, rather than 3237.0 as the update value in the first iteration, it will be reduced to just 323.7. We can scale it down even further by decreasing the scale value to, say, 0.001. Using 0.001, the value will be just 3.237.
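The effect of the scale value on the first update is easy to check numerically. In this small sketch, update_value is the unscaled update from the first iteration of the worked example, and scaled_updates is a name introduced here to hold the results.

```python
# Unscaled update from the first iteration: (desired - predicted) * input.
update_value = (60 - 6.05) * 60

scaled_updates = {}
for scale in [1, 0.1, 0.001, 0.00001]:
    scaled_updates[scale] = scale * update_value
    print(scale, round(scaled_updates[scale], 5))
```

A scale of 1 leaves the update at about 3237, while .00001, the value used in line 13 of the code, shrinks it to about 0.03237.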

Now we have it. This scaling value is the learning rate. Choosing small values for the learning rate makes the weight updates smaller and avoids abrupt changes. As the value gets larger, the changes get faster, and the results get worse.

But what is the best value for the learning rate?

No single value can be called the best for the learning rate. The learning rate is a hyperparameter, and a hyperparameter has its value determined by experiment. We try different values and use the one that gives the best results. There are also techniques that help in selecting hyperparameter values.
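Such an experiment can be sketched for our small problem as follows. This is only an illustration under several assumptions: the candidate values, the fixed budget of 2000 steps, the mean absolute error on the training samples as the score, and the helper names train and mean_training_error are all choices made here, and the targets are generated from the clipping rule rather than typed in. A value of 1.0 corresponds to using no learning rate, so its weights may overflow, which is why NumPy floating-point warnings are silenced.

```python
import numpy

inputs = numpy.array([60, 40, 100, 300, -50, 310])
desired_output = numpy.minimum(inputs, 250)  # targets follow the clipping rule

def activation_function(inpt):
    return 250 if inpt > 250 else inpt

def train(learning_rate, steps=2000):
    # Same per-sample update as the article, with a configurable learning rate.
    weights = numpy.array([0.05, 0.1])
    for step in range(steps):
        idx = step % inputs.shape[0]
        predicted = activation_function(weights[0] * 1 + weights[1] * inputs[idx])
        weights = weights + learning_rate * (desired_output[idx] - predicted) * inputs[idx]
    return weights

def mean_training_error(weights):
    # Mean absolute error over the six training samples.
    predictions = numpy.array(
        [activation_function(weights[0] * 1 + weights[1] * x) for x in inputs],
        dtype=float,
    )
    return numpy.mean(numpy.abs(desired_output - predictions))

results = {}
with numpy.errstate(all='ignore'):  # large learning rates may overflow to inf/nan
    for learning_rate in [1.0, 0.0001, 0.00001, 0.000001]:
        results[learning_rate] = mean_training_error(train(learning_rate))
        print(learning_rate, results[learning_rate])
```

Comparing the printed errors side by side is the simplest form of the trial-and-error selection described above.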

Testing Network

For our problem, I found that a value of .00001 works fine. After training the network with that learning rate, we can test it. The table in figure 6 shows the prediction results for 4 new testing samples. The results are now much better after using the learning rate.

The original article is available on LinkedIn at this link: https://www.linkedin.com/pulse/learning-rate-useful-artificial-neural-networks-ahmed-gad/

It is also shared on KDnuggets at this page: https://www.kdnuggets.com/2018/01/learning-rate-useful-neural-network.html

The article was reshared at TDS on 28-6-2018.

For contacting the author

Ahmed Fawzy Gad

LinkedIn: https://linkedin.com/in/ahmedfgad

E-mail: ahmed.f.gad@gmail.com