Price Elasticity of Demand, Statistical Modeling with Python

How to maximize profit

Price elasticity of demand (PED) is a measure used in economics to show the responsiveness, or elasticity, of the quantity demanded of a good or service to a change in its price when nothing but the price changes. More precisely, it gives the percentage change in quantity demanded in response to a one percent change in price.
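As a minimal sketch of this definition, the ratio of percentage changes can be computed directly from two price and quantity observations. The function name `price_elasticity` and the numbers are hypothetical, and the midpoint (arc) formula used here is only one common convention for the percentage changes.

```python
def price_elasticity(p0, q0, p1, q1):
    """Arc (midpoint) price elasticity of demand between two observations."""
    # Percentage changes measured relative to the midpoint of each pair.
    pct_change_q = (q1 - q0) / ((q0 + q1) / 2)
    pct_change_p = (p1 - p0) / ((p0 + p1) / 2)
    return pct_change_q / pct_change_p


# Hypothetical numbers: price rises from 10 to 12, quantity falls from 100 to 90.
ped = price_elasticity(10.0, 100.0, 12.0, 90.0)
print(round(ped, 3))  # prints -0.579
```

An absolute value below 1, as in this made-up example, is usually read as inelastic demand: quantity changes proportionally less than price.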

In economics, elasticity is a measure of how sensitive demand or supply is to price.

In marketing, it is how sensitive consumers are to a change in price of a product.

It gives answers to questions such as:

- “If I lower the price of a product, how much more will I sell?”

- “If I raise the price of one product, how will that affect sales of the other products?”

- “If the market price of a product goes down, how much will that affect the amount that firms will be willing to supply to the market?”

We will build a linear regression model to estimate PED, using Python’s Statsmodels to fit the models, run statistical tests, and explore the data. Let’s get started!

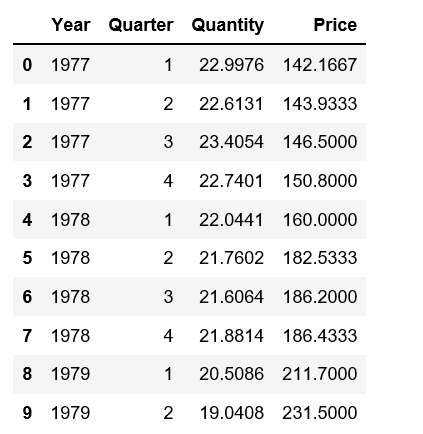

The data

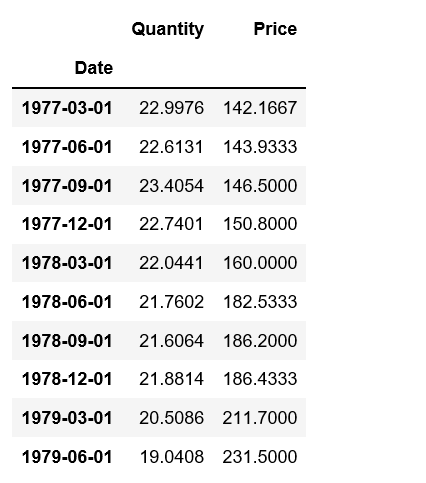

We will work with the beef price and demand data that can be downloaded from here.

%matplotlib inline

from __future__ import print_function

from statsmodels.compat import lzip

import numpy as np

import pandas as pd

import matplotlib.pyplot as plt

import statsmodels.api as sm

from statsmodels.formula.api import ols

beef = pd.read_csv('beef.csv')

beef.head(10)

Regression Analysis

Ordinary Least Squares (OLS) Estimation

beef_model = ols("Quantity ~ Price", data=beef).fit()

print(beef_model.summary())

Observations:

- The small P values indicate that we can reject the null hypothesis that Price has no effect on Quantity.

- A high R-squared indicates that our model explains much of the variability in the response.

- In regression analysis, we’d like our regression model to have significant variables and to produce a high R-squared value.

- We will show graphs to help interpret regression analysis results more intuitively.
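Before turning to the graphs, note that the slope of a linear model such as Quantity ~ Price is a change in units, not in percent, so it is not itself the elasticity. One common way to turn it into a PED estimate is to scale the slope by the ratio of mean price to mean quantity; another is to fit a log-log model, whose slope can be read as a constant elasticity. The sketch below illustrates both on synthetic data (an assumption, not the beef data set) and uses NumPy's `polyfit` instead of Statsmodels to stay self-contained.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic stand-in for the beef data: quantity falls as price rises.
price = np.linspace(140.0, 200.0, 40)
quantity = 30.0 - 0.05 * price + rng.normal(0.0, 0.2, size=price.size)

# Linear fit: quantity = intercept + slope * price.
slope, intercept = np.polyfit(price, quantity, 1)

# Point elasticity at the sample means: (dQ/dP) * (mean P / mean Q).
ped_at_mean = slope * price.mean() / quantity.mean()

# Log-log fit: the slope of log(Q) on log(P) is a constant elasticity.
ped_loglog, _ = np.polyfit(np.log(price), np.log(quantity), 1)

print(f"slope:               {slope:.4f}")
print(f"PED at sample means: {ped_at_mean:.3f}")
print(f"PED from log-log:    {ped_loglog:.3f}")
```

With the article's fitted model, the same scaling could be applied to `beef_model.params['Price']` together with the means of the `Price` and `Quantity` columns.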

fig = plt.figure(figsize=(12,8))

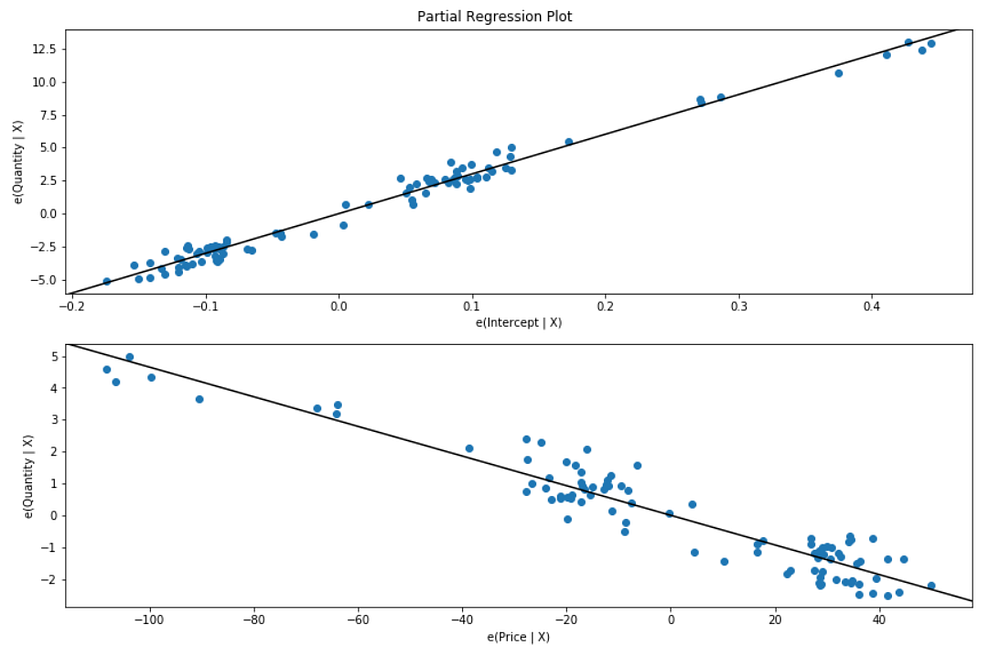

fig = sm.graphics.plot_partregress_grid(beef_model, fig=fig)

The trend indicates that the predictor variable (Price) provides information about the response (Quantity). The data points fall close to the regression line, and the predictions are precise, with a prediction interval that extends from about 29 to 31.

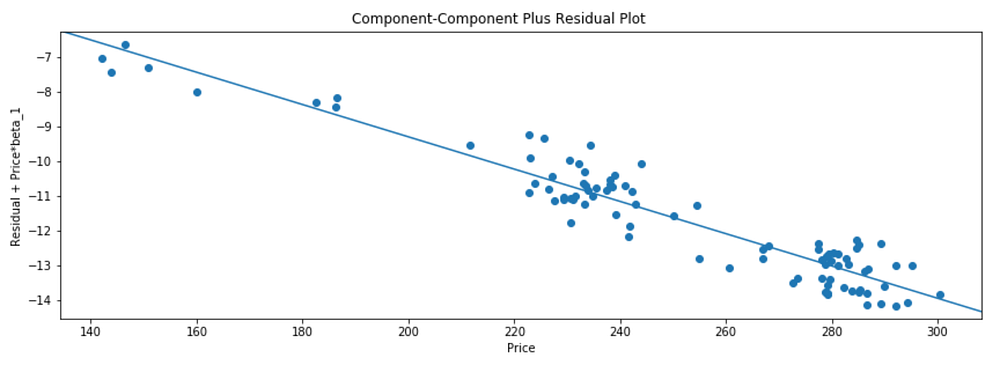

Component-Component plus Residual (CCPR) Plots

The CCPR plot provides a way to judge the effect of one regressor on the response variable by taking into account the effects of the other independent variables.

fig = plt.figure(figsize=(12, 8))

fig = sm.graphics.plot_ccpr_grid(beef_model, fig=fig)

As you can see, the relationship between Price and the variation in Quantity that it explains is clearly linear. Few observations exert considerable influence on the relationship.
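To make the plotted quantity concrete, here is a small sketch of what "component plus residual" means, computed by hand with NumPy on synthetic data (an assumption, not the beef data set). The component for Price is its fitted contribution, and the residuals are added back on top of it.

```python
import numpy as np

rng = np.random.default_rng(2)

# Synthetic stand-in for the beef data.
price = np.linspace(140.0, 200.0, 40)
quantity = 30.0 - 0.05 * price + rng.normal(0.0, 0.2, size=price.size)

# OLS via least squares on the design matrix [1, price].
X = np.column_stack([np.ones_like(price), price])
beta, *_ = np.linalg.lstsq(X, quantity, rcond=None)
residuals = quantity - X @ beta

# Component: the fitted contribution of Price alone.
component = beta[1] * price

# Component plus residual: what a CCPR plot shows on the vertical axis.
component_plus_residual = component + residuals
```

With a single regressor, as in our model, this quantity is simply Quantity minus the intercept, so the CCPR plot carries the same information as a scatter plot of Quantity against Price. It becomes more informative once the model has several regressors.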

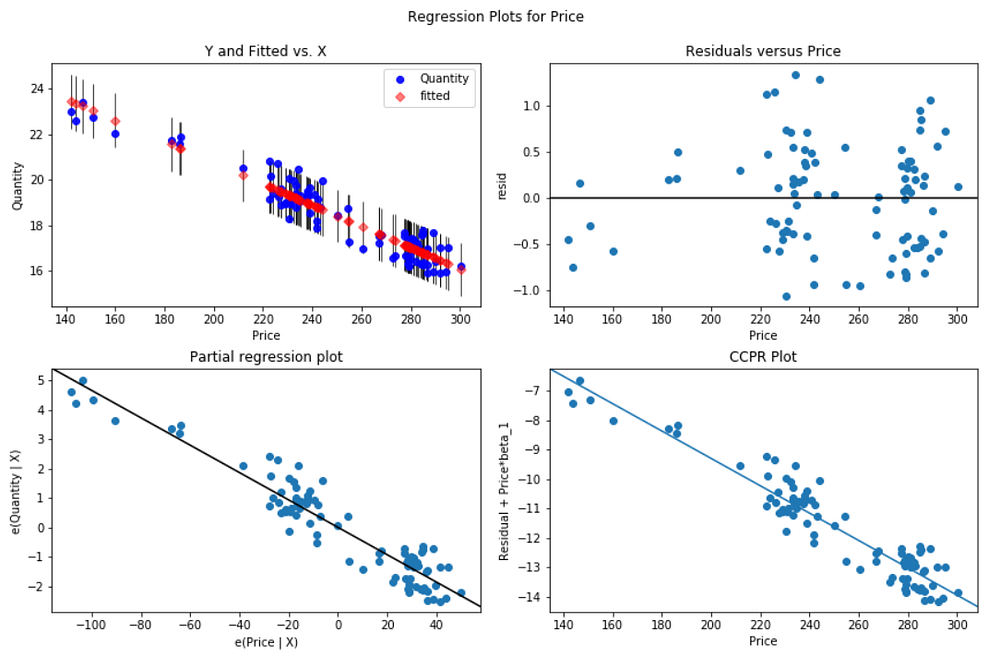

Regression plots

We use the plot_regress_exog function to quickly check model assumptions with respect to a single regressor, Price in this case.

fig = plt.figure(figsize=(12,8))

fig = sm.graphics.plot_regress_exog(beef_model, 'Price', fig=fig)

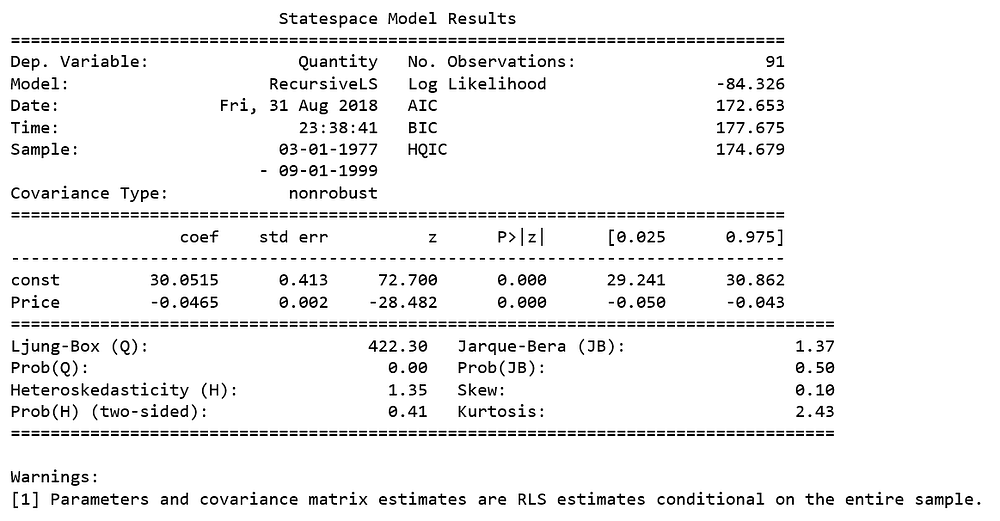

Recursive Least Squares (RLS)

Finally, we apply a Recursive Least Squares (RLS) filter to investigate parameter instability.

Before RLS estimation, we will manipulate the data and create a date time index.

from pandas.tseries.offsets import BQuarterBegin

beef['Year'] = pd.to_datetime(beef['Year'], format="%Y")

beef['Date'] = beef.apply(lambda x:(x['Year'] + BQuarterBegin(x['Quarter'])), axis=1)

beef.drop(['Year', 'Quarter'], axis=1, inplace=True)

beef.set_index('Date', inplace=True)

beef.head(10)
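For readers who prefer plain calendar quarters, the sketch below shows one alternative way to build a date index from Year and Quarter columns. It is not the approach used above: it maps each quarter to the first day of its first calendar month instead of applying a business-quarter offset, so the resulting dates differ. The rows are made-up placeholder values, not the beef data.

```python
import pandas as pd

# Made-up rows with the same Year/Quarter layout (values are placeholders).
df = pd.DataFrame({
    "Year": [1977, 1977, 1977, 1977, 1978],
    "Quarter": [1, 2, 3, 4, 1],
    "Quantity": [1.0, 2.0, 3.0, 4.0, 5.0],
    "Price": [10.0, 11.0, 12.0, 13.0, 14.0],
})

# First month of each quarter: Q1 -> 1, Q2 -> 4, Q3 -> 7, Q4 -> 10.
parts = pd.DataFrame({
    "year": df["Year"],
    "month": 3 * (df["Quarter"] - 1) + 1,
    "day": 1,
})

# pd.to_datetime assembles timestamps from year/month/day columns.
df["Date"] = pd.to_datetime(parts)
df = df.drop(columns=["Year", "Quarter"]).set_index("Date")
print(df)
```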

RLS estimation

endog = beef['Quantity']

exog = sm.add_constant(beef['Price'])

mod = sm.RecursiveLS(endog, exog)

res = mod.fit()

print(res.summary())

The RLS model computes the regression parameters recursively, so there are as many estimates as there are data points. The summary table presents only the parameters estimated on the entire sample; these estimates are equivalent to the OLS estimates.
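The idea of recursive estimates, and their agreement with OLS on the full sample, can be illustrated with a brute-force sketch. It refits ordinary least squares on an expanding window with NumPy, which is a simplification for illustration and not how Statsmodels computes the recursion internally. The data are synthetic.

```python
import numpy as np

rng = np.random.default_rng(3)

# Synthetic stand-in for the beef data.
n = 40
price = np.linspace(140.0, 200.0, n)
quantity = 30.0 - 0.05 * price + rng.normal(0.0, 0.2, size=n)

X = np.column_stack([np.ones(n), price])
k = X.shape[1]

# One coefficient vector per sample size t = k, ..., n (first t rows only).
recursive_coefs = np.array([
    np.linalg.lstsq(X[:t], quantity[:t], rcond=None)[0]
    for t in range(k, n + 1)
])

# OLS on the entire sample for comparison.
full_sample, *_ = np.linalg.lstsq(X, quantity, rcond=None)

print("last recursive estimate:", recursive_coefs[-1])
print("full-sample OLS:        ", full_sample)
```

The early rows of `recursive_coefs` are noisy because they rest on very few observations, which is why recursive coefficient plots typically settle down only after an initial stretch.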

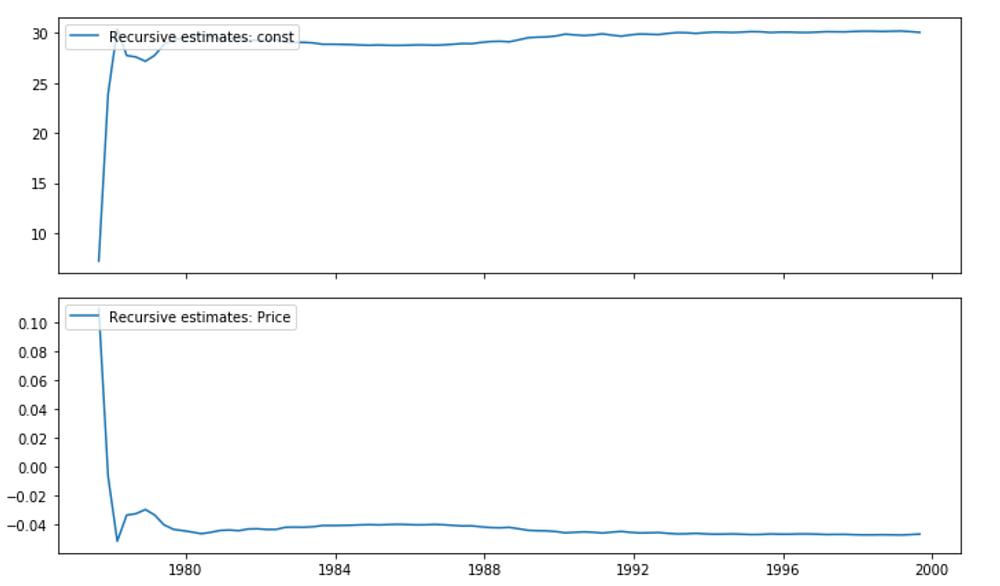

RLS plots

We can generate the recursively estimated coefficients plot on a given variable.

res.plot_recursive_coefficient(range(mod.k_exog), alpha=None, figsize=(10,6));

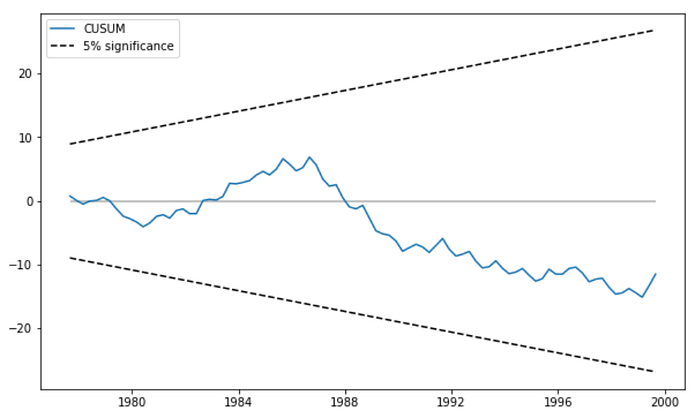

For convenience, we visually check for parameter stability using the plot_cusum function.

fig = res.plot_cusum(figsize=(10,6));

In the plot above, the CUSUM statistic does not move outside of the 5% significance bands, so we fail to reject the null hypothesis of stable parameters at the 5% level.
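For intuition about what the CUSUM statistic measures, here is a hand-rolled sketch in the spirit of the Brown, Durbin and Evans (1975) test, written with NumPy on synthetic data. The scaling by the sample standard deviation and the band constant 0.948 for the 5% level follow one common textbook convention; treat the details as assumptions, not as a reproduction of the Statsmodels internals.

```python
import numpy as np

rng = np.random.default_rng(4)

# Synthetic stand-in for the beef data, generated with stable parameters.
n = 40
price = np.linspace(140.0, 200.0, n)
quantity = 30.0 - 0.05 * price + rng.normal(0.0, 0.2, size=n)

X = np.column_stack([np.ones(n), price])
k = X.shape[1]

# Recursive residuals: standardized one-step-ahead forecast errors.
w = []
for t in range(k, n):
    P = np.linalg.pinv(X[:t])      # maps past responses to coefficients
    beta_prev = P @ quantity[:t]   # estimate from the first t observations
    h = X[t] @ P                   # weights of past observations in the forecast
    forecast_var = 1.0 + h @ h     # forecast variance, up to the factor sigma^2
    w.append((quantity[t] - X[t] @ beta_prev) / np.sqrt(forecast_var))
w = np.array(w)

# CUSUM: cumulative sum of recursive residuals, scaled by their sample std.
cusum = np.cumsum(w) / w.std(ddof=1)

# 5% significance bands that widen linearly over the sample.
steps = np.arange(1, n - k + 1)
band = 0.948 * (np.sqrt(n - k) + 2.0 * steps / np.sqrt(n - k))

stable = bool(np.all(np.abs(cusum) <= band))
print("CUSUM stays inside the 5% bands:", stable)
```

If the parameters drift, the one-step-ahead forecast errors tend to share a sign, so their cumulative sum wanders away from zero and eventually crosses a band.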

Source code can be found on GitHub. Have a wonderful long weekend!
