
An Overview of Data Preprocessing: Feature Enrichment, Automatic Feature Selection

Useful feature engineering methods with Python implementations, all in one place

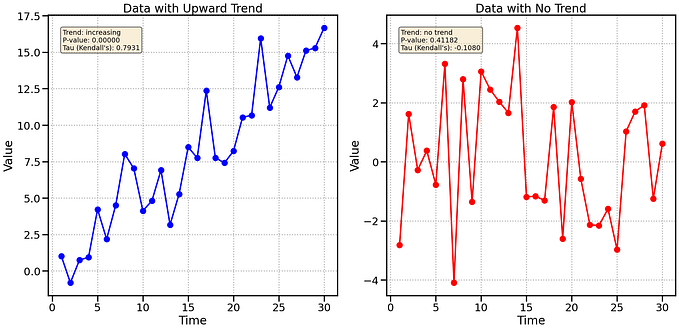

A dataset should be prepared so that it suits the machine learning model being trained and so that the algorithm's predictions are more accurate. A look at almost any dataset shows that some features are more important than others, that is, they have more impact on the output. How a feature is represented matters too: for example, replacing a feature's values with their logarithms can give better results, and other mathematical operations such as the square root or the exponential can also help. The key is to choose the preprocessing method that suits the model and the project. This article looks at the dataset from several angles to make it easier for algorithms to learn from it. Each method is illustrated with a Python implementation.
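As a quick illustration of why a logarithmic transform can help, here is a minimal sketch that uses only the Python standard library. The feature is synthetic (drawn from a log-normal distribution purely for illustration), and the `skewness` helper is a hypothetical name, not part of any library. It compares how lopsided the values are before and after taking logs.

```python
import math
import random
import statistics


def skewness(values):
    """Population skewness: the mean of the cubed z-scores."""
    mean = statistics.fmean(values)
    std = statistics.pstdev(values)
    return sum(((v - mean) / std) ** 3 for v in values) / len(values)


random.seed(0)  # fixed seed so the run is repeatable

# Synthetic right-skewed feature (an assumption for this sketch):
# many small values and a long tail of large ones.
feature = [random.lognormvariate(0.0, 1.0) for _ in range(5000)]

# Log transform: compresses the long right tail.
log_feature = [math.log(v) for v in feature]

skew_raw = skewness(feature)
skew_log = skewness(log_feature)

print(f"skewness before log transform: {skew_raw:.2f}")
print(f"skewness after log transform:  {skew_log:.2f}")
```

A skewness close to zero means the values are roughly symmetric around their mean, which many linear models handle more comfortably than a long-tailed feature. Whether this actually improves a given model still depends on the data and the algorithm.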

Table of Contents (TOC)

1. Binning

2. Polynomial & Interaction Features

3. Non-Linear Transform

3.1. Log Transform

3.2. Square Root Transform

3.3. Exponential Transform

3.4. Box-Cox Transform

3.5. Reciprocal Transform

4. Automatic Feature Selection

4.1. Analysis of Variance (ANOVA)

4.2. Model-Based Feature Selection

4.3. Iterative Feature Selection